At its core, medical image acquisition is the first, and arguably most crucial, step in seeing inside the human body without a scalpel. It’s the process of creating incredibly detailed digital pictures that translate physical properties—like how dense a bone is or how much water is in a muscle—into data. This data is the bedrock for everything that follows, from a doctor's diagnosis to complex AI analysis.

Understanding the Core of Medical Imaging

It helps to think of this process less like snapping a photo and more like conducting a sophisticated geological survey. A surveyor uses different tools to map what’s underground, and in the same way, medical professionals use various scanning technologies—what we call modalities—to capture specific biological information. Each modality offers a unique window into the body’s internal landscape.

You can't overstate how important this initial step is. The quality of the data gathered right at the start directly impacts every subsequent action. A fuzzy, low-quality scan can hide a critical diagnosis, while a crystal-clear image gives a radiologist—or an AI model—the raw material needed to make the right call.

The Journey from Body to Screen

So, how does it actually work? The process kicks off when a machine, like a CT scanner or an MRI, interacts with the patient's body. These devices don't just "see" inside you in the traditional sense; they measure how your tissues respond to different forms of energy. A CT scanner, for instance, records how tissues absorb X-rays, while an MRI maps out the behavior of water molecules when they’re placed in a powerful magnetic field.

Those raw physical measurements are then fed into powerful computers that translate them into digital signals. This is where the real magic happens, as invisible phenomena are converted into a detailed 2D or 3D picture we can see on a screen.

In essence, image acquisition is a translation process. It converts physical events happening inside the body into a digital format that can be stored, shared, and analyzed. Every single pixel in that final medical image represents a precise data point gathered from the patient.

Why the Right Modality Matters

Choosing the right tool for the job is everything in medical imaging. Each modality has its own set of strengths and is tailored for specific diagnostic questions. The decision always comes down to what a clinician needs to visualize.

- For dense structures: A CT scan is the go-to for looking at bone. It's perfect for spotting fractures or getting a clear view of the skull.

- For soft tissues: When it comes to the brain, ligaments, or internal organs, nothing beats an MRI for its stunning detail.

- For real-time processes: Ultrasound is brilliant for watching things in motion, whether it's blood pumping through an artery or a baby moving in the womb.

Ultimately, the goal is always the same: capture the most diagnostically useful information with the least possible risk to the patient. This foundational act of data gathering is where modern medicine truly begins, providing the clear, accurate views doctors need to make life-saving decisions.

Comparing Major Medical Imaging Modalities

Choosing the right tool for medical imaging is a bit like a photographer picking the right lens for a shot. Each imaging modality gives us a completely different view of the human body, with unique strengths perfectly suited for specific diagnostic puzzles. This is why a clinician might order a CT scan for a head injury but an MRI for a torn ligament—it’s all about getting the clearest possible picture for the job at hand.

In modern medicine, four main players dominate the field: Computed Tomography (CT), Magnetic Resonance Imaging (MRI), Ultrasound, and Positron Emission Tomography (PET). While they all create images of our insides, the physics behind them are worlds apart, providing clinicians with very different kinds of information.

Computed Tomography: The 3D X-Ray

A Computed Tomography (CT) scan is basically a supercharged, three-dimensional X-ray. Instead of taking a single, flat picture, a CT scanner spins an X-ray source around the body and records measurements from many angles. A computer reconstructs those measurements into hundreds of cross-sectional "slices," then digitally stacks the slices to build a highly detailed 3D model.

Imagine trying to understand a whole loaf of bread by just looking at it from the top. A CT scan is like slicing that loaf into hundreds of paper-thin pieces, allowing you to inspect every single crumb and air pocket. This approach is fantastic for visualizing dense materials.
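The stacking step can be sketched in a few lines of Python. This is a minimal illustration, not how any particular scanner works: it assumes each slice is already available as a small 2D grid with a known position along the body, and the `Slice` type and `stack_slices` function are hypothetical names made up for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Slice:
    """One cross-sectional image (hypothetical container for illustration)."""
    z_mm: float               # position along the scanner's long axis, in mm
    pixels: List[List[int]]   # 2D grid of intensity values (rows x columns)


def stack_slices(slices: List[Slice]) -> List[List[List[int]]]:
    """Order slices by position and stack them into a volume [z][row][col]."""
    if not slices:
        raise ValueError("no slices to stack")
    shape = (len(slices[0].pixels), len(slices[0].pixels[0]))
    for s in slices:
        if (len(s.pixels), len(s.pixels[0])) != shape:
            raise ValueError("all slices must have the same dimensions")
    # Sort by physical position, not by arrival order.
    ordered = sorted(slices, key=lambda s: s.z_mm)
    return [s.pixels for s in ordered]


# Three tiny 2x2 slices, deliberately supplied out of order.
scan = [
    Slice(z_mm=10.0, pixels=[[3, 3], [3, 3]]),
    Slice(z_mm=0.0, pixels=[[1, 1], [1, 1]]),
    Slice(z_mm=5.0, pixels=[[2, 2], [2, 2]]),
]
volume = stack_slices(scan)
print(len(volume), "slices stacked; first slice value:", volume[0][0][0])
```

Sorting by position rather than by arrival order is the key design choice here, since slices are not guaranteed to be stored in anatomical order.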

That’s why CT scans are perfect for:

- Bone Fractures: They give incredibly clear views of complex breaks and bone injuries.

- Internal Bleeding: CT is often the quickest way to spot dangerous bleeding in the brain or abdomen after an accident.

- Tumor Detection: It's a go-to for finding and tracking tumors in the chest, abdomen, and pelvis.

The biggest plus for CT is speed. A full scan can be over in minutes, which is absolutely critical in an emergency. The main downside is that it uses ionizing radiation. The dose from a single scan is generally small, though higher than that of a standard X-ray, so doctors are careful to use CT only when it is clinically justified.

Magnetic Resonance Imaging: Mapping the Body's Water

Unlike CT, Magnetic Resonance Imaging (MRI) uses zero ionizing radiation. Instead, it relies on a powerful magnetic field and radio waves to probe the hydrogen nuclei found mostly in the body's water and fat. Since different tissues, like muscle, fat, and cartilage, differ in how much water they hold and in how those nuclei respond, an MRI can create stunningly detailed images of our soft tissues.

If a CT scan creates a high-res map of the body's solid structures, an MRI gives you a detailed survey of its soft, water-filled terrain. This ability makes it the undisputed champion for looking at the brain, spinal cord, muscles, and ligaments.
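To make the link between the magnet and the radio waves concrete: hydrogen nuclei respond at a resonance frequency that scales with field strength. The sketch below assumes the commonly cited constant of about 42.58 MHz per tesla for hydrogen. The function name is illustrative, and the 1.5 T and 3 T field strengths are typical clinical values chosen for the example, not figures from this article.

```python
# Gyromagnetic ratio of hydrogen nuclei divided by 2*pi, in MHz per tesla
# (approximate, commonly cited value).
HYDROGEN_MHZ_PER_TESLA = 42.58


def larmor_frequency_mhz(field_strength_tesla: float) -> float:
    """Resonance frequency of hydrogen nuclei at a given field strength."""
    if field_strength_tesla <= 0:
        raise ValueError("field strength must be positive")
    return HYDROGEN_MHZ_PER_TESLA * field_strength_tesla


for b0 in (1.5, 3.0):
    print(f"{b0} T magnet -> radio waves near {larmor_frequency_mhz(b0):.2f} MHz")
```

Doubling the field strength doubles the frequency, which is one reason scanners of different strengths need different radio-frequency hardware.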

MRI is the top choice for:

- Neurological Conditions: It’s essential for diagnosing things like multiple sclerosis, brain tumors, and strokes.

- Joint and Ligament Injuries: Nothing beats it for visualizing a torn ACL or damaged shoulder cartilage.

- Spinal Cord Issues: It can pinpoint a herniated disc or spinal tumor with amazing clarity.

The trade-off for this incredible detail is time. MRI scans can take anywhere from 30 to 90 minutes, and the patient has to lie perfectly still inside a narrow, noisy tube. For some people, that can be a real challenge.

Ultrasound: Sound Waves in Real-Time

Ultrasound imaging, also known as sonography, uses high-frequency sound waves—way above what we can hear—to create images in real-time. A small, handheld probe called a transducer sends sound waves into the body and listens for the echoes that bounce back. A computer then instantly translates these echoes into a live video on a screen.

You can think of it as a kind of biological sonar. Just as a submarine pings the ocean floor to map it, ultrasound maps our internal organs and can even show blood flowing through vessels. Its real-time, dynamic nature is what makes it so special.
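The sonar analogy maps onto one line of arithmetic: depth equals speed of sound times half the round-trip time. Here is a minimal sketch, assuming the conventional average speed of sound in soft tissue of about 1540 m/s. The function name and the 130-microsecond example are illustrative choices, not values from this article.

```python
# Conventional average speed of sound in soft tissue (an assumed constant).
SPEED_OF_SOUND_M_PER_S = 1540.0


def echo_depth_cm(round_trip_time_us: float) -> float:
    """Depth of a reflector given the echo's round-trip time in microseconds."""
    if round_trip_time_us < 0:
        raise ValueError("time cannot be negative")
    # The pulse travels to the reflector and back, so halve the time.
    one_way_time_s = (round_trip_time_us * 1e-6) / 2
    return SPEED_OF_SOUND_M_PER_S * one_way_time_s * 100  # metres -> cm


print(f"Echo after 130 us comes from about {echo_depth_cm(130.0):.2f} cm deep")
```

Because the calculation assumes a single speed of sound, structures behind bone or air, where that assumption fails, are hard to image.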

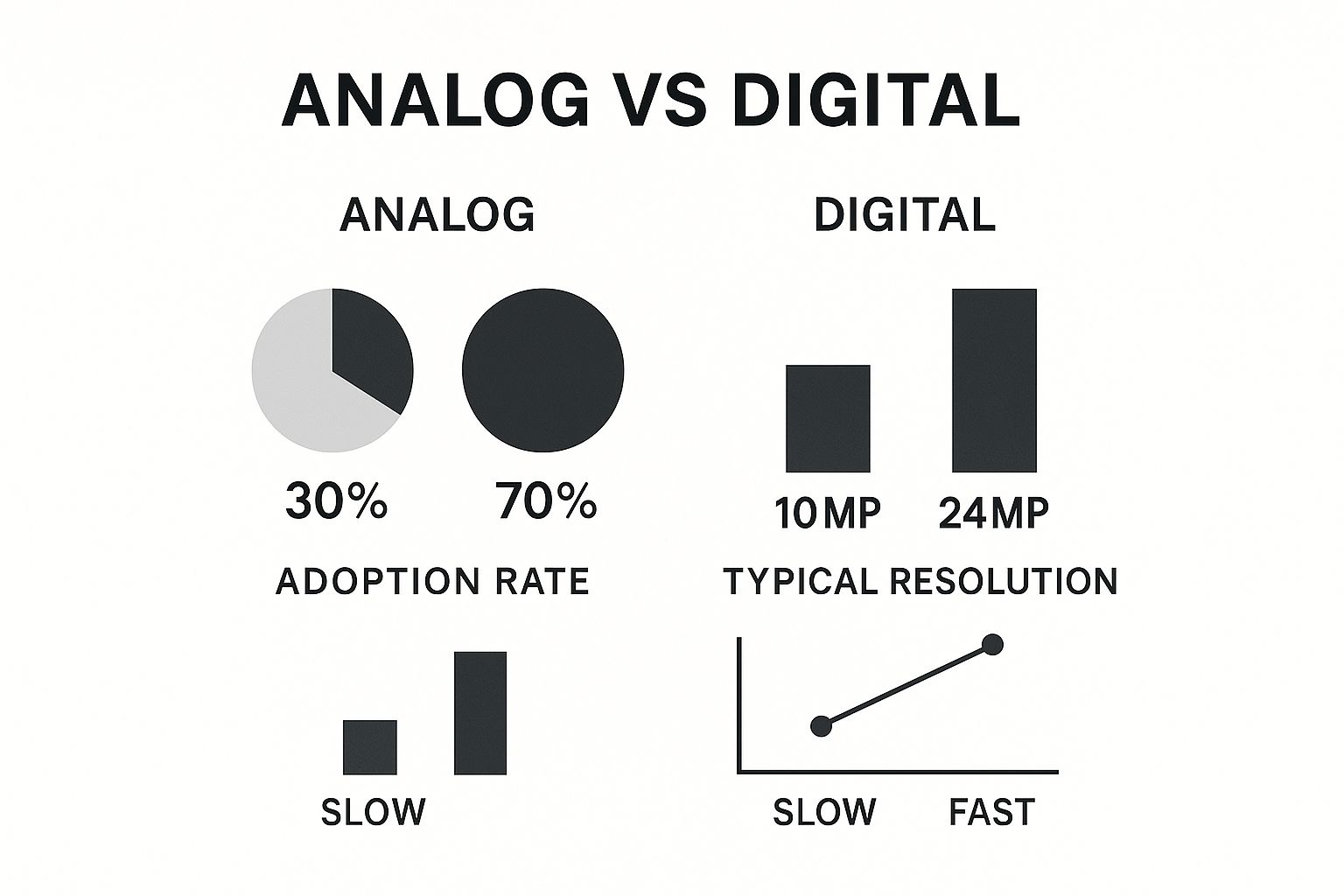

This infographic compares the evolution from older analog methods to today's digital techniques in imaging, highlighting key improvements.

As the graphic shows, digital methods have not only become the standard but also provide much better resolution and faster acquisition times—both huge wins for clinical workflow and patient care.

Positron Emission Tomography: Visualizing Function

Finally, we have Positron Emission Tomography (PET), which is in a class of its own. It shows us metabolic function, not just physical structure. Before the scan, a patient is injected with a tiny amount of a radioactive tracer. This tracer travels through the bloodstream and gets soaked up by the body's tissues. Areas that are working harder and using more energy—like aggressive cancer cells—absorb more of the tracer and "light up" on the scan.

A PET scan reveals what the body's cells are doing, rather than just what they look like. It gives us a functional map of biological activity.

This makes PET scans incredibly powerful in oncology. They’re used to find cancer, see if it has spread, and check if a treatment is actually working. Very often, PET scans are combined with CT scans (in a single machine called a PET-CT) to overlay the functional data onto a detailed anatomical map. This fusion gives doctors the complete story.

Here's a quick side-by-side look at these powerful tools.

Comparing Medical Image Acquisition Modalities

| Modality | Underlying Technology | Best For | Key Advantages | Key Limitations |
|---|---|---|---|---|
| CT | X-rays and computer processing to create cross-sectional "slices" | Bone fractures, internal bleeding, tumor detection, emergency diagnostics | Very fast (minutes), excellent for dense structures, widely available | Uses ionizing radiation, less detail in soft tissues compared to MRI |
| MRI | Powerful magnets and radio waves to map water molecules in tissue | Soft tissues (brain, joints, ligaments, spine), neurological conditions | No ionizing radiation, exceptional soft tissue detail, highly versatile | Slow (30-90 mins), expensive, can be claustrophobic, noisy |
| Ultrasound | High-frequency sound waves and their echoes to create real-time images | Obstetrics (fetal imaging), cardiology (echocardiograms), guiding procedures | Real-time imaging, no radiation, portable, inexpensive | Operator-dependent, sound waves blocked by bone and air, limited depth |
| PET | Radioactive tracers to visualize metabolic activity in cells | Cancer staging, assessing treatment response, brain disorders (e.g., Alzheimer's) | Shows biological function, not just anatomy; can detect disease very early | Uses radiation, expensive, low anatomical detail on its own |

Each of these modalities provides a vital piece of the diagnostic puzzle. By understanding what makes each one unique, we can better appreciate how clinicians put them to work to see inside the human body and make life-saving decisions.

How DICOM Standardizes Medical Imaging Workflows

Once the scanner captures the images, a fundamental problem emerges. How does a CT scan from a Siemens machine show up perfectly on a viewing station running software from a completely different company? The answer is DICOM (Digital Imaging and Communications in Medicine), the unsung hero that makes modern medical imaging possible. It’s the universal translator for nearly every medical image produced today.

I like to think of it like the standardized shipping container that changed global trade forever. Before containers, cargo was a chaotic mix of barrels, sacks, and crates of all sizes. The standard container meant any crane, truck, or ship could handle any cargo, creating a seamless global supply chain. That’s exactly what DICOM does for medical images, ensuring everything just works together.

Without this standard, hospitals would be trapped in a single vendor's ecosystem. They couldn't share vital patient scans with other facilities or upgrade equipment without causing massive compatibility headaches. DICOM prevents this by defining a universal file format and a common network language.

The DICOM File: A Digital Envelope

A DICOM file is far more than just a picture. Think of it as a smart, self-contained envelope that bundles the image pixels with a huge amount of critical metadata, called the DICOM header. This header is the file's digital DNA, carrying all the context needed to understand the image.

So, what's tucked inside this envelope?

- Patient Details: Name, ID, date of birth, and sex.

- Study Information: The type of scan (e.g., "CT Brain"), the exact date and time it was performed, and the referring physician.

- Equipment Data: The scanner's manufacturer and model, plus specific acquisition settings like slice thickness or radiation dose.

- Image Specifics: Details like image orientation, pixel spacing, and even suggested windowing settings for optimal viewing.

This bundling of image and data is non-negotiable for patient safety. It ensures a scan can never be separated from its context, preventing dangerous mix-ups and giving clinicians the full story at a glance.
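To see why the header matters for display, here is a minimal sketch of how a viewer might combine stored pixel values with header fields. The header is modelled as a plain Python dictionary rather than a real DICOM file. The key names mirror standard DICOM attributes (Rescale Slope, Rescale Intercept, Window Center, Window Width), while the values and the `to_display_gray` function are assumptions for illustration. The windowing shown is a simplified linear mapping, not the exact formula from the standard.

```python
# A stand-in for a DICOM header; real files hold many more attributes.
header = {
    "Modality": "CT",
    "RescaleSlope": 1.0,
    "RescaleIntercept": -1024.0,
    "WindowCenter": 40.0,    # suggested window for viewing (illustrative)
    "WindowWidth": 400.0,
}


def to_display_gray(stored_value: int, hdr: dict) -> int:
    """Map a stored pixel value to an 8-bit gray level using header fields."""
    # Step 1: convert the stored value to physical units (Hounsfield for CT).
    value = stored_value * hdr["RescaleSlope"] + hdr["RescaleIntercept"]
    # Step 2: clip to the suggested window and scale to 0..255.
    lower = hdr["WindowCenter"] - hdr["WindowWidth"] / 2
    upper = hdr["WindowCenter"] + hdr["WindowWidth"] / 2
    clipped = min(max(value, lower), upper)
    return round((clipped - lower) / (upper - lower) * 255)


for stored in (0, 1024, 3000):
    print(stored, "->", to_display_gray(stored, header))
```

The same stored numbers would look completely different with another window, which is why separating pixels from their header is risky.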

Tracing a Standard DICOM Workflow

The journey of an image through a hospital’s system is a beautifully logical process, built from the ground up for efficiency and data integrity.

- Acquisition: It all starts at the modality (the CT, MRI, or ultrasound machine). As soon as the scan is done, the machine packages the images and metadata into DICOM-compliant files.

- Transmission to PACS: The modality then sends these DICOM files over the hospital's secure network to a central repository. This is the Picture Archiving and Communication System (PACS), which acts as a massive, specialized library for all medical images.

- Archiving and Storage: The PACS securely stores the DICOM files, often for many years, so they can be retrieved for future reference. It indexes everything by patient name, ID, and study type, making it easy to find what you're looking for.

- Retrieval and Viewing: A radiologist or specialist queries the PACS from their viewing workstation. The PACS locates the requested files and sends them across the network. The viewing software then reads the DICOM header to display the images exactly as they were intended to be seen.

This entire workflow is designed so that, from the moment of creation to the final diagnosis, the image data stays intact and permanently linked to the correct patient and study. It is truly the backbone of modern digital radiology.
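The store-then-query pattern behind this workflow can be sketched with a toy in-memory archive. This is only an illustration: `ImageFile` and `ToyArchive` are hypothetical names, and a real PACS communicates over the network using DICOM services (such as C-STORE for sending and C-FIND for querying) rather than Python method calls.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ImageFile:
    """Stand-in for a DICOM file: a few header fields plus pixel data."""
    patient_id: str
    study_description: str
    modality: str
    pixels: bytes = b""


@dataclass
class ToyArchive:
    """In-memory stand-in for a PACS, indexed by patient ID."""
    _by_patient: Dict[str, List[ImageFile]] = field(default_factory=dict)

    def store(self, image: ImageFile) -> None:
        # Archiving: file the image under its patient ID.
        self._by_patient.setdefault(image.patient_id, []).append(image)

    def find(self, patient_id: str, modality: Optional[str] = None) -> List[ImageFile]:
        # Retrieval: look up by patient, optionally filtered by modality.
        matches = self._by_patient.get(patient_id, [])
        if modality is not None:
            matches = [m for m in matches if m.modality == modality]
        return list(matches)


archive = ToyArchive()
archive.store(ImageFile("P001", "CT Brain", "CT"))
archive.store(ImageFile("P001", "MR Knee", "MR"))
archive.store(ImageFile("P002", "CT Chest", "CT"))

for hit in archive.find("P001", modality="CT"):
    print(hit.patient_id, hit.study_description)
```

Because each file carries its own patient and study fields, the archive can index it without any outside bookkeeping.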

By standardizing this entire process, DICOM enables seamless collaboration, not just inside one hospital but between different healthcare organizations anywhere in the world. It’s the foundation for teleradiology, second opinions, and efficient patient transfers—all of which lead to better, faster care.

The Critical Role of Quality in Data Acquisition

In medical imaging, there's a timeless saying that rings especially true: "garbage in, garbage out." The entire diagnostic journey, from a radiologist's first look to the training of a sophisticated AI model, hinges on the quality of the initial image. A blurry, distorted, or incomplete scan isn't just a minor inconvenience—it can hide the very details needed to make a life-saving diagnosis.

Think of it like trying to tune a sensitive musical instrument before a big performance. If the instrument is off-key, even the most brilliant musician can't create a beautiful sound. It's the same with a medical scanner. If it isn't perfectly calibrated and operated, the resulting images will be flawed, no matter how advanced the analysis software is.

Minimizing Artifacts for a Clearer Picture

One of the biggest hurdles we face during image acquisition is dealing with artifacts. These are distortions or glitches in an image that aren’t actually part of the patient's anatomy. They're like static on a radio, obscuring the signal you're trying to hear.

Artifacts can pop up for all sorts of reasons, and a skilled technologist's job is to get ahead of them.

- Patient Movement: Something as simple as a patient taking a deep breath or a slight cough can blur the entire scan. Technologists are pros at using positioning aids and giving clear instructions to help patients stay perfectly still.

- Metallic Implants: Metal in the body—from surgical clips and dental fillings to joint replacements—can wreak havoc on CT and MRI scans, often creating bright streaks or dark patches that completely obscure the surrounding tissue.

- Machine-Specific Issues: Every imaging modality has its own quirks and common artifacts. Overcoming them requires specialized knowledge and techniques unique to that machine.

The goal is always to create an image that's a pure, faithful representation of what's inside the patient. Every artifact you eliminate is one less distraction, allowing for a much more confident and accurate diagnosis.

The Importance of Scanner Calibration and Protocols

Just as crucial as managing the patient is making sure the equipment is in top form. This is where scanner calibration and standardized protocols are non-negotiable. Calibration means regularly testing and tweaking the machine to make sure its performance is spot-on, guaranteeing that a scan taken today is directly comparable to one taken six months from now.

Standardized protocols act as the "recipe" for each scan. They tell the technologist exactly which settings to use, from the radiation dose in a CT to the specific pulse sequences in an MRI. This consistency is everything. It makes images comparable across different patients and hospitals, which is essential for large clinical trials and for building AI models that actually work reliably in the real world.
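One way to picture a protocol is as a table of allowed ranges that each scan's settings are checked against. The sketch below is hypothetical throughout: the setting names, the `CT_HEAD_PROTOCOL` ranges, and the `protocol_deviations` function are invented for illustration and are not clinical recommendations.

```python
from typing import Dict, List, Tuple

# Allowed (min, max) per setting. Values are placeholders, not clinical advice.
CT_HEAD_PROTOCOL: Dict[str, Tuple[float, float]] = {
    "slice_thickness_mm": (0.5, 1.25),
    "tube_voltage_kvp": (100.0, 120.0),
}


def protocol_deviations(
    settings: Dict[str, float],
    protocol: Dict[str, Tuple[float, float]],
) -> List[str]:
    """List every setting that is missing or outside the protocol's range."""
    problems = []
    for name, (low, high) in protocol.items():
        value = settings.get(name)
        if value is None:
            problems.append(f"{name}: missing")
        elif not low <= value <= high:
            problems.append(f"{name}: {value} outside [{low}, {high}]")
    return problems


scan_settings = {"slice_thickness_mm": 5.0, "tube_voltage_kvp": 120.0}
print(protocol_deviations(scan_settings, CT_HEAD_PROTOCOL))
```

A check like this can flag scans that drifted from the recipe before they are pooled into a trial or a training set.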

The demand for this level of quality is only growing. The global market for image processing systems, estimated at around USD 19.07 billion in 2024, is projected to reach about USD 49.48 billion by 2032. This isn't just a trend; it's a testament to how much value the medical field and other industries place on getting clear, usable data. You can learn more about the factors driving this market growth and what it means for the future of healthcare tech.

Ultimately, great image acquisition is the bedrock of modern medical analysis. It's a delicate dance between physics, technology, and human care, all working in concert to open the clearest possible window into the human body.

Preparing Acquired Images for AI Analysis

Getting a high-quality scan is the first, essential step, but it’s just the starting line. Raw medical images, fresh off the scanner, are absolutely not ready for an AI model. This is where a careful preparation pipeline comes in, turning that promising raw data into powerful training material.

Think of it like creating a curated, expert-level textbook for a machine. This isn't just about organizing files; it’s about meticulously refining the data to make it safe, understandable, and tough enough for AI training. Every step is designed to ensure the final AI tool is accurate, reliable, and ethically sound. Skip this work, and even the most sophisticated algorithms will produce garbage.

Anonymization: Protecting Patient Privacy

The absolute first move is anonymization. Medical images, especially in the DICOM format, are loaded with Protected Health Information (PHI). We're talking about patient names, birthdates, medical ID numbers—it's all tucked away inside the image's metadata header.

Leaving that information in place is a massive privacy violation and a surefire way to break regulations like HIPAA. So, the anonymization process systematically scrubs personal identifiers from the DICOM headers, creating a "de-identified" dataset. Identifiers can also be burned into the image pixels themselves (ultrasound frames are a common example), so those need checking too. Done thoroughly, this step disconnects the data from the individual, protecting patient confidentiality while keeping the valuable clinical imagery for research.
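The header-scrubbing idea can be sketched on a plain dictionary standing in for a DICOM header. This is a simplified illustration, not a compliant de-identification tool: the `PHI_FIELDS` list is a short, non-exhaustive assumption, the `deidentify` function and the study code are hypothetical, and a real pipeline would follow the DICOM standard's confidentiality profiles and inspect the pixels as well.

```python
from typing import Dict

# Illustrative subset of identifying attributes; real lists are much longer.
PHI_FIELDS = (
    "PatientName",
    "PatientID",
    "PatientBirthDate",
    "ReferringPhysicianName",
)


def deidentify(header: Dict[str, str], study_code: str) -> Dict[str, str]:
    """Return a copy of the header with identifiers removed or replaced."""
    cleaned = dict(header)          # never modify the original record
    for name in PHI_FIELDS:
        cleaned.pop(name, None)
    # Replace the real ID with a study-specific pseudonym so scans from the
    # same subject can still be grouped together.
    cleaned["PatientID"] = study_code
    return cleaned


original = {
    "PatientName": "DOE^JANE",
    "PatientID": "123456",
    "PatientBirthDate": "19800101",
    "Modality": "MR",
    "SliceThickness": "1.0",
}
print(deidentify(original, study_code="SUBJ-001"))
```

Clinical fields such as modality and slice thickness survive untouched, which is what keeps the scrubbed data useful for training.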

Annotation: Teaching the AI What to See

With the data secured, we move on to annotation. This is where the real work begins. It’s easily the most labor-intensive part of the whole process, where human experts quite literally teach the AI what it needs to see. Radiologists and other clinical specialists meticulously label the images, highlighting the exact features they want the model to learn.

It’s like giving the AI a guided tour of human anatomy. The experts might:

- Draw bounding boxes around suspected tumors.

- Outline the precise contours of an organ in a process called segmentation.

- Place markers on specific anatomical landmarks.

Each label becomes the "ground truth"—an undeniable example of what the AI needs to find on its own. The quality and consistency of these annotations directly shape how accurate the final AI model will be. A dataset with sloppy annotations will just teach the AI to make the same mistakes.

Annotation transforms a simple image into a structured learning example. It’s the process of translating a radiologist's expert eye into a language a machine can understand, pixel by pixel.
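Annotation consistency can be measured. One common overlap measure is the Dice coefficient, which compares two segmentation masks of the same image, for example from two different annotators. The sketch below is an illustrative choice on our part, not a method prescribed above, and it assumes masks are small grids of 0s and 1s.

```python
from typing import List

Mask = List[List[int]]  # 1 = pixel belongs to the structure, 0 = background


def dice_coefficient(mask_a: Mask, mask_b: Mask) -> float:
    """Overlap between two binary masks: 1.0 is identical, 0.0 is disjoint."""
    if len(mask_a) != len(mask_b) or any(
        len(ra) != len(rb) for ra, rb in zip(mask_a, mask_b)
    ):
        raise ValueError("masks must have the same shape")
    flat_a = [p for row in mask_a for p in row]
    flat_b = [p for row in mask_b for p in row]
    intersection = sum(1 for a, b in zip(flat_a, flat_b) if a and b)
    total = sum(1 for a in flat_a if a) + sum(1 for b in flat_b if b)
    if total == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2 * intersection / total


annotator_1 = [[0, 1, 1],
               [0, 1, 1],
               [0, 0, 0]]
annotator_2 = [[0, 0, 1],
               [0, 1, 1],
               [0, 0, 1]]
print(f"Dice overlap: {dice_coefficient(annotator_1, annotator_2):.2f}")
```

Low scores between annotators are a signal to tighten the labeling guidelines before the labels are used as ground truth.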

Data Augmentation: Building a More Robust AI

Even with thousands of perfectly annotated images, a dataset can still be too narrow. To get around this, we use a technique called data augmentation to artificially expand our training library. It’s a clever way to create slightly modified versions of existing images, exposing the AI to a much wider range of variations than what we started with.

For instance, we can take a single MRI scan and spin it into dozens of new training examples by:

- Rotating the image by a few degrees.

- Zooming in or out slightly.

- Flipping it horizontally.

- Altering the brightness or contrast.

This process helps the AI become more resilient and stops it from just "memorizing" the training data. A model trained on an augmented dataset is far better at applying what it learned to new, unseen images in a real clinic. This constant push for more accurate and automated analysis is a major driver of market growth, with a high demand for technologies that enable faster, more precise disease detection. You can learn more about the trends in image acquisition software adoption on datainsightsmarket.com.
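Two of the transformations listed above, flipping and brightness changes, can be sketched on a tiny grayscale image stored as nested lists. This is a minimal illustration with hypothetical function names. Rotation and zoom are omitted because they need interpolation, and the brightness range of plus or minus 20 is an arbitrary assumption.

```python
import random
from typing import List

Image = List[List[int]]  # grayscale values in the range 0..255


def flip_horizontal(image: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]


def adjust_brightness(image: Image, offset: int) -> Image:
    """Add an offset to every pixel, clamped to the valid 0..255 range."""
    return [[min(max(p + offset, 0), 255) for p in row] for row in image]


def augment(image: Image, rng: random.Random) -> Image:
    """Produce one randomly modified copy of the image."""
    out = image
    if rng.random() < 0.5:
        out = flip_horizontal(out)
    return adjust_brightness(out, rng.randint(-20, 20))


scan = [[10, 50, 90],
        [20, 60, 100]]
rng = random.Random(0)  # fixed seed so runs are repeatable
variants = [augment(scan, rng) for _ in range(3)]
for v in variants:
    print(v)
```

Two cautions apply in practice: any geometric change such as a flip must also be applied to the matching annotation mask, and flips should be skipped when left versus right carries diagnostic meaning.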

This whole pipeline—from acquisition to augmentation—is what turns raw scans into the high-octane fuel required for medical AI.

What’s Next for Medical Image Acquisition?

The world of medical imaging never sits still. It's constantly being pushed forward by fresh ideas and new tech. As we look to the horizon, the focus is clearly on making imaging faster, safer, smarter, and far more portable. These shifts aren't just incremental improvements; they're set to fundamentally change how we diagnose and treat patients.

A huge part of this evolution is what’s known as computational imaging. Think of it as a clever way to build a masterpiece from an incomplete sketch. This approach uses powerful algorithms to reconstruct high-fidelity images even when the raw data isn't perfect. For example, we can get a sharp, clean image from a very fast, low-dose CT scan by letting software fill in the gaps and filter out the "noise." This means less radiation for the patient and shorter scan times, all without losing diagnostic quality.

Bringing Diagnostics to the Patient

Another exciting shift is the move toward portable, point-of-care (POC) devices. The days of imaging being confined to a massive, stationary machine in a hospital basement are numbered. We're seeing the rise of incredibly capable handheld tools.

Take handheld ultrasound scanners, for instance. These devices can now plug directly into a smartphone or tablet, putting powerful imaging technology right into the hands of a paramedic in an ambulance, a doctor at a patient's bedside, or a clinician in a remote village. This trend is about getting critical information faster and making diagnostics accessible to everyone, everywhere.

The future of imaging isn't just about better pictures; it's about getting the right picture at the right time, wherever the patient may be. This shift focuses on speed, accessibility, and integrating imaging directly into the clinical workflow.

The Power of the Cloud and Fusing Data

The "back office" of imaging is getting a major upgrade, too. Cloud-based platforms are quickly becoming the new standard. They provide a secure, scalable way to store and share enormous imaging datasets, which is a game-changer for collaboration. A specialist in New York can instantly review a scan from a patient in rural Montana, bringing top-tier expertise to anyone who needs it.

Even more profound is the push toward multi-modal data integration. The goal is to stop looking at different scans and reports in silos. Instead, future systems will intelligently fuse a CT scan with an MRI, then layer in genomic data, lab results, and notes from the patient's electronic health record. This creates a rich, holistic view of an individual's health, laying the groundwork for truly predictive and personalized medicine.

This kind of integration is a major reason the image recognition market is booming. Valued at USD 53.11 billion in 2024, the global market is expected to explode to USD 184.55 billion by 2034, with healthcare leading the charge. You can discover more insights about the image recognition market on precedenceresearch.com. As these complex systems evolve, the expertise of generative AI UX design agencies becomes crucial in designing interfaces that allow clinicians to make sense of this fused data. It all points to a more connected and insightful future for medical diagnostics.

Your Questions About Image Acquisition, Answered

Getting to grips with medical imaging can feel like learning a new language. Let's clear up some of the most common questions that pop up when talking about acquiring these complex images.

What’s the Real Difference Between a CT Scan and an MRI?

The easiest way to remember the difference is to think about what each one sees best. CT scans are the experts on dense structures, while MRIs are unparalleled for soft tissues.

A CT scan is essentially a super-powered X-ray, creating a series of detailed cross-sectional "slices" of the body. This makes it fantastic for quickly identifying things like bone fractures, tumors, or internal bleeding. An MRI, on the other hand, uses a strong magnetic field and radio waves to get an incredibly detailed look at the brain, muscles, ligaments, and other soft tissues—all without using any ionizing radiation.

Why Do People Keep Talking About the DICOM Standard?

Think of DICOM as the universal language of medical imaging. It’s the reason a scan taken on a GE machine in New York can be flawlessly read on a Siemens workstation in Tokyo.

It’s basically the PDF of the medical imaging world. DICOM bundles the image itself with a treasure trove of critical patient data (like name, date, and study details) into a single, self-describing file. This helps prevent dangerous mix-ups and allows doctors and hospitals to share vital information without a hitch. Modern radiology simply couldn't function without it.

How Much Does Patient Movement Affect Image Quality?

A lot. Even the tiniest twitch or breath at the wrong moment can create blur and distortions, known as artifacts, that can completely obscure what a radiologist needs to see. This is a huge challenge in longer scans, like an MRI, which can take an hour or more.

To get a crystal-clear, diagnostically useful image, technologists use all sorts of tools—from foam positioning aids to clear, calm instructions—to help patients stay perfectly still for the entire scan.

Is the Radiation From Medical Imaging Something to Worry About?

It's a valid concern, and one that medical professionals take very seriously. For imaging that uses radiation, like CT and X-rays, the guiding principle is ALARA, which stands for As Low As Reasonably Achievable.

This means clinicians are trained to use the absolute minimum dose of radiation required to capture a high-quality image that provides the necessary diagnostic answers. While any radiation exposure carries some theoretical risk, the benefit of an accurate diagnosis almost always far outweighs the very small risk involved.

At PYCAD, our expertise lies in turning these high-quality medical images into robust AI-driven insights. We manage the entire pipeline, from data preparation to deploying sophisticated models, helping medical device companies sharpen their diagnostic edge. See how we can help advance your technology at https://pycad.co.