A 3D brain app is a powerful piece of software that takes flat, layered medical scans—like those from an MRI or CT—and stitches them together into a fully interactive, three-dimensional model of the brain. Think of it as moving from a paper road map to a live, explorable globe.

Exploring the Brain in a New Dimension

Trying to grasp the brain's staggering complexity from a 2D image is a real challenge. You're looking at a series of flat slices, and your mind has to do the heavy lifting of mentally stacking them to imagine the whole structure. It’s like trying to understand a complex engine by only looking at its blueprints, one page at a time.

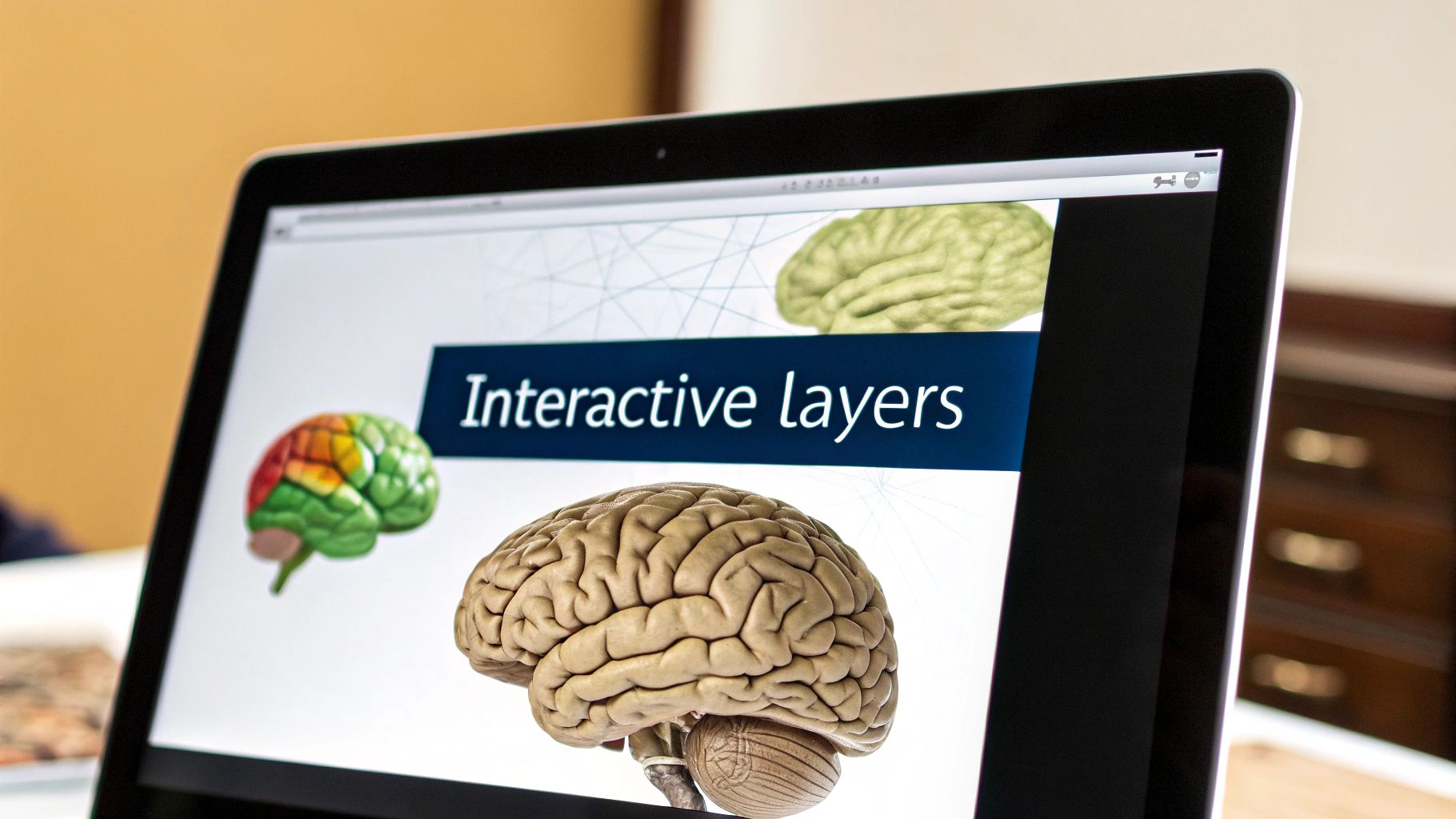

A 3D brain app completely changes the game. It’s not just a static picture; it’s a dynamic digital environment you can manipulate. You can zoom in on the smallest sulcus, rotate the entire organ with a flick of the wrist, and even slice it open virtually to see how deep structures connect.

A Deeper Way of Seeing

This leap from 2D to 3D isn't just a visual upgrade—it's a fundamental shift in how we interact with neurological data. The impact is felt immediately across different fields:

- For Students: Textbook diagrams suddenly come to life. Abstract concepts like neural pathways become tangible, traceable routes you can follow through the brain's landscape.

- For Clinicians: A neurosurgeon can "rehearse" a complex procedure on a perfect digital twin of their patient's brain, mapping out the safest path to a tumor and avoiding critical blood vessels.

- For Researchers: It becomes possible to overlay different types of data—like functional activity on top of anatomical structures—to see how form and function truly relate.

This technology transforms passive viewing into active exploration. It creates a shared visual language that bridges the gap between education, clinical practice, and pure research.

The appetite for these kinds of immersive tools is growing fast. A closely related field, the 3D printed brain model market, is a useful indicator: according to market.us, it was valued at USD 42.8 million in 2024 and is expected to climb to USD 227.8 million by 2034, a more than fivefold increase. This trend points to clear demand for tools that make the brain’s anatomy tangible and intuitive.

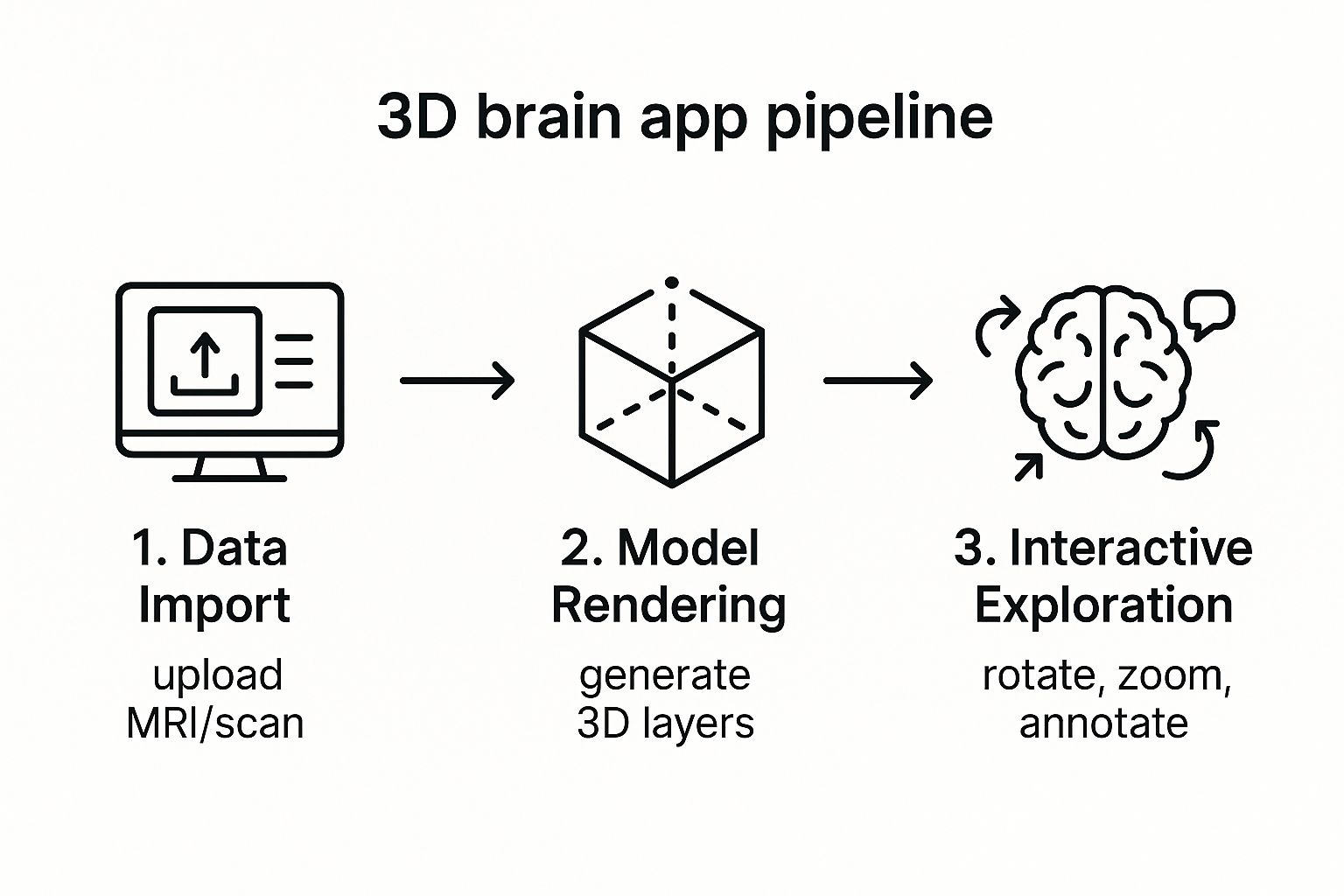

How a 3D Brain App Turns Flat Scans into Interactive Models

So, how does a 3D brain app work its magic? It’s a process that feels like digital alchemy, taking flat, grayscale medical scans and turning them into a vibrant, interactive model you can hold in your virtual hands. But it's not magic—it's a brilliant combination of advanced software techniques that build a complete picture from thousands of individual pieces.

The journey from raw data to a dynamic 3D model kicks off with a process called volumetric rendering.

Think about a massive stack of incredibly thin glass slides. Each slide holds a single cross-section from an MRI or CT scan. By itself, one slide gives you a very narrow view. But when you stack them all together, perfectly aligned, a complete, three-dimensional shape suddenly appears.

Volumetric rendering does this digitally. The software takes all those 2D scan "slices" and stacks them up, then calculates how light would interact with the entire volume. This is what creates a solid, explorable object from what was once just a collection of flat data points.
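To make the stacking idea concrete, here is a minimal sketch in Python with NumPy. It stacks a few synthetic slices into a volume and then renders a 2D image by front-to-back compositing, one simple way to approximate how light passes through a volume. The function names, the `opacity_scale` parameter, and the tiny 2x2 slices are assumptions made for illustration; real apps use far more elaborate, GPU-based renderers.

```python
import numpy as np

def stack_slices(slices):
    """Stack equally sized 2D slices into a volume of shape (depth, height, width)."""
    return np.stack(slices, axis=0)

def composite_front_to_back(volume, opacity_scale=0.5):
    """Render the volume to a 2D image by marching along the depth axis.

    Voxel intensities are assumed to lie in [0, 1]. Each voxel's intensity is
    used as its brightness and, scaled by opacity_scale, as its opacity.
    """
    image = np.zeros(volume.shape[1:], dtype=float)
    transmittance = np.ones(volume.shape[1:], dtype=float)  # light not yet absorbed
    for slab in volume:  # the first slice is the one closest to the viewer
        alpha = np.clip(slab * opacity_scale, 0.0, 1.0)
        image += transmittance * alpha * slab
        transmittance *= 1.0 - alpha
    return image

# Three tiny synthetic "slices" standing in for real scan data.
slices = [np.full((2, 2), value) for value in (0.2, 0.6, 1.0)]
volume = stack_slices(slices)
image = composite_front_to_back(volume)
print(volume.shape)  # (3, 2, 2)
```

Brighter voxels near the front both contribute more to the image and block more of what sits behind them, which is why the result reads as a solid object rather than a simple average of the slices.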

From a Ghostly Shape to a Detailed Map

Just stacking the images gives you a basic, translucent outline of the brain. To make it truly useful for a doctor or researcher, the app needs to know what it's looking at. This is where the next critical step, called segmentation, comes in.

Segmentation is basically the process of digitally coloring in the different parts of the brain on each individual slice. Imagine a sophisticated coloring book where you trace the hippocampus in blue, the cerebellum in green, and a tumor in red, layer by layer. Once a structure is "colored" across every slice, the app can pull it all together and display it as a distinct 3D object.
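The "coloring book" idea can be sketched in a few lines of Python. This example labels each slice with plain intensity thresholds and then restacks the labeled slices into a 3D label volume. The thresholds, label ids, and sample values are all hypothetical, and thresholding is only a crude stand-in for the segmentation methods real apps use.

```python
import numpy as np

# Hypothetical label ids, chosen for this sketch only.
BACKGROUND, TISSUE, BRIGHT_LESION = 0, 1, 2

def segment_slice(slice_2d, tissue_min=0.3, lesion_min=0.8):
    """Label one slice by intensity thresholds (a crude stand-in for real segmentation)."""
    labels = np.full(slice_2d.shape, BACKGROUND, dtype=np.uint8)
    labels[slice_2d >= tissue_min] = TISSUE
    labels[slice_2d >= lesion_min] = BRIGHT_LESION
    return labels

def segment_volume(volume):
    """Label every slice, then restack the results into a 3D label volume."""
    return np.stack([segment_slice(s) for s in volume], axis=0)

# Two synthetic 2x2 slices with intensities in [0, 1].
volume = np.array([
    [[0.1, 0.4], [0.5, 0.9]],
    [[0.0, 0.35], [0.85, 0.95]],
])
labels = segment_volume(volume)
lesion_mask = labels == BRIGHT_LESION  # one structure, now a distinct 3D object
print(int(lesion_mask.sum()))  # 3
```

Once a structure exists as its own mask, the app can render, hide, or measure it independently of everything else in the scan.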

Together, these two steps are how a 3D brain app transforms raw scan data into an interactive model you can spin around and explore, and that workflow is what unlocks a deeper level of understanding.

Not long ago, this was an incredibly tedious, manual job. A radiologist or neuroscientist would have to sit and meticulously trace these structures on every single slice, a task that could easily eat up hours of their time. Thankfully, this is where modern AI has completely changed the game.

Today, sophisticated AI algorithms can handle most of the heavy lifting. Trained on huge datasets of expertly annotated brain scans, these AI models can identify and outline different structures with incredible speed and impressive accuracy.
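One common way to put a number on "impressive accuracy" is to compare an automated outline with an expert's manual one using the Dice coefficient, an overlap score between 0 and 1. The sketch below is a minimal version in Python; the two small masks are made up for illustration, and the article does not say which metric any particular app uses.

```python
import numpy as np

def dice_coefficient(mask_a, mask_b):
    """Dice overlap: 2 * |A and B| / (|A| + |B|). A score of 1.0 means identical masks."""
    mask_a = np.asarray(mask_a, dtype=bool)
    mask_b = np.asarray(mask_b, dtype=bool)
    total = mask_a.sum() + mask_b.sum()
    if total == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2.0 * np.logical_and(mask_a, mask_b).sum() / total

# Hypothetical masks for one slice: an expert tracing and a model's output.
manual = np.array([[0, 1, 1], [0, 1, 0]])
automated = np.array([[0, 1, 1], [1, 1, 0]])
score = dice_coefficient(manual, automated)
print(round(float(score), 3))  # 0.857
```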

The Power of AI in Segmentation

AI-driven segmentation does more than just save a massive amount of time. It also brings a level of precision and consistency that’s tough for a human to maintain over hours of repetitive work. This automation is what makes it practical to use advanced 3D modeling in day-to-day clinical settings and for large-scale research.

These two technologies work together to create the final experience:

- Volumetric Rendering: This is the engine that builds the foundational 3D shape from all the 2D scan slices.

- AI-Powered Segmentation: This is the intelligence that identifies and separates the specific anatomical parts within that shape.

Together, they convert a static, grayscale dataset into a dynamic, color-coded map of the brain. Users can peel back layers, make certain areas transparent, or isolate a single structure to examine it from every angle. It provides a real grasp of the spatial relationships inside the brain—something a flat image could never do.
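As a rough illustration of the color-coded map and the "isolate a single structure" interaction, the sketch below turns a label volume into RGBA values with a lookup table and fades everything except one chosen structure. The label ids, colors, and the `ghost_opacity` parameter are assumptions for this example, not the scheme of any specific app.

```python
import numpy as np

# Hypothetical lookup: label id -> (R, G, B, opacity).
LABEL_STYLES = {
    0: (0.0, 0.0, 0.0, 0.0),  # background: invisible
    1: (0.0, 0.0, 1.0, 1.0),  # e.g. hippocampus in blue
    2: (0.0, 1.0, 0.0, 1.0),  # e.g. cerebellum in green
    3: (1.0, 0.0, 0.0, 1.0),  # e.g. tumor in red
}

def colorize(labels, styles, isolate=None, ghost_opacity=0.1):
    """Map a label volume to RGBA values.

    If `isolate` is given, every other non-background structure is faded
    to ghost_opacity so the chosen one stands out.
    """
    lut = np.zeros((max(styles) + 1, 4))
    for label, rgba in styles.items():
        lut[label] = rgba
    if isolate is not None:
        for label in styles:
            if label not in (0, isolate):
                lut[label, 3] = ghost_opacity
    return lut[labels]  # integer labels index straight into the lookup table

labels = np.array([[[0, 1], [2, 3]]])  # a tiny 1x2x2 label volume
rgba = colorize(labels, LABEL_STYLES, isolate=3)
print(rgba.shape)  # (1, 2, 2, 4)
```

Changing a single entry in the lookup table restyles that structure everywhere in the volume, which is what makes peeling layers and toggling transparency feel instant.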

Where Technology Meets Human Health: Neurosurgery and Patient Care

The real power of a 3D brain app isn't in the code—it’s in its impact on real people. It closes the gap between abstract medical data and human understanding, giving both clinicians and their patients a much clearer picture. This is where the technology stops being a cool concept and becomes a tool that can save lives.

Let's walk through a common scenario. A neurosurgeon is prepping for a tough operation to remove a tumor buried deep in a patient's brain. It’s right next to the delicate areas controlling speech and movement. With traditional 2D MRI scans, the surgeon has to mentally piece together a 3D puzzle of the tumor and its surroundings, a high-stakes task demanding incredible spatial awareness under immense pressure.

Now, imagine that same surgeon using a 3D brain app. They upload the patient's MRI data, and in just a few minutes, they’re looking at a fully interactive, detailed 3D model of that person’s unique brain.

Precision Planning for Safer Outcomes

With this "digital twin," the surgeon can spin the brain model around, peel back layers of healthy tissue to make them transparent, and see exactly where the tumor sits. They can map out the safest route to it, millimeter by millimeter, essentially rehearsing the entire procedure before ever stepping into the operating room.

This level of detailed planning offers huge advantages:

- Minimized Risk: Surgeons can clearly spot and navigate around critical blood vessels and functional brain areas, drastically cutting the risk of complications.

- Improved Accuracy: The 3D view removes much of the ambiguity about spatial relationships, which can support a more complete removal of the tumor.

- Increased Confidence: The entire surgical team, and even the patient, can head into the procedure with a much greater sense of clarity and confidence.

It's also a game-changer for communicating with patients. A surgeon can now sit down with a patient and their family and pull up the 3D model of their brain. They can point directly to the tumor, explain the surgical plan, and answer questions with a visual aid that makes infinitely more sense than a flat, gray MRI slice.

By making the invisible visible, a 3D brain app turns patients into active participants in their own care. It transforms a frightening, abstract diagnosis into something tangible and understandable.

Beyond the Operating Room

The applications for these apps don't stop at surgery. Think about a neurologist diagnosing a condition like multiple sclerosis. Being able to see lesions in their true three-dimensional context gives them a much clearer understanding of the disease's progression. It’s like the difference between seeing a few dots on a map and grasping the entire landscape they cover.

This is all part of a much larger movement in cognitive health tech. According to snsinsider.com, the global brain training app market was valued at USD 9.76 billion in 2025 and is expected to reach USD 39.37 billion by 2033. This digital approach is also making waves in education, with specialized digital therapy platforms for neurodiverse learners helping to provide tailored support.

For the next generation of doctors and nurses, these apps are fantastic educational tools. Medical students can finally explore neural pathways and anatomy in an interactive way that static textbook diagrams could never match. This kind of hands-on learning builds a deeper, more intuitive grasp of neuroanatomy, setting them up for success when they face the real-world complexities of their careers.

Driving Breakthroughs in Neuroscience Research

Beyond the operating room, the 3D brain app has become a fixture in the research lab. It's an essential tool in our quest to understand the most complex object in the known universe. For neuroscientists, these apps are like powerful microscopes, letting them dive deep into the brain’s tangled structures and dynamic activity in a way flat, static images never could.

This fundamental shift from 2D analysis to 3D exploration is uncovering fresh insights into how the brain works—and what happens when it doesn't.

Think about a team studying the early signs of Alzheimer's disease. They need to find tiny, almost imperceptible changes across hundreds of brain scans. With a 3D brain app, they can align those scans to a common space and overlay them, revealing patterns of atrophy in key areas like the hippocampus. They're no longer just guessing; they can quantify the differences, for example as changes in a structure's volume, and turn visual observations into hard, reliable data.
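Quantifying a difference like this often comes down to simple arithmetic on a segmentation mask: count the voxels in the structure and multiply by the physical size of one voxel. The sketch below assumes a hypothetical voxel spacing and two synthetic masks for the same structure at baseline and follow-up; none of these numbers come from a real study.

```python
import numpy as np

def structure_volume_mm3(mask, spacing_mm):
    """Volume of a binary mask: voxel count times the volume of one voxel."""
    voxel_volume = float(np.prod(spacing_mm))
    return int(np.count_nonzero(mask)) * voxel_volume

def percent_change(baseline, follow_up):
    """Relative change from baseline, in percent (negative means shrinkage)."""
    return 100.0 * (follow_up - baseline) / baseline

spacing = (1.0, 1.0, 1.2)  # assumed voxel size in mm along each axis

# Synthetic masks standing in for the same structure at two time points.
baseline_mask = np.zeros((10, 10, 10), dtype=bool)
baseline_mask[2:7, 2:7, 2:6] = True   # 5 * 5 * 4 = 100 voxels
follow_up_mask = np.zeros((10, 10, 10), dtype=bool)
follow_up_mask[2:7, 2:7, 2:5] = True  # 5 * 5 * 3 = 75 voxels

baseline_mm3 = structure_volume_mm3(baseline_mask, spacing)
follow_up_mm3 = structure_volume_mm3(follow_up_mask, spacing)
change = percent_change(baseline_mm3, follow_up_mm3)
print(round(baseline_mm3, 1), round(follow_up_mm3, 1), round(change, 1))
```

The comparison is only meaningful if both masks come from scans with known voxel spacing and a consistent segmentation method.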

Visualizing the Brain in Action

One of the biggest game-changers for researchers is visualizing functional data. When scientists run a functional magnetic resonance imaging (fMRI) study, they're recording changes in blood oxygenation that track brain activity over the course of a task. In the past, they had to sift through hundreds of 2D slices flickering with activity, a process that was informative but made it incredibly difficult to grasp the bigger picture.

Now, that fMRI data can be mapped directly onto a high-resolution 3D anatomical model. All at once, researchers can see exactly which neural networks fire up during a memory test or a decision-making task. They can watch the flow of activity as it moves through an interconnected system, helping to expose the functional wiring behind conditions like autism, epilepsy, and depression.
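A simple form of this mapping is a thresholded overlay: wherever the functional statistic exceeds a cutoff, a color is blended over the grayscale anatomy. The sketch below shows the idea for a single slice. It assumes the functional map has already been resampled onto the same grid as the anatomical image, and the `threshold`, `max_stat`, and `blend` values are arbitrary choices for illustration.

```python
import numpy as np

def overlay_activation(anatomy, activation, threshold=2.0, max_stat=6.0, blend=0.7):
    """Blend activation above `threshold` in red over a grayscale anatomical slice.

    anatomy: 2D array scaled to [0, 1]; activation: 2D statistic map on the same grid.
    Returns an RGB image of shape (height, width, 3).
    """
    gray = np.repeat(np.asarray(anatomy, dtype=float)[..., None], 3, axis=-1)
    stat = np.asarray(activation, dtype=float)
    # 0 at or below the threshold, rising linearly to 1 at max_stat.
    strength = np.clip((stat - threshold) / (max_stat - threshold), 0.0, 1.0)
    weight = (blend * strength)[..., None]
    red = np.array([1.0, 0.0, 0.0])
    return (1.0 - weight) * gray + weight * red

anatomy = np.full((2, 2), 0.5)
activation = np.array([[0.0, 2.0], [4.0, 6.0]])
rgb = overlay_activation(anatomy, activation)
print(rgb.shape)  # (2, 2, 3)
```

Voxels below the threshold keep their original gray value, so the anatomy stays readable while the active regions stand out.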

By transforming abstract datasets into interactive visual models, researchers can spot subtle patterns and form new hypotheses that would have otherwise remained hidden in spreadsheets and stacks of 2D scans.

Fostering Global Scientific Collaboration

This technology also completely flattens the world for scientific collaboration. A research team in Boston can share a complex, multi-layered 3D brain model with partners in Tokyo and Berlin. Everyone can interact with the exact same data simultaneously.

Imagine them on a video call, rotating the model in real time and pointing to specific fiber tracts or cortical regions. This shared visual context is critical for moving complex projects forward, building a more connected and effective scientific community.

To push the boundaries, researchers are pairing these visualization tools with other advanced technologies. Exploring the best AI tools for researchers shows how artificial intelligence—often baked right into these apps—is accelerating data analysis. This combination of powerful visualization and AI is what makes the 3D brain app a cornerstone of modern neuroscience.

How to Choose the Right 3D Brain App

Picking the right 3D brain app isn't as simple as just downloading the one with the best ratings. The perfect tool for a medical student trying to memorize the cranial nerves is worlds away from what a neurosurgeon needs to plan a delicate tumor resection. Your day-to-day work should be the single biggest factor in your decision.

Think about it this way: what problem are you trying to solve? Are you learning, diagnosing, or researching? Each of these goals demands a completely different set of tools and capabilities. Let's break down what truly matters for each type of user.

Features That Matter for Your Role

For students and educators, the main goal is understanding. A great 3D brain app for learning should be like an infinitely explorable, interactive textbook. Clarity, anatomical accuracy, and features that guide you through complex structures are paramount.

For clinicians, that same app becomes a vital part of patient care. It’s no longer just about learning; it’s about applying that knowledge to a real person. Precision, the ability to work with actual patient scans, and rock-solid security become the most important features.

Researchers, on the other hand, need a powerful engine for data visualization and analysis. Their ideal app must be flexible enough to handle massive, complex datasets and allow them to ask—and answer—new questions about the brain.

The best app isn't the one with the longest feature list. It's the one with the right features for what you do every day. A student would be lost in a sea of clinical tools, while a surgeon would find a basic anatomical atlas completely inadequate.

To make this crystal clear, let's look at a side-by-side comparison of what different professionals should be looking for.

Feature Comparison for Different User Profiles

This table highlights how the priority of features shifts dramatically depending on whether you're a student, a clinician, or a researcher.

| Feature | Importance for Students | Importance for Clinicians | Importance for Researchers |
|---|---|---|---|
| Anatomical Atlas | High: Essential for foundational learning and exploring standard brain structures. | Medium: Useful for reference, but patient-specific data is more critical. | Medium: A good baseline, but often needs to be augmented with custom data. |
| DICOM Integration | Low: Not necessary for general learning. Pre-loaded models are sufficient. | High: Absolutely critical. Must seamlessly import and render MRI/CT scans. | High: Essential for visualizing and analyzing data from study participants. |
| Measurement Tools | Low: Basic measurement might be helpful, but not a core need. | High: Non-negotiable for pre-surgical planning and tracking pathologies. | High: Crucial for quantitative analysis, like measuring lesion volumes. |
| Segmentation | Medium: Helps isolate structures, but pre-segmented models often work fine. | High: Vital for isolating tumors, lesions, or specific tissues from patient scans. | High: A core function for isolating regions of interest (ROIs) for analysis. |
| Collaboration | Medium: Good for sharing views with classmates or instructors. | High: Must be secure and HIPAA-compliant for sharing cases with colleagues. | High: Necessary for sharing datasets, models, and findings with collaborators. |
| Data Overlay | Low: Not a primary focus for introductory learning. | Medium: Useful for fusing different scan types (e.g., PET on MRI). | High: Foundational for mapping functional data (fMRI) or tracts (DTI) onto anatomy. |

As you can see, what’s a “nice-to-have” for one user is a “must-have” for another. Keeping your specific needs front and center is the key to making a good choice.
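For a sense of what a measurement tool does under the hood, the sketch below converts the distance between two picked voxels into millimeters by scaling each axis by the voxel size. The coordinates and spacing are made-up values, and the function name is hypothetical; a clinical tool would read the spacing from the scan's metadata.

```python
import math

def distance_mm(voxel_a, voxel_b, spacing_mm):
    """Euclidean distance between two voxel indices, scaled by per-axis voxel size."""
    return math.sqrt(
        sum(((a - b) * s) ** 2 for a, b, s in zip(voxel_a, voxel_b, spacing_mm))
    )

# Two hypothetical points picked on a scan with 0.5 x 0.5 x 2.0 mm voxels.
d = distance_mm((10, 20, 5), (13, 24, 5), (0.5, 0.5, 2.0))
print(d)  # 2.5
```

Ignoring the spacing and measuring in raw voxel counts would give 5 here instead of 2.5 mm, which is why precise tools always work in physical units.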

Asking the Right Questions Before You Commit

When you're looking at different apps, filter out the marketing noise by asking a few pointed questions based on your professional role:

- For Students: Does this app have a beautifully detailed and accurately labeled atlas? Can I peel back layers and isolate structures easily? Are there any built-in quizzes or guided tours to help me study?

- For Clinicians: How easily can I import a patient's DICOM files from our PACS? Are the measurement and annotation tools precise enough for surgical planning? Can I share a view securely with a colleague for a second opinion?

- For Researchers: Does it support the specific file types and imaging modalities I use (e.g., NIfTI, DTI, fMRI)? Can I overlay my functional data onto anatomical scans and perform volumetric analysis?

By zeroing in on these practical, role-specific needs, you can confidently choose a 3D brain app that will become an indispensable part of your workflow, whether you're just starting your journey into neuroscience or pushing the boundaries of what we know about the brain.

The Future of Brain Visualization Technology

Looking ahead, it's clear that 3D brain apps are poised to break free from the confines of 2D screens. The next big leap is into immersive environments that merge digital anatomy with our physical world, creating a powerful new toolkit for medicine and research.

Imagine a surgeon wearing AR glasses that overlay the patient's unique 3D brain model directly onto the patient's head during an operation. This isn't science fiction; it's the future of surgical precision, where digital maps guide the surgeon’s hands in real time.

This shift opens up some incredible possibilities:

- Augmented Surgery: Surgeons could see critical neural pathways or tumor boundaries highlighted in their field of view, helping them navigate delicate tissue with greater confidence.

- Virtual Training: Medical residents could enter virtual operating rooms to practice complex procedures on a variety of digital brains, honing their skills in a zero-risk environment.

- Mixed-Reality Consultations: Specialists from opposite sides of the globe could meet in a shared virtual space to examine and manipulate the same interactive 3D model, collaborating as if they were in the same room.

Think about the training implications. A resident in Omaha could practice a rare procedure on a digital model derived from a patient case in Tokyo, all through a VR headset. This not only builds technical skill but also exposes them to a global diversity of brain anatomies.

At the same time, the power of cloud computing is set to completely change how we handle the massive datasets involved in brain imaging. It removes the bottleneck of local hardware, allowing for seamless, real-time teamwork on an international scale.

This means:

- Instant collaboration on high-resolution scans between research institutions.

- Access to immense processing power on demand, without needing a supercomputer on-site.

- Secure, controlled sharing of sensitive data, accelerating global research efforts.

But the journey doesn't stop there. The true frontier is integrating live brain activity data directly into these dynamic 3D models.

This is about creating a living digital twin of the brain—a model that reflects not just its structure, but its function as it happens.

Live Brain Data Integration

Picture a 3D brain model on a screen, with data from an fMRI or EEG streaming into it. As a person thinks, solves a problem, or recalls a memory, you could watch the corresponding neuronal activity light up across different regions, layer by layer.

This would allow us to see exactly how a cognitive task affects blood flow or electrical signals in real time, offering an unprecedented window into the brain's inner workings.

This could lead to:

- Personalized Monitoring: Patients could get instant visual feedback on how their brain responds to therapies or cognitive exercises.

- Large-Scale Research: Scientists could analyze brain activity across entire populations to identify biomarkers for neurological disorders.

- Interactive Education: Students could see a brain model react to their own input, creating an incredibly engaging learning experience.

This concept of a "living digital twin" creates a powerful feedback loop between a patient's live data and their digital model. It stands to completely reshape how we diagnose disorders, test new treatments, and understand the very nature of consciousness.

As this vision takes shape, the market is already showing a massive appetite for interactive brain technology. The global brain training app market was valued at USD 9.65 billion in 2024, with 23% of that—around USD 2.22 billion—coming from the Asia Pacific region alone. You can explore more about these trends in market findings on cognitive research.

A New Era of Discovery

Ultimately, this is about more than just slicker software. It’s a fundamental step toward a future where we can interact with the brain in ways we've only dreamed of.

3D brain apps are becoming the foundation for the next generation of neuroscience and medicine. They are the tools that will inspire new discoveries, unlock the secrets of the mind, and, most importantly, save lives.

Your Top Questions About 3D Brain Apps

As you start digging into the world of neuro-visualization, a few practical questions almost always pop up. Getting clear on these points is key to understanding what a specific 3D brain app can—and can't—do for you.

Can I actually see my own brain scan in one of these apps?

This is probably the most common question I hear. The short answer is: maybe. It all comes down to whether the app can handle the DICOM (Digital Imaging and Communications in Medicine) file format. Think of DICOM as the universal language for medical scans.

If you're looking at a consumer app for general learning, it probably won't have this feature. But for any software built for clinicians or researchers, DICOM compatibility is a must-have, allowing you to load and view your own MRI or CT data.
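For the curious, here is a rough sketch of what "loading a DICOM series" involves once the files have been read: the slices have to be put in the right physical order before they can be stacked. The example uses stand-in objects with the same attribute names that the pydicom library exposes on datasets returned by `pydicom.dcmread` (`pixel_array` and `ImagePositionPatient`), so it runs without any real scan files. The `build_volume` helper and the assumption of an axial series ordered by z position are mine, not part of any specific app.

```python
from types import SimpleNamespace

import numpy as np

def build_volume(datasets):
    """Sort slices by position along the scan axis, then stack them.

    Each item needs `pixel_array` (a 2D array) and `ImagePositionPatient`
    (x, y, z in mm). Assumes an axial series, so z alone orders the slices.
    """
    ordered = sorted(datasets, key=lambda ds: float(ds.ImagePositionPatient[2]))
    return np.stack([np.asarray(ds.pixel_array) for ds in ordered], axis=0)

# Stand-ins for datasets that would normally come from pydicom.dcmread(path).
fake_series = [
    SimpleNamespace(pixel_array=np.full((2, 2), 30), ImagePositionPatient=[0.0, 0.0, 3.0]),
    SimpleNamespace(pixel_array=np.full((2, 2), 10), ImagePositionPatient=[0.0, 0.0, 1.0]),
    SimpleNamespace(pixel_array=np.full((2, 2), 20), ImagePositionPatient=[0.0, 0.0, 2.0]),
]
volume = build_volume(fake_series)
print(volume[:, 0, 0].tolist())  # [10, 20, 30]
```

Files in a folder are not guaranteed to be in anatomical order, so sorting by position rather than by file name is the safer habit.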

How accurate are these 3D models?

Another great question. When it comes to reliability, it really depends on the app's purpose.

Reputable apps that provide general anatomical atlases build their models from huge libraries of high-resolution scans and data that’s been validated by peer-reviewed research. For apps that create a model from your specific scan, the accuracy is limited by the original MRI or CT image and by how well its structures are segmented. A blurry or low-resolution scan will naturally result in a less precise 3D model.

It's crucial to understand the two main flavors these apps come in:

- Anatomical Atlases: These are idealized, "textbook" models of the brain. They're fantastic for students, educators, and anyone who needs to learn standard neuroanatomy.

- Patient-Specific Viewers: These are powerful, specialized tools designed to take an individual's scan data and turn it into a unique 3D model. This is what surgeons and radiologists use for diagnosis and planning.

The most important thing is to match the tool to the task. An educational atlas is perfect for studying, but for any kind of clinical work, you absolutely need a DICOM-compatible viewer. Knowing the difference from the start saves a lot of headaches.
