The Future of Healthcare Is Here

This list covers seven key applications of computer vision in healthcare and shows how the technology improves diagnostics, treatment, and patient care by streamlining processes and enhancing accuracy. The applications range from diagnostic medical imaging analysis to remote patient monitoring. It is aimed at anyone working in healthcare technology, medical devices, research, or IT who wants to understand the growing role of computer vision in medicine.

1. Diagnostic Medical Imaging Analysis

Diagnostic medical imaging analysis is revolutionizing healthcare through the application of computer vision. This cutting-edge technology utilizes sophisticated algorithms, particularly deep learning models, to analyze medical images such as X-rays, MRIs, CT scans, and ultrasounds. The goal is to detect abnormalities, assist in diagnosis, quantify disease progression, and ultimately improve patient outcomes. These systems excel at identifying subtle patterns that might be missed by the human eye, enhancing diagnostic accuracy and reducing interpretation time. This application of computer vision in healthcare represents a significant advancement in medical practice, offering the potential for earlier and more precise diagnoses.

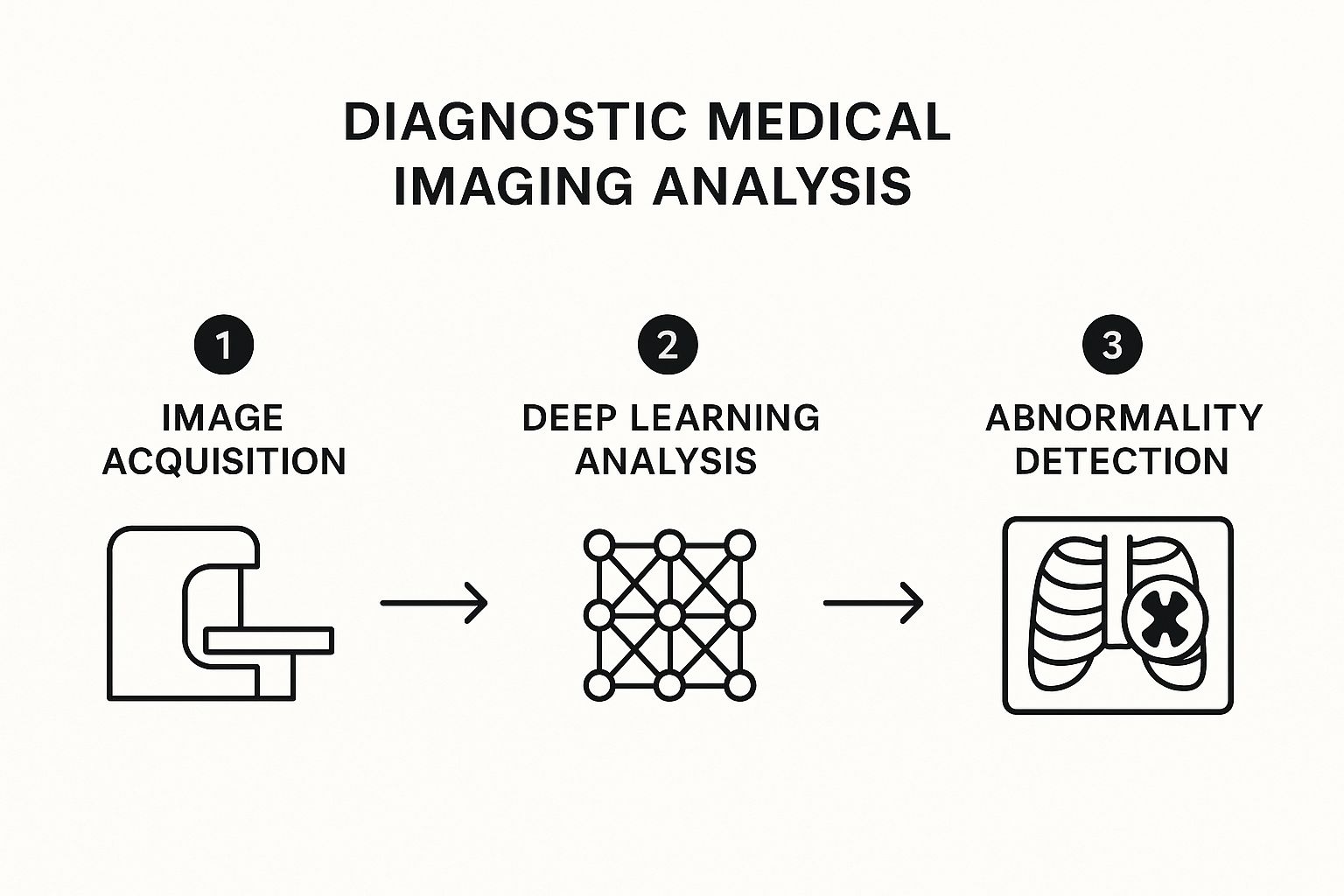

The infographic illustrates the process of AI-assisted diagnostic image analysis: image acquisition, preprocessing, AI-powered analysis, and generation of a report for radiologist review. Moving from image input to diagnostic output in a fixed sequence keeps the analysis systematic and shows where computer vision can speed up the diagnostic process.
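
The four stages described above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not a clinical implementation: the function names, the min-max normalization, and the fixed-threshold "detector" are all hypothetical stand-ins for a trained model and a real reporting system.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    row: int
    col: int
    score: float


def preprocess(image):
    """Min-max normalize pixel intensities to the range [0, 1]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1  # avoid division by zero on a constant image
    return [[(p - lo) / span for p in row] for row in image]


def analyze(image, threshold=0.9):
    """Placeholder for a trained model: flag pixels at or above a threshold."""
    return [Finding(r, c, p)
            for r, row in enumerate(image)
            for c, p in enumerate(row)
            if p >= threshold]


def build_report(findings):
    """Summarize findings for radiologist review; the AI does not decide."""
    return {
        "num_findings": len(findings),
        "max_score": max((f.score for f in findings), default=0.0),
        "status": "needs radiologist review" if findings else "no AI findings",
    }


# Stage 1 (acquisition) is represented by a toy 3x3 "scan".
scan = [[10, 12, 11],
        [13, 200, 12],
        [11, 12, 10]]
report = build_report(analyze(preprocess(scan)))
```

Keeping each stage as a separate function makes it easier to swap the placeholder detector for a real model without touching preprocessing or reporting.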

This powerful technology offers a range of features, including automated detection of anomalies in radiological images, segmentation of anatomical structures and regions of interest, quantitative measurements of disease markers, multimodal image fusion and analysis, and 3D reconstruction from 2D image slices. These capabilities enable more comprehensive and precise evaluations of medical images.

When and Why to Use Diagnostic Medical Imaging Analysis:

This approach is particularly valuable in situations requiring rapid and accurate image interpretation, high volumes of imaging data, or the detection of subtle anomalies. Examples include screening programs for diseases like breast cancer or lung cancer, emergency room triage for conditions like intracranial hemorrhage, and monitoring disease progression in chronic conditions.

Examples of Successful Implementation:

Several companies have successfully implemented computer vision for diagnostic medical imaging analysis. Google Health's AI for breast cancer screening demonstrated higher accuracy than radiologists in certain studies. Aidoc offers FDA-cleared algorithms for detecting intracranial hemorrhage in CT scans. Zebra Medical Vision uses AI to detect coronary calcium in CT scans. Arterys has developed a cardiac MRI analysis platform for measuring ventricular function. These examples highlight the real-world impact of computer vision in healthcare.

Pros and Cons:

Pros:

- Improved diagnostic accuracy, potentially exceeding human capabilities in specific tasks

- Reduced reading time for radiologists, increasing efficiency

- Standardized interpretation, reducing inter-observer variability

- Ability to handle large volumes of imaging data

- Early detection of subtle disease markers

Cons:

- Reliance on large, annotated datasets for training

- "Black box" nature of deep learning models can limit explainability

- Integration challenges with existing healthcare IT systems

- Regulatory hurdles for clinical deployment

- Potential for automation bias among clinicians

Actionable Tips:

- Implement AI as a decision support tool rather than a complete replacement for human expertise.

- Ensure diverse training data to avoid demographic biases.

- Validate algorithm performance across different imaging equipment vendors.

- Establish clear workflows for handling AI findings and discrepancies.

- Continuously monitor for drift in algorithm performance over time.
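
One way to act on the last tip is to compare the distribution of recent model scores against a baseline period. The sketch below uses the Population Stability Index (PSI), a general-purpose drift statistic that is swapped in here for illustration. The function names, the assumption that scores lie in [0, 1], the toy data, and the alert threshold are all hypothetical.

```python
import math


def histogram(scores, bins=10):
    """Fraction of scores in each equal-width bin over [0, 1]."""
    counts = [0] * bins
    for s in scores:
        idx = min(int(s * bins), bins - 1)  # put s == 1.0 in the last bin
        counts[idx] += 1
    total = len(scores)
    return [c / total for c in counts]


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index between two sets of model scores."""
    expected = histogram(baseline, bins)
    actual = histogram(current, bins)
    # eps keeps the logarithm finite when a bin is empty
    return sum((a - e) * math.log((a + eps) / (e + eps))
               for e, a in zip(expected, actual))


# Toy scores for illustration only, not real model output.
baseline_scores = [0.05, 0.10, 0.15, 0.20, 0.30, 0.80]
recent_scores = [0.40, 0.55, 0.60, 0.70, 0.85, 0.90]

drift = psi(baseline_scores, recent_scores)
needs_review = drift > 0.25  # hypothetical alert threshold; tune per site
```

A shift in score distribution does not prove that accuracy has dropped, so a flag like this is best treated as a prompt for a manual audit against confirmed diagnoses.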

Key Contributors and Companies:

Pioneers in this field include Enlitic (founded by Jeremy Howard), DeepMind Health (later absorbed into Google Health), Zebra Medical Vision, Imagen Technologies, and Dr. Andrew Ng's research group at Stanford. Their work has paved the way for wider adoption of computer vision in medical imaging.

Diagnostic medical imaging analysis using computer vision offers substantial benefits for patients, clinicians, and healthcare systems. By carefully considering the pros and cons and following best practices, healthcare providers can use this technology to enhance diagnostic accuracy, improve efficiency, and ultimately deliver better patient care. Its potential to affect diagnosis and treatment across a wide range of medical specialties makes it one of the most important applications of computer vision in healthcare.

2. Surgical Assistance and Planning

Computer vision is changing how surgical procedures are planned and performed, offering greater precision and control. Computer-vision-based surgical assistance and planning gives surgeons enhanced visualization, procedural guidance, and real-time analytics, with the aim of improving patient outcomes. The technology transforms preoperative imaging (CT scans, MRI, etc.) into detailed 3D models, allowing surgeons to plan procedures meticulously in a virtual environment. During surgery, augmented reality (AR) overlays project critical information, such as anatomical structures or tumor margins, directly onto the surgeon's field of view. Computer vision algorithms can also monitor surgical execution in real time, tracking instruments, analyzing motions, and even recognizing surgical phases to optimize workflow and support adherence to best practices. Together, these capabilities make surgery a central topic in any discussion of computer vision in healthcare.

How it Works:

Computer vision algorithms analyze medical images to create accurate 3D reconstructions of patient anatomy. This digital twin allows for virtual surgical simulations and pre-operative planning, including optimal incision placement, trajectory planning for minimally invasive procedures, and identification of critical structures to avoid. During the surgery itself, computer vision systems track surgical tools in real time, providing precise navigation and helping to minimize invasiveness. AR overlays enhance the surgeon's view by superimposing pre-operative plans, anatomical data, or real-time physiological information onto the surgical field. Automated surgical phase recognition algorithms can analyze the ongoing procedure, provide feedback, and document steps for training and quality assurance purposes.
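
At the core of an AR overlay is a projection step: a 3D point from the pre-operative model has to be mapped to a pixel in the camera image. The sketch below uses the standard pinhole camera model. It assumes the point is already expressed in camera coordinates, so registration between patient and camera is taken as given, and it ignores lens distortion. The function name and the intrinsic values are hypothetical.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point in camera coordinates to pixel coordinates.

    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


# Illustrative intrinsics for a 1280x720 camera (made-up values).
fx, fy, cx, cy = 1000.0, 1000.0, 640.0, 360.0

# A planned target 100 mm in front of the camera, offset 10 mm right, 5 mm up.
u, v = project_point((10.0, -5.0, 100.0), fx, fy, cx, cy)
```

In a real system the hard part is usually not this projection but keeping the patient-to-camera registration accurate as tissue moves and deforms.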

Features and Benefits:

- Real-time surgical tool tracking and motion analysis: Enables precise control and minimizes unintended movements.

- 3D reconstruction of patient anatomy for pre-operative planning: Facilitates detailed surgical planning and reduces intraoperative surprises.

- Augmented reality overlays during minimally invasive procedures: Enhances visualization and improves accuracy in confined surgical fields.

- Automated surgical phase recognition: Streamlines workflow, provides feedback, and supports training initiatives.

- Workflow optimization through procedural monitoring: Improves efficiency and reduces procedural time.

Examples of Successful Implementation:

- Da Vinci Surgical System's vision cart: Provides advanced visualization and robotic-assisted surgical capabilities.

- Microsoft HoloLens in neurosurgery: Enables AR overlays for pre-operative planning and intraoperative guidance.

- Surgical Theater's VR planning system: Offers immersive VR experiences for complex neurosurgical case planning.

- Proprio's surgical navigation platform: Utilizes computer vision for precise surgical navigation.

- Digital Surgery's AI system: Recognizes surgical steps in laparoscopic procedures for improved training and quality control.

- Medtronic's GI Genius endoscopy system: Employs AI-powered computer vision to detect polyps during colonoscopies.

Pros:

- Enhanced visualization beyond human visual capabilities

- Reduced surgical complications through better planning

- Improved training for surgical residents through recorded procedures

- More precise navigation during complex procedures

- Potential for semi-autonomous surgical actions in defined scenarios

Cons:

- High computational requirements for real-time processing

- Technical complexity requiring specialized training

- Risk of system failures during critical moments

- High initial implementation costs

- Potential for over-reliance on technology

Actionable Tips:

- Start with hybrid approaches that augment rather than replace surgical judgment.

- Ensure redundancy systems are in place for technology failures.

- Develop standardized protocols for equipment calibration.

- Incorporate simulation training with the technology before clinical use.

- Collect outcome data to validate performance improvements.

When and Why to Use this Approach:

Surgical assistance and planning using computer vision is particularly valuable in complex procedures, minimally invasive surgeries, and situations where enhanced precision is paramount. This technology can benefit a wide range of surgical specialties, including neurosurgery, cardiac surgery, orthopedics, and general surgery. The use of computer vision enhances surgical training, improves patient safety, and has the potential to push the boundaries of surgical possibilities.

Popularized By:

Intuitive Surgical (Da Vinci System), Medtronic's GI Genius endoscopy system, Verb Surgical (Johnson & Johnson and Verily collaboration), Theator's surgical intelligence platform, and Dr. Nicholas Ayache's pioneering work in computer-assisted surgery have all significantly contributed to the advancement and popularization of computer vision in surgical assistance and planning. This area holds immense promise for the future of surgery, making computer vision in healthcare a field of intense research and development.

3. Pathology Image Analysis

Pathology image analysis represents a significant advancement in computer vision in healthcare, using algorithms to analyze digitized pathology slides. This technology transforms the traditional, subjective microscopic examination of tissue samples into a quantitative, data-driven process. Trained on vast datasets of labeled images, computer vision models can detect cellular abnormalities, classify tissue types, quantify biomarkers, and assist pathologists in cancer diagnosis and grading. This approach can pick up patterns that are difficult to detect in manual microscopic review and supports computational pathology for more precise and efficient diagnoses.

This technology is particularly impactful due to its ability to analyze whole-slide images at multiple magnifications, effectively mimicking and enhancing the pathologist's workflow. Features such as automated detection and classification of cellular structures, quantification of histological features and biomarkers, cell counting, morphological assessment, and tumor microenvironment characterization are all made possible through this technology. This allows for a comprehensive, detailed analysis of each slide, unlocking a wealth of information that can contribute to more informed clinical decisions.
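
Whole-slide images are typically far too large to process in one pass, so analysis commonly works tile by tile at a chosen magnification level. The sketch below shows only the bookkeeping. The function names are hypothetical, and the fixed downsample factor of 2 per level is an assumption, since real slide formats define their own pyramid levels.

```python
def level_size(width, height, level, downsample=2):
    """Dimensions of a pyramid level, assuming a fixed downsample per level."""
    factor = downsample ** level
    return max(1, width // factor), max(1, height // factor)


def tile_grid(width, height, tile=512, overlap=0):
    """Yield (x, y, w, h) boxes that cover a width x height image."""
    step = tile - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than the tile size")
    for y in range(0, height, step):
        for x in range(0, width, step):
            # Edge tiles are clipped to the image boundary.
            yield x, y, min(tile, width - x), min(tile, height - y)


# A hypothetical 100,000 x 80,000 pixel slide viewed two levels down.
low_res = level_size(100_000, 80_000, level=2)

# Tiles for a small 1024 x 600 region.
tiles = list(tile_grid(1024, 600, tile=512))
```

A common pattern is to screen tiles at a low-resolution level first and only revisit suspicious regions at full magnification, which mirrors how a pathologist zooms in.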

Several companies are pioneering the application of pathology image analysis. Paige.AI, for example, developed a prostate cancer detection system that received the first FDA breakthrough designation for computational pathology. PathAI offers a platform for liver biopsy analysis in clinical trials, while Ibex Medical Analytics' Galen platform aids in cancer detection in routine pathology. Proscia's Concentriq platform streamlines digital pathology workflows, and Philips IntelliSite Pathology Solution supports primary diagnosis. These examples highlight the growing adoption and impact of this technology across diverse pathology subspecialties.

Why use Pathology Image Analysis?

This approach addresses several key challenges in traditional pathology. It reduces inter-observer variability, a common issue with subjective interpretations. It significantly increases throughput, enabling the rapid processing of large volumes of slides, crucial in high-volume settings and large-scale studies. Furthermore, it shifts from qualitative to quantitative assessment of histological features, providing more objective and measurable data for diagnosis and prognosis. Finally, its ability to detect rare cellular events could prove transformative in early disease detection and personalized medicine.
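
Inter-observer variability can itself be quantified, which is useful both for measuring the problem and for checking whether an algorithm agrees with pathologists about as well as they agree with each other. A standard statistic for two raters is Cohen's kappa, which corrects raw agreement for agreement expected by chance. The labels below are toy data for illustration only.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labeling the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: fraction of cases with identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


pathologist = ["benign", "benign", "malignant", "malignant"]
algorithm = ["benign", "malignant", "malignant", "malignant"]
kappa = cohens_kappa(pathologist, algorithm)
```

Here the raters agree on three of four cases (0.75), chance agreement is 0.5, and kappa comes out at 0.5.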

Pros:

- Reduced inter-observer variability in pathology interpretation

- Ability to process large volumes of slides quickly

- Quantitative rather than qualitative assessment of histological features

- Enhanced detection of rare cellular events

- Potential for integrating genomic data with image analysis

Cons:

- Digitization infrastructure requirements (slide scanners, storage)

- Color variation and staining inconsistencies affect algorithm performance

- Regulatory pathway still evolving for computational pathology tools

- Resistance to adoption among traditional pathology practices

- Challenge of validating against subjective gold standards

Tips for Implementation:

- Start with focused applications (e.g., mitotic counting) before full deployment.

- Standardize slide preparation and scanning protocols to minimize variability.

- Implement quality control procedures for digital slide images.

- Involve pathologists in algorithm development and validation for optimal integration.

- Consider cloud-based solutions for managing large image datasets.

Pathology image analysis deserves its place on this list because it fundamentally changes the practice of pathology. Its potential to improve diagnostic accuracy, efficiency, and patient outcomes makes it a critical application of computer vision in healthcare. This technology holds immense promise for the future of diagnostics and personalized medicine, offering valuable insights for medical device manufacturers, healthcare technology companies, researchers, and academic institutions alike. By embracing this evolving technology, the field can move towards more precise, data-driven, and ultimately more effective patient care.

4. Remote Patient Monitoring

Remote patient monitoring (RPM) represents a transformative application of computer vision in healthcare, enabling continuous assessment of patients' physical state, activities, and even vital signs without relying on wearable sensors. This technology leverages video feeds to analyze subtle visual cues, extending the reach of healthcare beyond traditional settings and into homes and care facilities. This powerful capability makes it a crucial element in the evolution of modern healthcare, deserving its place on this list.

How it Works: RPM systems utilize computer vision algorithms to analyze video data captured by cameras placed strategically in the patient's environment. These algorithms can be trained to detect a wide range of events and physiological signals, including falls, changes in gait, medication adherence, activity levels, and even subtle changes in skin color related to heart rate and respiration. The extracted information can then be transmitted to healthcare providers, enabling timely interventions and personalized care.
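
The skin-color signal mentioned above can be illustrated with a simplified version of remote photoplethysmography. The sketch assumes an earlier step has already located a skin region and reduced each video frame to the mean green-channel value of that region. It then picks the strongest frequency in a plausible heart-rate band. The function name, the band limits, and the synthetic signal are assumptions, and real systems add detrending, filtering, and motion compensation.

```python
import math


def dominant_frequency_bpm(signal, fps, lo_bpm=42, hi_bpm=240):
    """Estimate pulse rate as the strongest frequency within a plausible band."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # remove the constant component
    best_bpm, best_power = None, -1.0
    for bpm in range(lo_bpm, hi_bpm + 1):
        freq = bpm / 60.0  # beats per minute -> Hz
        w = 2 * math.pi * freq / fps
        # Correlate the signal with a cosine and a sine at this frequency.
        re = sum(c * math.cos(w * i) for i, c in enumerate(centered))
        im = sum(c * math.sin(w * i) for i, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Synthetic 10-second signal: a small 72 bpm oscillation on a constant level.
fps = 30
true_bpm = 72
green_means = [100 + 0.5 * math.sin(2 * math.pi * (true_bpm / 60) * i / fps)
               for i in range(fps * 10)]
estimated_bpm = dominant_frequency_bpm(green_means, fps)
```

On this clean synthetic input the estimate matches the 72 bpm used to generate it. Real video is much noisier, which is one reason accuracy lags behind contact sensors.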

Features and Benefits:

- Fall detection and prevention systems: Automatically identify falls and trigger alerts for immediate assistance, reducing the risk of serious injuries.

- Gait analysis and mobility assessment: Track changes in gait and mobility patterns, providing insights into functional decline or recovery progress after an injury or surgery.

- Medication adherence monitoring: Verify medication intake visually, improving adherence rates and treatment outcomes.

- Activity recognition for independent living: Monitor daily activities to assess the level of independence and identify potential safety risks.

- Non-contact vital sign monitoring (breathing rate, heart rate): Measure vital signs remotely without physical contact, offering a less intrusive and more comfortable alternative to traditional methods.

- Behavioral analysis for early detection of neurological conditions: Detect subtle behavioral changes that might indicate early signs of neurological conditions like dementia or Parkinson's disease.

Pros:

- Continuous monitoring without wearable devices or patient compliance requirements: Eliminates the burden and discomfort of wearables and addresses compliance challenges.

- Early intervention for deteriorating conditions: Enables proactive interventions, preventing hospitalizations and improving patient outcomes.

- Enhanced safety for elderly or vulnerable patients: Provides an added layer of safety and security, particularly for those living alone.

- Reduced need for in-person check-ups: Decreases the frequency of in-person visits, saving time and resources for both patients and healthcare providers.

- Privacy-preserving alternatives to constant human supervision: When designed with safeguards such as on-device processing, can be less intrusive than in-person observation, helping to preserve patient privacy and dignity.

- Quantitative assessment of functional abilities: Provides objective data for tracking progress and evaluating treatment effectiveness.

Cons:

- Privacy concerns with constant video monitoring: Raises ethical considerations regarding patient privacy and data security.

- Variable lighting and environmental conditions affecting performance: System accuracy can be impacted by changes in lighting, shadows, and background clutter.

- Network reliability requirements for continuous monitoring: Requires reliable network connectivity for uninterrupted data transmission.

- Power requirements for edge computing devices: On-site processing devices require a stable power supply.

- Limited accuracy compared to medical-grade monitoring devices: While promising, current RPM systems might not match the accuracy of clinical-grade monitoring equipment.

Examples of Successful Implementation:

- VirtuSense: Provides AI-powered fall prevention solutions for nursing homes.

- Caspar.AI: Offers smart home monitoring solutions for senior living.

- Kepler Vision: Develops Night Nurse, a system for nighttime patient monitoring.

- Current Health: Combines wearables with visual monitoring for a comprehensive approach to RPM.

- Oxehealth: Offers the Digital Care Assistant for mental health facilities.

Tips for Implementation:

- Implement privacy-by-design principles: Utilize on-device processing, silhouette-only visualization, and other privacy-enhancing techniques.

- Establish clear consent protocols and data management policies: Obtain informed consent from patients and ensure compliance with data privacy regulations.

- Deploy edge computing to reduce bandwidth requirements: Process data locally to minimize bandwidth consumption and latency.

- Start with high-risk use cases like fall detection before expanding: Begin with applications that offer the greatest potential for immediate impact.

- Create clear escalation protocols for detected events: Define clear procedures for responding to alerts and escalating cases to healthcare professionals.

When and Why to Use RPM: RPM is particularly valuable for managing chronic conditions, supporting post-acute care, and enhancing the safety and independence of elderly or vulnerable individuals. It allows for proactive intervention, personalized care, and more efficient resource allocation in a variety of healthcare settings. Computer vision in healthcare, specifically within the realm of RPM, offers significant potential to improve patient outcomes and transform the delivery of care.

5. Dermatological Assessment

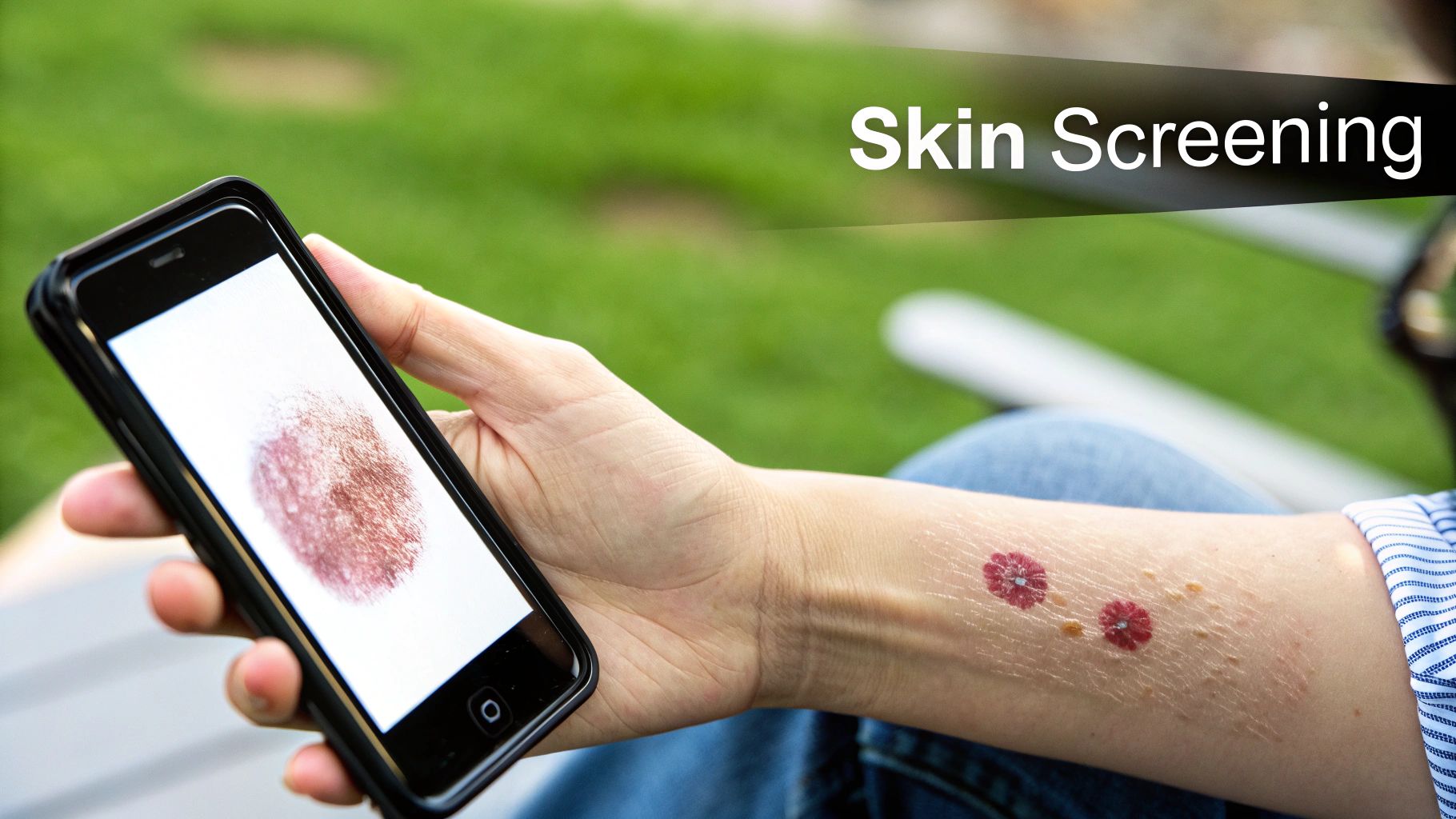

Computer vision is revolutionizing healthcare, and dermatological assessment is a prime example of its transformative potential. This application leverages computer vision systems to analyze images of skin conditions, enabling the detection, classification, and monitoring of various dermatological diseases. This includes everything from common issues like eczema and psoriasis to potentially life-threatening conditions like melanoma and other skin cancers. These platforms often utilize deep learning algorithms, trained on vast datasets of skin images, to identify subtle visual patterns in skin lesions and rashes that might be missed by the human eye. This technology allows for earlier detection of malignancies, more consistent assessment of disease progression, and expanded access to dermatological expertise, particularly through telemedicine platforms.

Computer vision in healthcare, specifically within dermatology, offers a powerful suite of features. These include melanoma and other skin cancer detection from standard photographs, classification of common skin conditions, precise measurement of lesion characteristics over time (size, shape, color), skin health monitoring via smartphone apps, 3D surface analysis for wound assessment, and even vascular pattern recognition for underlying conditions. For example, a patient could use a smartphone app to track a suspicious mole over time, providing valuable data for dermatologists to assess potential changes indicative of malignancy.
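
Tracking a lesion over time comes down to comparing measurements taken from images at different dates. The sketch below assumes that a segmentation step has already produced a binary mask of the lesion (1 for lesion pixels) and that the image scale in millimeters per pixel is known, for example from a ruler or calibrated attachment. Both assumptions, the function names, and the toy masks are hypothetical.

```python
def lesion_area_mm2(mask, mm_per_pixel):
    """Area of a lesion from a binary mask and a known image scale."""
    pixels = sum(sum(row) for row in mask)
    return pixels * mm_per_pixel ** 2


def relative_growth(area_before, area_after):
    """Fractional change in area between two visits."""
    if area_before <= 0:
        raise ValueError("baseline area must be positive")
    return (area_after - area_before) / area_before


# Toy masks from two visits (1 = lesion pixel).
visit_1 = [[0, 1, 1, 0],
           [0, 1, 1, 0],
           [0, 0, 0, 0]]
visit_2 = [[0, 1, 1, 0],
           [0, 1, 1, 0],
           [0, 1, 1, 0]]

area_1 = lesion_area_mm2(visit_1, mm_per_pixel=0.5)
area_2 = lesion_area_mm2(visit_2, mm_per_pixel=0.5)
growth = relative_growth(area_1, area_2)
```

Without a reliable scale, area comparisons across photos taken at different distances are not meaningful, which is part of why standardized photography matters.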

The benefits of this technology are numerous. It democratizes access to skin assessment, particularly in underserved areas with limited access to specialists. It also provides objective measurement of lesion evolution, enabling more accurate tracking of disease progression and response to treatment. Furthermore, computer vision aids in the earlier detection of malignant transformations, increasing the chances of successful treatment and survival. In busy clinical settings, these systems offer triage capabilities, prioritizing urgent cases and optimizing resource allocation. Finally, they can reduce the need for unnecessary biopsies, minimizing patient discomfort and healthcare costs.

However, there are also limitations to consider. Variable image quality from consumer cameras, along with lighting and angle variations, can reduce diagnostic accuracy. More critically, many current systems demonstrate limited accuracy for darker skin tones due to biases in training data. Distinguishing visually similar conditions can also be challenging for AI. Computer vision cannot replace histopathological confirmation for cancer diagnoses; it supports initial assessment and risk stratification and is not a definitive diagnostic method.

Examples of computer vision in dermatological assessment include the SkinVision app for consumer melanoma risk assessment, Google's dermatology assist tool (currently a research project), VisualDx's clinical decision support platform for dermatological conditions, DermEngine's platform designed for dermatologists, and Miiskin's app for monitoring skin changes over time. These tools exemplify the diverse applications of computer vision in this field.

For developers and practitioners implementing computer vision in dermatology, several tips can improve accuracy and effectiveness. Implementing standardized photography protocols ensures consistent imaging, minimizing variations that can confound AI algorithms. Using dermatoscopic attachments for smartphone cameras, when possible, significantly enhances image quality. Critically, ensuring that training data includes diverse skin types and ethnicities is paramount to addressing existing biases and improving accuracy across all populations. Combining visual assessment with patient history further improves diagnostic accuracy. Finally, maintaining a clear biopsy pathway for high-risk lesions, regardless of the AI assessment, is crucial for definitive diagnosis and appropriate management.
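
A simple way to check for the bias discussed above is to report sensitivity separately for each skin-type group instead of a single overall figure. The sketch below does this for a list of labeled predictions. The grouping by Fitzpatrick skin type ranges is an assumption about how the data is annotated, and the records are toy values, not results from any real system.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Per-group sensitivity from (group, y_true, y_pred), with 1 = malignant."""
    tp = defaultdict(int)  # malignant cases the model flagged
    fn = defaultdict(int)  # malignant cases the model missed
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    groups = set(tp) | set(fn)
    return {g: tp[g] / (tp[g] + fn[g]) for g in groups}


# Toy records for illustration only.
records = [
    ("I-II", 1, 1),
    ("I-II", 1, 1),
    ("I-II", 0, 0),
    ("V-VI", 1, 1),
    ("V-VI", 1, 0),
]
result = sensitivity_by_group(records)
```

A large gap between groups, as in this toy data, points to a need for more representative training and validation images before deployment.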

Pioneers in this field include Dr. Allan Halpern at Memorial Sloan Kettering for his early work in digital dermatology, DermaSensor with their spectroscopy-enhanced visual detection technology, MetaOptima (creators of MoleScope and DermEngine), SkinIO's full-body photography platform, and ongoing research at Stanford University on deep learning for skin cancer detection. These individuals and organizations are driving innovation and shaping the future of computer vision in dermatological assessment.

6. Endoscopy and Colonoscopy Assistance

Computer vision is revolutionizing gastrointestinal procedures like endoscopy and colonoscopy, offering a powerful tool for enhanced diagnostics and treatment. This technology empowers medical professionals to improve the accuracy and efficiency of these examinations, ultimately leading to better patient outcomes. This application of computer vision in healthcare significantly improves the detection and characterization of various gastrointestinal abnormalities.

How it Works: Computer vision algorithms are trained on vast datasets of endoscopic images and videos, learning to recognize patterns associated with polyps, lesions, bleeding, and other abnormal tissues. During real-time procedures, the system analyzes the incoming video feed from the endoscope, highlighting suspicious areas on a secondary monitor. This acts as a "second observer," alerting the physician to potentially problematic areas they might otherwise overlook. Furthermore, these systems can automatically classify the detected abnormalities (e.g., polyp type, degree of dysplasia), providing valuable diagnostic information. They also facilitate standardized reporting and documentation, enhancing quality control and communication amongst healthcare providers.
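
Per-frame detections on live video tend to flicker, and every spurious highlight distracts the endoscopist. One simple mitigation, shown here as an assumption and not as a description of any particular product, is to raise an alert only after a detection has persisted for several consecutive frames. The function name and the frame count are hypothetical.

```python
def persistent_alerts(frame_flags, min_consecutive=3):
    """Per-frame alerts, raised only after min_consecutive positive frames."""
    alerts = []
    run = 0  # length of the current streak of positive frames
    for flagged in frame_flags:
        run = run + 1 if flagged else 0
        alerts.append(run >= min_consecutive)
    return alerts


# Raw per-frame detector output: one isolated hit, then a sustained detection.
raw = [1, 0, 1, 1, 1, 1, 0]
alerts = persistent_alerts(raw)
```

The isolated hit in the first frame is suppressed, while the sustained detection triggers an alert from its third consecutive frame. The cost is a short delay before a true polyp is highlighted.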

Features and Benefits: Computer vision-assisted endoscopy boasts numerous features designed to improve diagnostic accuracy and procedural efficiency. These include:

- Real-time polyp detection: Algorithms pinpoint polyps during colonoscopy, improving adenoma detection rates (ADR), a crucial quality metric.

- Automated lesion classification: The system can categorize identified lesions, helping guide treatment decisions.

- Quality metrics for colonoscopy completeness: Provides feedback on examination thoroughness.

- Bleeding detection in capsule endoscopy: Automates the tedious review of capsule endoscopy images, which can substantially shorten review time.

- Barrett's esophagus and dysplasia recognition: Aids in the early detection and management of these precancerous conditions.

- Standardized reporting with visual documentation: Creates consistent, detailed reports with supporting images for quality assurance and efficient communication.
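
The adenoma detection rate mentioned in the first feature is straightforward to compute: it is the fraction of screening colonoscopies in which at least one adenoma was found. The sketch below takes one adenoma count per procedure. The function name and the sample counts are illustrative.

```python
def adenoma_detection_rate(adenoma_counts):
    """Fraction of screening colonoscopies with at least one adenoma found."""
    if not adenoma_counts:
        raise ValueError("at least one procedure is required")
    with_adenoma = sum(1 for n in adenoma_counts if n > 0)
    return with_adenoma / len(adenoma_counts)


# Toy data: adenomas found in each of eight procedures.
counts = [0, 2, 0, 1, 0, 0, 3, 0]
adr = adenoma_detection_rate(counts)
```

Because the metric counts procedures and not polyps, finding three adenomas in one patient raises it no more than finding one.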

Pros:

- Increased adenoma detection rates: Leading to earlier detection and prevention of colorectal cancer.

- Reduced variability in detection rates between practitioners: Standardizing quality of care across different experience levels.

- Faster review of capsule endoscopy images: Significant time savings, leading to quicker diagnosis and treatment.

- Objective documentation of findings for quality assurance: Improves record-keeping and facilitates audits.

- Enhanced training for new endoscopists: Provides a valuable educational tool for trainees, fostering skill development and improving diagnostic accuracy.

Cons:

- Potential for false positives interrupting workflow: Can lead to unnecessary biopsies or interventions.

- Computational demands for real-time processing: Requires powerful hardware and can sometimes introduce latency.

- Challenges with unusual anatomical variations: The algorithms may struggle with unusual or complex anatomy.

- Limited field of view in some endoscopic procedures: May miss abnormalities outside the camera's immediate view.

- Integration complexity with existing endoscopy equipment: Can require significant investment and technical expertise for seamless integration.

Examples of Successful Implementation:

- Medtronic's GI Genius™ module for colonoscopy (FDA-cleared): A widely adopted AI-assisted colonoscopy system.

- Olympus' ENDO-AID CAD platform for polyp detection: Offers real-time polyp identification and characterization.

- Fujifilm's CAD EYE system for colorectal polyp detection: Assists in detecting and characterizing polyps during colonoscopy.

- Docbot's Ultivision AI for automated polyp detection: Provides real-time polyp detection during endoscopic procedures.

- Chinese PLA General Hospital's system for early gastric cancer detection: Demonstrates the application of computer vision in detecting early signs of gastric cancer.

Tips for Implementation and Use:

- Install on secondary monitors to avoid disrupting primary view: Ensures the AI assists without interfering with the endoscopist's primary focus.

- Establish protocols for addressing AI-detected regions of interest: Standardizes how the medical team responds to AI alerts.

- Ensure AI sensitivity settings match institutional preferences: Allows customization based on specific clinical needs and risk tolerance.

- Use as educational tool by reviewing detection patterns post-procedure: Facilitates continuous learning and improvement in diagnostic skills.

- Maintain procedural thoroughness regardless of AI assistance: Emphasizes the importance of the endoscopist's expertise even with AI support.

Why it Deserves its Place in the List: Computer vision-assisted endoscopy and colonoscopy represent a major advancement in computer vision in healthcare. By addressing critical limitations of traditional procedures, such as human error and variability in detection rates, this technology empowers clinicians to provide higher-quality, more consistent care, leading to earlier diagnosis and improved patient outcomes in gastrointestinal diseases. This technology is transformative, contributing directly to improved cancer prevention and treatment. It demonstrates the practical and life-saving potential of computer vision in the healthcare setting.

7. Ophthalmology and Retinal Imaging

Computer vision is revolutionizing healthcare, and ophthalmology is at the forefront of this transformation. Retinal imaging, coupled with sophisticated computer vision algorithms, offers a powerful tool for detecting and managing a range of eye diseases. This non-invasive approach analyzes images from fundus photography and optical coherence tomography (OCT) to identify subtle indicators often missed by the human eye, allowing for earlier and more precise interventions. This application of computer vision in healthcare significantly improves the efficiency and accuracy of diagnosis, monitoring, and treatment decisions in ophthalmology.

How it Works:

Computer vision algorithms are trained on vast datasets of labeled retinal images, learning to recognize patterns indicative of various pathologies. These algorithms can then analyze new images, identifying features such as microaneurysms, hemorrhages, drusen, and changes in retinal layer thickness. This analysis provides objective and quantifiable data that aids clinicians in diagnosis, disease grading, and treatment planning.

Features and Benefits:

This technology boasts several key features, including:

- Diabetic retinopathy grading from retinal photographs: Automating the grading process reduces the burden on specialists and enables wider screening.

- OCT image analysis for macular degeneration: Provides detailed cross-sectional views of the retina, enabling precise measurement of retinal layers and identification of abnormalities.

- Glaucoma detection through optic disc evaluation: Analyzes optic disc parameters for signs of glaucoma, assisting in early diagnosis and monitoring.

- Retinal vessel analysis for systemic disease markers: Retinal vessels can offer insights into systemic health, and computer vision can identify subtle changes indicative of cardiovascular disease and other conditions.

- Quantification of retinal layer thickness changes: Precise measurements allow for objective monitoring of disease progression and response to treatment.

- Automated visual field interpretation: Simplifies the analysis of visual field tests, improving efficiency and reducing variability.
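As an illustration of optic disc evaluation, the sketch below computes a vertical cup-to-disc ratio from two binary segmentation masks. This ratio is one commonly used optic disc parameter, but the source does not say which parameters any given product measures. The masks are assumed to come from an upstream segmentation model, and the helper names and toy masks are hypothetical. No clinical threshold is implied.

```python
def vertical_extent(mask):
    """Number of rows spanned by the mask, from first to last non-empty row."""
    rows = [i for i, row in enumerate(mask) if any(row)]
    return 0 if not rows else rows[-1] - rows[0] + 1


def vertical_cup_to_disc_ratio(disc_mask, cup_mask):
    """Ratio of the cup's vertical extent to the disc's vertical extent."""
    disc_height = vertical_extent(disc_mask)
    if disc_height == 0:
        raise ValueError("optic disc mask is empty")
    return vertical_extent(cup_mask) / disc_height


def toy_mask(size, first_row, last_row):
    """Square mask with a filled band of rows (illustration only)."""
    return [
        [1 if first_row <= r <= last_row and 2 <= c <= size - 3 else 0
         for c in range(size)]
        for r in range(size)
    ]


disc = toy_mask(10, 1, 8)  # disc spans 8 rows
cup = toy_mask(10, 3, 6)   # cup spans 4 rows
ratio = vertical_cup_to_disc_ratio(disc, cup)
print(ratio)
```

A real system would track this ratio over time alongside other parameters rather than rely on a single value.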

Pros:

- Scalable screening for diabetic eye disease in underserved populations: Cost-effective screening can reach a larger population, particularly in areas with limited access to ophthalmologists.

- Objective quantification of disease progression over time: Provides consistent and measurable data for tracking disease progression and treatment efficacy.

- Consistency in interpretation compared to human variability: Reduces inter- and intra-observer variability, making results more reproducible across readers and visits.

- Detection of subtle changes not visible to human observers: Algorithms can identify minute changes that might be missed during traditional examination.

- Integration with teleophthalmology for remote care: Enables remote diagnosis and monitoring, expanding access to specialized care.
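Objective quantification of progression can be as simple as fitting a trend line to repeated measurements. The following is a minimal sketch using an ordinary least-squares fit. The visit times and thickness values are made-up illustration data, and `linear_trend` is a hypothetical helper, not part of any product named in this article.

```python
def linear_trend(times, values):
    """Ordinary least-squares slope and intercept of values over times."""
    n = len(times)
    if n != len(values) or n < 2:
        raise ValueError("need at least two paired measurements")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("all measurements share the same time point")
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t


# Hypothetical follow-up data: years since baseline, mean thickness in µm.
visit_years = [0.0, 0.5, 1.0, 1.5, 2.0]
thickness_um = [290.0, 288.0, 286.0, 284.0, 282.0]
slope, intercept = linear_trend(visit_years, thickness_um)
print(f"change per year: {slope:.1f} µm")
```

A negative slope indicates thinning over the follow-up period. Whether a given rate is clinically meaningful is a judgment for the treating clinician.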

Cons:

- Image quality dependence on operator skill and equipment: Poor quality images can affect the accuracy of the analysis.

- Inability to capture peripheral retinal pathology in standard images: Standard imaging techniques may not capture the entire retina, potentially missing peripheral lesions.

- Limited effectiveness with uncommon or rare conditions: Algorithms trained on common conditions may not perform well on rare or atypical presentations.

- Challenges with media opacities (cataracts, vitreous hemorrhage): Opacities can obscure the retina, hindering image analysis.

- Need for disease-specific algorithms rather than general retinal assessment: Developing and validating algorithms for specific diseases is resource-intensive.

Examples of Successful Implementation:

- IDx-DR (Digital Diagnostics, formerly IDx): The first autonomous AI diagnostic system for diabetic retinopathy to receive FDA marketing authorization.

- Google's diabetic retinopathy detection system: Deployed in India and Thailand, demonstrating the potential for large-scale screening programs.

- ZEISS FORUM® Retina Workplace with AI-assisted analytics: Integrates AI tools into a comprehensive retinal imaging platform.

- Eyenuk's EyeArt system for diabetic retinopathy screening: Provides automated diabetic retinopathy screening through fundus photography.

- Optos' AI solutions for ultra-widefield retinal imaging analysis: Leverages ultra-widefield imaging to analyze a larger area of the retina.

Tips for Implementation:

- Ensure proper training of technicians for image acquisition: High-quality images are crucial for accurate analysis.

- Implement quality control steps before AI processing: Automated quality checks can identify and reject suboptimal images.

- Establish clear referral pathways for positive findings: Ensure timely referral to specialists for further evaluation and management.

- Consider point-of-care deployment in primary care and endocrinology: Integrating screening into primary care settings can improve access and early detection.

- Integrate with electronic health records for longitudinal tracking: Seamless integration facilitates data management and long-term monitoring.
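The quality-control and referral tips above can be sketched as a small triage function: reject unusable images before the model runs, then route the result. This is a rough sketch under stated assumptions. The brightness and sharpness checks are simple stand-ins for a real image-quality model, every threshold is a hypothetical placeholder, and `predict` stands for whatever model returns a probability of referable disease.

```python
from statistics import pvariance


def mean_brightness(image):
    """Average grey level of a 2D list of pixel values (0-255)."""
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)


def laplacian_variance(image):
    """Variance of a 4-neighbour Laplacian over interior pixels.

    Low values suggest a blurry image. Requires at least a 3x3 image.
    """
    responses = []
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            responses.append(
                image[r - 1][c] + image[r + 1][c]
                + image[r][c - 1] + image[r][c + 1]
                - 4 * image[r][c]
            )
    return pvariance(responses)


def triage(image, predict, *, min_brightness=40, max_brightness=220,
           min_sharpness=50.0, referral_threshold=0.5):
    """Gate on image quality, then route by model output (placeholder thresholds)."""
    if not min_brightness <= mean_brightness(image) <= max_brightness:
        return "retake: exposure out of range"
    if laplacian_variance(image) < min_sharpness:
        return "retake: image too blurry"
    # The model is only called once the image has passed quality control.
    if predict(image) >= referral_threshold:
        return "refer to ophthalmologist"
    return "routine rescreen"


# Toy 6x6 images: a high-contrast pattern, a featureless one, an underexposed one.
sharp = [[255 if (r + c) % 2 else 0 for c in range(6)] for r in range(6)]
flat = [[128] * 6 for _ in range(6)]
dark = [[5] * 6 for _ in range(6)]

print(triage(sharp, lambda img: 0.9))
print(triage(flat, lambda img: 0.9))
print(triage(dark, lambda img: 0.9))
```

Returning an explicit "retake" reason lets the technician correct the problem while the patient is still present, which ties quality control back to operator training.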

Popularized By:

Key figures and organizations in this field include Dr. Michael Abramoff (founder of Digital Diagnostics/IDx), Dr. Pearse Keane (Moorfields Eye Hospital/DeepMind collaboration), Dr. Lily Peng (Google Health's ophthalmology AI research), Heidelberg Engineering (OCT analysis platforms), and the Singapore Eye Research Institute (AI initiatives).

Ophthalmology and retinal imaging exemplify the potential of computer vision in healthcare. By automating analysis, improving accuracy, and expanding access to care, these technologies are poised to transform the landscape of eye care and significantly improve patient outcomes. This field continues to evolve, promising further advancements in early detection, personalized treatment, and ultimately, the prevention of blindness.

Computer Vision Applications in Healthcare: 7-Area Comparison

| Application Area | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Diagnostic Medical Imaging Analysis | High: requires large annotated datasets and integration efforts | High: computational power, annotated imaging data | Improved diagnostic accuracy and faster interpretations | Radiology departments, large hospitals | Early detection, standardization, large data handling |
| Surgical Assistance and Planning | Very high: real-time processing and system reliability critical | Very high: high-end hardware, specialized training | Enhanced surgical precision and reduced complications | Complex surgeries, minimally invasive procedures | Real-time guidance, improved training, workflow optimization |
| Pathology Image Analysis | High: digitization and consistent slide prep mandatory | High: slide scanners, storage, and computing | Reduced variability; quantitative, scalable pathology | Cancer diagnosis, histopathology labs | Whole-slide analysis, rare event detection, quantitative |
| Remote Patient Monitoring | Moderate to high: privacy and network reliability concerns | Moderate: edge devices, video infrastructure | Continuous safety monitoring and early intervention | Elderly care, remote health management | Non-contact monitoring, safety, reduced clinical visits |
| Dermatological Assessment | Moderate: data diversity and image quality challenges | Moderate: smartphone cameras, cloud platforms | Early skin disease detection and remote triage | Teledermatology, primary care | Access in underserved areas, consistent lesion tracking |
| Endoscopy and Colonoscopy Assistance | High: real-time detection with integration to existing systems | High: real-time video processing and specialized hardware | Increased detection rates and procedural quality | GI endoscopy suites, colonoscopy screening | Increased adenoma detection, reduced variability |
| Ophthalmology and Retinal Imaging | High: quality image capture and disease-specific models required | High: specialized imaging devices and analysis tools | Scalable screening and early eye disease detection | Diabetic retinopathy and glaucoma screening | Objective grading, remote screening, progression tracking |

Looking Ahead: Computer Vision's Continued Impact

Computer vision in healthcare has emerged as a transformative force, revolutionizing areas from diagnostic imaging analysis and surgical planning to remote patient monitoring and ophthalmology. This article has explored key applications of this technology, including its use in pathology image analysis, dermatological assessments, and endoscopy/colonoscopy assistance. The advancements discussed highlight the potential of computer vision to significantly enhance diagnostic accuracy, personalize patient care, and improve surgical outcomes across various medical specialties.

The most important takeaway is the potential for continued growth and innovation in this field. As algorithms become more sophisticated and access to robust medical datasets expands, the applications of computer vision in healthcare will broaden. Understanding these applications and integrating them into existing healthcare workflows is crucial for staying at the forefront of medical innovation and delivering optimal patient care. Combining computer vision with other advanced technologies, such as decision intelligence AI, could enable more efficient and effective data-driven decision-making. That combination would let healthcare providers draw deeper insights from the large volumes of data that computer vision systems generate, ultimately leading to better patient outcomes, as discussed in "What is Decision Intelligence AI? Practical implementation and Pitfalls" from Kleene.ai.

The future of healthcare is intertwined with the continued development and implementation of computer vision. By embracing these advancements, we can pave the way for a more efficient, accurate, and patient-centered healthcare system. Ready to harness the power of computer vision in your healthcare practice or research? Explore the cutting-edge solutions offered by PYCAD, a leader in developing and implementing computer vision applications for medical imaging and healthcare, and discover how they can help you transform the future of medicine.