Understanding AI Annotation Service Beyond the Hype

Imagine teaching a child to recognize the world around them. You don’t just show them objects; you point and explain. “This is a car; it has wheels.” “That’s a stop sign; it’s red.” An AI annotation service does this exact job for artificial intelligence, translating raw data into structured lessons a machine can process. This is far different from basic data entry. It's not about typing information; it's about applying expert human judgment and context. This labeled data becomes the textbook from which an AI model studies.

From Raw Data to Intelligent Fuel

Raw data, whether it's a folder of chest X-rays, a collection of satellite images, or thousands of customer service emails, is essentially inert. To an AI model, it’s just a chaotic jumble of pixels or words with no built-in meaning. The job of an AI annotation service is to turn this chaos into organized, intelligent fuel. For a self-driving car, this means labeling every pixel in a video feed as 'road,' 'pedestrian,' or 'other vehicle.'
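To make "labeling every pixel" concrete: a segmentation label is commonly stored as a mask the same size as the frame, holding one class ID per pixel. The minimal sketch below assumes a tiny hypothetical 3x4 frame and a hypothetical class map; real tools usually store masks as image files or encoded arrays rather than nested lists.

```python
from collections import Counter

# Hypothetical class map; a real project would fix these IDs in its guidelines.
CLASSES = {0: "background", 1: "road", 2: "pedestrian", 3: "other vehicle"}

# A tiny 3x4 "frame": each entry is the class ID assigned to one pixel.
mask = [
    [0, 0, 2, 0],
    [1, 1, 2, 3],
    [1, 1, 1, 3],
]

def class_pixel_counts(mask, classes):
    """Count how many pixels carry each class label."""
    counts = Counter(pixel for row in mask for pixel in row)
    return {classes[class_id]: n for class_id, n in sorted(counts.items())}

print(class_pixel_counts(mask, CLASSES))
```

Every pixel gets exactly one label, so the counts always add up to the number of pixels in the frame (12 here).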

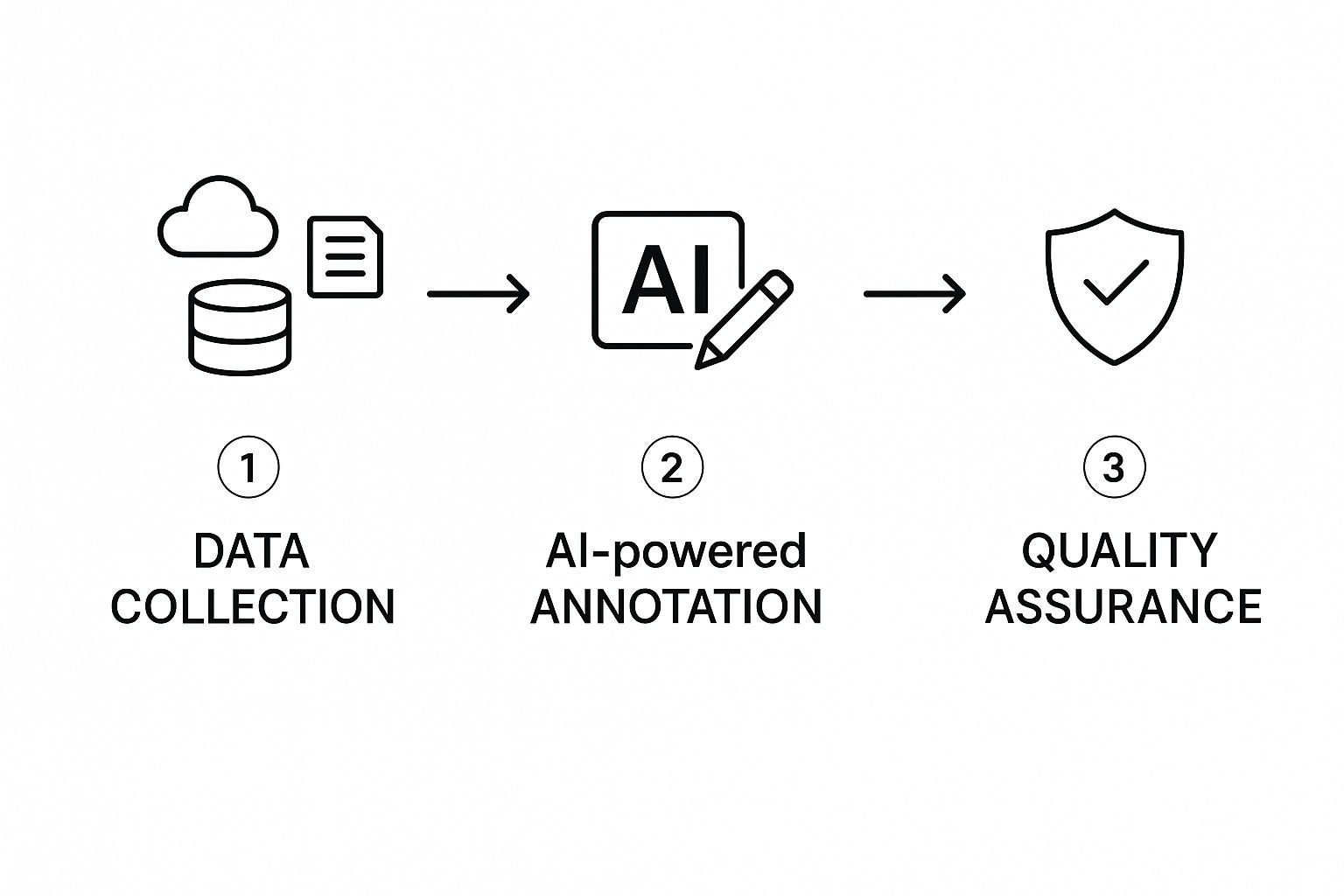

To fully grasp its importance, it helps to see where annotation fits within the broader machine learning lifecycle.

This visual shows that training data, the direct product of annotation, is the essential input that allows a machine learning model to learn and make accurate predictions. The quality of this initial input largely determines the final performance of the AI system.

The Critical Role of Context and Quality

This is where the difference between a casual effort and a professional service becomes clear. The task is not just about drawing boxes quickly; it’s about the quality and consistency of the labels. An inaccurate label can be worse than no label at all, because it teaches the AI the wrong lesson, leading to flawed results and unreliable behavior. This careful process is a necessary investment.

The value placed on high-quality data is clear in the market's growth. According to one industry report, the AI data annotation service market, valued at $2 billion in 2025, is expected to grow at a compound annual growth rate (CAGR) of 25% from 2025 to 2033, potentially reaching $10 billion by 2033. This rapid expansion reflects broad industry agreement that annotation isn't just another expense; it's the bedrock of successful AI development.
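Projections like these compound annually. A minimal sketch of the arithmetic (the function names are illustrative) shows that compounding $2 billion at exactly 25% for the eight years from 2025 to 2033 gives roughly $11.9 billion, while going from $2 billion to $10 billion over the same period implies an effective rate of about 22%. The gap presumably reflects rounding in the published projection.

```python
def project_value(start, rate, years):
    """Compound a starting value at a fixed annual growth rate."""
    return start * (1 + rate) ** years

def implied_cagr(start, end, years):
    """Annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1

# Figures quoted above: $2B in 2025, 25% CAGR, 2025 -> 2033 (8 years).
print(f"{project_value(2.0, 0.25, 8):.1f}")  # projected value in $B
print(f"{implied_cagr(2.0, 10.0, 8):.1%}")   # rate implied by $2B -> $10B
```

Running it prints `11.9` and `22.3%`.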

Professional services are set apart by:

- Domain Expertise: Annotators who understand the subject matter, whether that means identifying specific pathologies in medical scans or recognizing subtle sentiment in text.

- Rigorous Quality Control: Multi-step review processes that ensure high agreement between annotators and consistent accuracy across huge datasets.

- Scalable Workflows: Proven systems for managing large teams and complex projects to guarantee timely delivery without compromising on quality.

Ultimately, a high-quality AI annotation service provides the invisible backbone for any successful AI application, turning a promising concept into a dependable, real-world tool.

Inside the AI Annotation Service Workflow That Actually Works

Behind every effective AI model is a systematic process that turns raw, jumbled data into a structured and valuable asset for training. A professional AI annotation service doesn’t just apply labels at random; it follows a well-defined pipeline built for accuracy, consistency, and scale. Think of it less like a messy art project and more like a precision manufacturing line.

The Foundational Stages: From Chaos to Clarity

The journey begins with Data Assessment and Scoping. When a client provides a dataset, the first order of business is a deep-dive analysis. Imagine a head chef inspecting fresh ingredients before creating a new menu. The service provider examines the data's quality, format, and complexity to spot potential roadblocks and outline the project's scope. This initial review helps prevent issues later and establishes clear goals from the start.

Next comes one of the most important steps: creating detailed Annotation Guidelines. This document acts as the project's official rulebook, ensuring every annotator is on the same page. It removes guesswork by clearly defining what to label and how. For instance, the guidelines might detail the exact pixel boundaries for segmenting a medical tumor or the criteria for classifying customer support tickets as "Urgent." Solid guidelines are the foundation for a consistent dataset.
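Part of a rulebook can also be written down in machine-checkable form, so that tooling rejects labels the guidelines do not allow. The sketch below is one hypothetical way to do this for the support-ticket example. The class names, the `requires_note` rule, and `validate_ticket_label` are illustrative assumptions, not any specific platform's format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LabelRule:
    name: str
    definition: str
    requires_note: bool = False  # hypothetical rule: annotator must justify this label

# Hypothetical guideline excerpt for classifying support tickets.
TICKET_GUIDELINES = {
    "Urgent": LabelRule("Urgent", "Outage or payment failure affecting the customer now", requires_note=True),
    "Normal": LabelRule("Normal", "Question or issue with no immediate impact"),
    "Spam": LabelRule("Spam", "Unsolicited or irrelevant message"),
}

def validate_ticket_label(label, note=""):
    """Return a list of guideline violations for one annotation (empty if valid)."""
    rule = TICKET_GUIDELINES.get(label)
    if rule is None:
        return [f"unknown label {label!r}; allowed: {sorted(TICKET_GUIDELINES)}"]
    if rule.requires_note and not note.strip():
        return [f"label {label!r} requires a justification note"]
    return []

print(validate_ticket_label("Urgent"))
print(validate_ticket_label("Urgent", note="Checkout page returns errors"))
print(validate_ticket_label("Critical"))
```

Encoding even a few rules this way means an invented class such as "Critical" is caught at labeling time instead of during review.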

The flow chart below illustrates this journey from raw data to a high-quality, validated asset.

As the graphic shows, the process is a cycle of continuous improvement. Data is collected, fed into the annotation process, and then refined through strict quality checks, leading to ever-improving results.

The Core Annotation and Review Loop

With clear guidelines established, the Initial Annotation can begin. Trained human annotators apply labels to the data, whether that means drawing bounding boxes around cars, performing semantic segmentation on satellite photos, or tagging key information in legal contracts. Many modern workflows use AI-assisted tools where a model proposes a label, and a human expert then confirms, corrects, or fine-tunes it. This "human-in-the-loop" approach combines machine speed with human judgment.
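One common way to organize the human-in-the-loop step is to route each model pre-label by its confidence score. Every proposal still reaches a person, but low-confidence ones go to a more thorough correction queue. The `PreLabel` record, the 0.9 threshold, and the queue names below are hypothetical choices for illustration.

```python
from dataclasses import dataclass

@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # model's score in [0, 1]

def route_prelabels(prelabels, threshold=0.9):
    """Split model proposals into a quick-confirm queue and a full-review queue.

    Every item still reaches a human; the threshold only decides how much
    scrutiny it gets. The 0.9 default is an illustrative assumption.
    """
    queues = {"quick_confirm": [], "full_review": []}
    for p in prelabels:
        key = "quick_confirm" if p.confidence >= threshold else "full_review"
        queues[key].append(p.item_id)
    return queues

proposals = [
    PreLabel("img_001", "car", 0.97),
    PreLabel("img_002", "pedestrian", 0.62),
    PreLabel("img_003", "car", 0.91),
]
print(route_prelabels(proposals))
```

Raising the threshold sends more items to full review, trading speed for scrutiny.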

Platforms like Labelbox offer the necessary tools for these complex tasks, providing an interface designed for accuracy and teamwork.

This interface shows how annotators manage large-scale labeling projects, with features for quality review and collaboration built directly into the platform.

To better understand how these stages fit together, the following table breaks down the key components of a standard AI annotation workflow.

AI Annotation Workflow Stages Comparison

A detailed breakdown of each workflow stage, typical timeframes, quality checkpoints, and resource requirements

| Workflow Stage | Duration | Quality Gates | Key Activities | Output |
|---|---|---|---|---|
| Data Assessment & Scoping | 1-5 Business Days | Data format validation, complexity analysis | Review sample data, identify edge cases, define project goals, estimate effort | A clear Statement of Work (SOW) and project plan |
| Guideline Creation | 3-7 Business Days | Client review and approval, peer review | Define label classes, create visual examples, document rules and exceptions | The official Annotation Rulebook or "Source of Truth" |
| Initial Annotation | Varies (Project-dependent) | AI-assisted pre-labeling, ongoing spot checks | Apply labels, boxes, masks, or tags according to guidelines | A first-pass annotated dataset |
| Quality Review & Adjudication | Varies (Concurrent with annotation) | Multi-level review, consensus scoring, expert adjudication | Review annotations for accuracy, resolve disagreements between annotators | A validated and refined dataset meeting accuracy targets |

This table highlights the structured, multi-step nature of professional annotation. Each stage builds upon the last, with specific checks and balances to ensure the final output is reliable.

Ensuring High-Fidelity Data Through Rigorous Review

A first pass at annotation is never the final product. The labeled data immediately moves into a Multi-Level Review Process. Here, a more experienced annotator or a specialized quality assurance (QA) team checks the work against the guidelines. It’s similar to how a book editor reviews a manuscript to catch mistakes and improve the final draft.

Disagreements are a natural part of the process. When two annotators label an item differently, a formal adjudication step is used: a subject matter expert or project lead makes the final call, settles the dispute, and updates the guidelines if an ambiguity is discovered. This structured approach is what turns an accuracy rate of 95% or higher from a goal into a consistently met standard. Only after passing these quality checks is the data packaged and delivered, ready to train a dependable AI model.
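The consensus-and-adjudication step can be sketched as a majority vote that escalates any item without a clear majority to an expert, followed by an accuracy check against a small expert-labeled reference set. The votes, the `min_agreement` parameter, and the function names are hypothetical; the 95% target is the figure mentioned above.

```python
from collections import Counter

def consensus(labels, min_agreement=2/3):
    """Return the majority label, or None if the item needs expert adjudication."""
    label, votes = Counter(labels).most_common(1)[0]
    return label if votes / len(labels) >= min_agreement else None

def accuracy(final_labels, gold):
    """Fraction of reference items whose final label matches the expert label."""
    matches = sum(final_labels[item] == gold[item] for item in gold)
    return matches / len(gold)

# Hypothetical labels from three annotators per item.
votes = {
    "t1": ["Urgent", "Urgent", "Urgent"],
    "t2": ["Normal", "Urgent", "Normal"],
    "t3": ["Spam", "Normal", "Urgent"],  # no majority
}
final, escalated = {}, []
for item, labels in votes.items():
    decision = consensus(labels)
    if decision is None:
        escalated.append(item)
    else:
        final[item] = decision

# A subject matter expert settles the escalated item (hypothetical decision).
final["t3"] = "Normal"

gold = {"t1": "Urgent", "t2": "Normal", "t3": "Normal"}
print(escalated)  # ['t3']
print(f"{accuracy(final, gold):.0%}")  # compare against the 95% target
```

In practice the reference set would be a sample of the full dataset, and an accuracy below target would trigger rework and a guideline review.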

How AI Annotation Service Is Revolutionizing Medical Imaging

In hospital radiology departments, models trained with the help of an AI annotation service are working as powerful assistants, helping doctors detect diseases and save lives. This isn't about replacing medical experts; it's about giving them tools that can see what the human eye might miss. The combination of medical knowledge and AI technology makes precise labeling a critical step, often marking the difference between early detection and a missed diagnosis. Annotated medical images are used to train AI models to spot tumors, identify fractures, and flag subtle issues that even experienced radiologists might overlook.

From Pixels to Prognosis: Training AI to See

Think of training a medical AI like mentoring a new doctor. You wouldn't just hand them a textbook; you would guide them through thousands of patient scans, pointing out exactly what to look for. An AI annotation service does this on a massive scale. For instance, on a chest X-ray, a trained annotator might use semantic segmentation to carefully outline the exact shape of a suspicious lung nodule. On a CT scan, they could use 3D cuboids to mark the full volume of an organ. This detailed data teaches the AI to recognize complex patterns with the high accuracy that regulatory bodies demand.
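For segmentation work such as outlining a nodule, agreement between two outlines (or between an annotation and an expert reference) is often measured with the Dice similarity coefficient, 2|A∩B| / (|A| + |B|). This is a standard metric in medical image analysis rather than something the text above prescribes. The sketch represents each outline as a set of hypothetical pixel coordinates; the same function works for 3D volumes if each element is a (slice, row, col) voxel.

```python
def dice(mask_a, mask_b):
    """Dice similarity coefficient between two sets of labeled pixel coordinates.

    Returns 1.0 for identical outlines and 0.0 for outlines with no overlap.
    """
    if not mask_a and not mask_b:
        return 1.0  # both empty: treat as perfect agreement
    overlap = len(mask_a & mask_b)
    return 2 * overlap / (len(mask_a) + len(mask_b))

# Hypothetical nodule outlines from two annotators, as (row, col) pixels.
annotator_1 = {(10, 10), (10, 11), (11, 10), (11, 11)}
annotator_2 = {(10, 11), (11, 10), (11, 11), (12, 11)}

print(round(dice(annotator_1, annotator_2), 2))
```

Here the outlines share 3 of their 4 pixels each, giving a Dice score of 0.75.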

The U.S. Food and Drug Administration (FDA) has developed clear frameworks for AI/ML-based software as a medical device, a sign of AI's growing importance in diagnostics.

This official oversight underscores the need for transparent, traceable, and high-quality data when developing medical AI tools that clinicians can trust with patient health.

The Unique Challenges of Medical Data

Annotating medical data is a world away from labeling consumer photos or retail products. The stakes are simply higher, and the work presents a unique set of challenges that requires a specialized approach.

- Specialized Domain Knowledge: You can't ask just anyone to label these images. Annotators must be medical professionals or have deep training to correctly identify pathologies. A layperson can’t be expected to distinguish between a benign cyst and a malignant tumor.

- Extreme Accuracy Requirements: There is no room for error. A single misplaced label could teach the AI a flawed conclusion, creating serious patient safety risks. Quality and consistency are the top priorities.

- Data Privacy and Security: Patient images are rightly protected by strict regulations like HIPAA. Any annotation process must be built on a foundation of robust data anonymization and secure handling protocols.

- Complex Data Formats: Medical imaging goes beyond simple 2D pictures. Annotators work with 3D MRI and CT scans, DICOM files, and even dynamic ultrasound video, which calls for its own specialized video annotation techniques.
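As a very small illustration of the anonymization step, the sketch below strips direct identifiers from an image's metadata before it reaches annotators. The field names mirror standard DICOM attribute keywords, but the metadata is shown as a plain dictionary and the field list is deliberately incomplete. Real de-identification should follow the DICOM confidentiality profiles (PS3.15) and the applicable regulations, and must also consider identifiers burned into the pixels themselves.

```python
# Illustrative subset of identifying attributes (DICOM keywords); NOT a complete list.
IDENTIFYING_FIELDS = {
    "PatientName",
    "PatientID",
    "PatientBirthDate",
    "PatientAddress",
    "InstitutionName",
    "ReferringPhysicianName",
}

def deidentify(metadata, pseudo_id):
    """Return a copy of the metadata with identifying fields removed.

    `pseudo_id` is a hypothetical study-specific code that lets the annotation
    team track the image; the mapping back to the patient is assumed to stay
    with the data owner.
    """
    cleaned = {k: v for k, v in metadata.items() if k not in IDENTIFYING_FIELDS}
    cleaned["PatientID"] = pseudo_id
    return cleaned

record = {
    "PatientName": "DOE^JANE",
    "PatientID": "123456",
    "PatientBirthDate": "19700101",
    "Modality": "CT",
    "SliceThickness": "1.0",
}
print(deidentify(record, pseudo_id="STUDY-0001"))
```

The function returns a new dictionary, so the original record is never modified in place.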

Democratizing Advanced Diagnostics

When these challenges are met correctly, the results can be profound. One of the most powerful outcomes of a professional AI annotation service is its ability to bring expert-level care to more people. By training solid diagnostic models, these services help build AI tools that can be used in smaller clinics or hospitals in underserved areas. These facilities may not have an on-site sub-specialist radiologist, but an AI assistant trained on world-class data can offer a valuable second opinion and flag urgent cases for human review.

This widespread potential is driving significant market growth, fueled largely by the increasing need for high-quality training data in fields like medical imaging and autonomous driving. According to market analyses of these trends, these two areas currently lead the market because of their strict accuracy requirements and their reliance on large, complex datasets.

Beyond Images: The Hidden Universe of AI Annotation Applications

When most people hear "AI annotation," they picture someone drawing boxes around cars or cats in photos. That is part of the story, but the true scope of an AI annotation service goes far beyond simple image labeling, into a rich world of different data types. It’s the essential teaching process that powers the smart technology we interact with daily, from understanding voice commands to interpreting customer reviews.

This detailed labeling is the invisible but necessary work that helps build intelligent systems capable of understanding our complex world.

Unlocking Language and Sound

Think about the subtleties of human language. For a support chatbot to be genuinely effective, it must do more than match keywords; it needs to understand context and purpose. This is where Natural Language Processing (NLP) annotation is applied. Human annotators tag sentences to identify entities (like names or dates), classify user intent (like "I want to buy something" versus "I need help with an order"), and even flag emotional tones like frustration or sarcasm. This work turns a rigid, script-following bot into a helpful conversational agent.
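An NLP annotation for one chatbot message might combine an intent label with character-offset entity spans. The record layout and label names below are hypothetical. The sketch adds one simple automated check: each span's offsets must reproduce exactly the text the annotator says they cover.

```python
message = "I need help with order 4471 placed on May 3"

# Hypothetical annotation record for the message above.
annotation = {
    "intent": "order_help",  # as opposed to e.g. "purchase"
    "entities": [
        {"start": 23, "end": 27, "label": "ORDER_ID", "text": "4471"},
        {"start": 38, "end": 43, "label": "DATE", "text": "May 3"},
    ],
    "tone": "neutral",
}

def misaligned_spans(text, entities):
    """Return entities whose [start, end) offsets do not reproduce their text."""
    return [e for e in entities if text[e["start"]:e["end"]] != e["text"]]

print(misaligned_spans(message, annotation["entities"]))  # [] when offsets are correct
```

Offsets that are off by one character are easy to miss by eye but are caught immediately by this comparison.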

Audio annotation teaches AI how to listen with human-like discernment. Voice assistants such as Alexa or Siri rely on meticulously labeled audio data to work correctly. Annotators not only transcribe spoken words but also perform speaker diarization to distinguish who is speaking in a conversation with multiple people. They also tag regional accents to improve recognition and identify non-speech sounds, like a dog barking, to prevent confusion. Without this level of detail, a voice assistant could easily misinterpret a command, leading to a poor user experience.
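Speaker diarization labels can be represented as time-stamped segments, each assigned to a speaker, with non-speech events tagged separately. The sketch below assumes a hypothetical `(start_s, end_s, tag)` format with a `NOISE:` prefix for non-speech sounds. It computes per-speaker talk time and flags overlapping speech, a case the guidelines need a clear rule for.

```python
from collections import defaultdict

# Hypothetical diarization labels: (start_s, end_s, tag)
segments = [
    (0.0, 4.2, "speaker_A"),
    (3.8, 7.5, "speaker_B"),
    (7.5, 8.1, "NOISE:dog_bark"),
    (8.1, 12.0, "speaker_A"),
]

def talk_time(segments):
    """Total seconds per speaker, ignoring non-speech tags."""
    totals = defaultdict(float)
    for start, end, tag in segments:
        if not tag.startswith("NOISE:"):
            totals[tag] += end - start
    return {speaker: round(t, 2) for speaker, t in totals.items()}

def overlaps(segments):
    """Pairs of speech segments from different speakers that overlap in time."""
    speech = [s for s in segments if not s[2].startswith("NOISE:")]
    found = []
    for i, (s1, e1, t1) in enumerate(speech):
        for s2, e2, t2 in speech[i + 1:]:
            if t1 != t2 and s1 < e2 and s2 < e1:
                found.append((t1, t2, round(min(e1, e2) - max(s1, s2), 2)))
    return found

print(talk_time(segments))
print(overlaps(segments))
```

The dog bark is excluded from talk time, and the 0.4-second stretch where both speakers talk is reported as an overlap.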

Powering Autonomous Systems and Customer Insights

In the world of autonomous vehicles, the stakes for accurate annotation are incredibly high. Here, an AI annotation service must process a massive amount of varied data to support safety on the road. Annotators don't just draw 2D boxes; they perform intricate semantic segmentation, where every pixel in a video frame is classified as 'road,' 'pedestrian,' or 'sky.' They also label 3D LiDAR point cloud data to create a precise digital map of the car's environment. An autonomous car can produce up to 1 GB of sensor data per second, so selecting the right data and labeling it carefully is a substantial undertaking.
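A 3D cuboid label for LiDAR data is often stored as a center, a size, and a heading (yaw) angle. One basic automated check, sketched below under those assumptions, counts how many LiDAR points fall inside each cuboid: a labeled "pedestrian" box containing almost no points is worth a second look. The `Cuboid` format and the point values are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Cuboid:
    cx: float      # center x (meters)
    cy: float      # center y
    cz: float      # center z
    length: float  # size along the box's own x axis
    width: float   # size along the box's own y axis
    height: float  # size along the vertical axis
    yaw: float     # rotation about the vertical axis (radians)

    def contains(self, x, y, z):
        """True if point (x, y, z) lies inside the rotated box."""
        dx, dy, dz = x - self.cx, y - self.cy, z - self.cz
        # Rotate the offset into the box's own frame (rotation by -yaw).
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        local_x = c * dx + s * dy
        local_y = -s * dx + c * dy
        return (abs(local_x) <= self.length / 2
                and abs(local_y) <= self.width / 2
                and abs(dz) <= self.height / 2)

def points_inside(box, points):
    """Number of points that fall inside the cuboid."""
    return sum(box.contains(*p) for p in points)

# Hypothetical pedestrian box rotated 90 degrees, and a few LiDAR returns.
ped = Cuboid(5.0, 2.0, 0.9, length=0.8, width=0.6, height=1.8, yaw=math.pi / 2)
cloud = [(5.0, 2.3, 1.0), (5.2, 2.0, 0.5), (5.35, 2.0, 1.0), (9.0, 9.0, 0.0)]
print(points_inside(ped, cloud))
```

Handling yaw matters: the point at x = 5.35 would fall inside an unrotated box of the same size, but lies outside this rotated one.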

This same need for deep understanding applies to business intelligence. Sentiment analysis is a powerful application that helps companies listen to their customers at scale. Human annotators act as emotional decoders, reading product reviews and social media comments to teach AI how to spot not just positive or negative feelings, but also complex emotions like disappointment or excitement. This gives businesses clear insights to improve their products and services. The same precision is vital in other fields too, as in the project Fighting Cervical Cancer With Artificial Intelligence.

To better understand how these methods are applied, the table below gives a clear overview of how different industries use AI annotation for specific goals.

AI Annotation Applications Across Industries

Comprehensive overview of how different industries utilize AI annotation services for specific business outcomes

| Industry | Annotation Type | Business Application | Accuracy Requirements | ROI Impact |
|---|---|---|---|---|
| E-commerce & Support | Natural Language Processing (NLP) | Building intelligent chatbots that understand user intent and resolve issues. | High | Increased customer satisfaction, reduced support agent workload. |
| Consumer Electronics | Audio Annotation, Speaker Diarization | Improving the accuracy and responsiveness of voice assistants. | Very High | Better user adoption and product loyalty. |
| Automotive | Semantic Segmentation, 3D LiDAR | Training perception models for self-driving cars to identify objects safely. | Extremely High | Enables autonomous functionality and ensures safety compliance. |
| Retail & Marketing | Sentiment Analysis | Analyzing customer feedback from reviews and social media for insights. | High | Guides product development and informs marketing strategies. |
| Healthcare | Medical Imaging Segmentation | Identifying tumors, anomalies, or organs in scans like X-rays and MRIs. | Extremely High | Supports faster and more accurate diagnostics for clinicians. |

As the table shows, the type of annotation and the required accuracy are directly linked to the application and its value. From safer cars to more helpful products, the impact is significant.

These diverse examples make it clear that a professional AI annotation service is not a one-size-fits-all product. It is a specialized discipline that applies precise methods to turn raw data into structured knowledge, solving distinct business challenges and creating a measurable advantage in many industries.

What Separates Professional AI Annotation Service From DIY Attempts

The difference between a professional AI annotation service and a do-it-yourself project isn't just about speed or volume. It’s about the hidden framework of quality control that supports the entire operation. Labeling a complex dataset without these systems is like building a house without a blueprint. It might look fine from the street, but hidden cracks in the foundation can cause the whole structure to fail later.

Professional services are experts at building that unseen foundation, ensuring the final data is trustworthy and solid.

The Unseen Layers of Quality Assurance

At the heart of a professional service is a detailed review process that goes far beyond a simple second look. It begins with intensive annotator training and calibration. Think of calibration like tuning an orchestra before a concert; it ensures every annotator interprets the project guidelines in exactly the same way, applying labels with near-perfect consistency.

This initial alignment is strengthened by ongoing quality checks and feedback loops. These systems don’t just catch mistakes; they teach the entire team, preventing the same error from being repeated and steadily improving the group's collective skill.
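Calibration is often run against a "gold" set of items whose correct labels were fixed in advance by experts. The minimal sketch below assumes a hypothetical 90% pass mark. It scores each annotator on that set and lists the specific items they missed, so feedback can point to concrete examples.

```python
def calibration_report(answers, gold, pass_mark=0.9):
    """Score each annotator against gold labels and list the items they missed."""
    report = {}
    for annotator, labels in answers.items():
        missed = sorted(item for item, correct in gold.items() if labels.get(item) != correct)
        score = 1 - len(missed) / len(gold)
        report[annotator] = {"score": score, "passed": score >= pass_mark, "missed": missed}
    return report

# Hypothetical gold set and answers from two annotators.
gold = {"q1": "car", "q2": "truck", "q3": "bus", "q4": "car", "q5": "pedestrian"}
answers = {
    "ann_01": {"q1": "car", "q2": "truck", "q3": "bus", "q4": "car", "q5": "pedestrian"},
    "ann_02": {"q1": "car", "q2": "car", "q3": "bus", "q4": "car", "q5": "cyclist"},
}
for name, result in calibration_report(answers, gold).items():
    print(name, result)
```

An annotator who falls below the pass mark would typically review the missed items against the guidelines and repeat the calibration before joining production work.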

Managing Complexity and Consistency at Scale

As projects get bigger, keeping everyone on the same page becomes a major hurdle, especially with teams spread across different time zones and cultures. This is where a professional service truly shines. Such services use clear protocols for handling disagreements through adjudication, a process where a subject matter expert makes the final call on a confusing label.

This not only resolves the immediate issue but also helps refine the annotation guidelines for everyone moving forward. The need for these structured workflows is a key reason the data annotation tools market is growing: one market research report values it at $2.33 billion in 2024 and projects it to reach $2.99 billion by 2025.

Balancing Human Expertise with Automation

The best services don't just depend on manual checks. They integrate automated quality checking tools that act as the first line of defense. These tools can instantly flag statistical oddities, incorrect label formats, or logical errors that a person might overlook. For example, an automated check could flag a bounding box for a "car" that is strangely small or located in an impossible place, like floating in the sky.
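The "car floating in the sky" example translates into simple rule-based checks. In the sketch below, the box format (x, y, width, height in pixels, origin at the top-left), the minimum-area threshold, and the horizon row are all hypothetical values a project would set in its guidelines. The horizon rule also assumes a forward-facing camera on a roughly flat road. Flagged boxes go to a human reviewer rather than being deleted automatically.

```python
def qc_flags(box, image_w, image_h, min_area=100, horizon_y=None):
    """Return reasons a bounding box looks suspicious (empty list = no flags)."""
    x, y, w, h = box["x"], box["y"], box["w"], box["h"]
    flags = []
    if w <= 0 or h <= 0:
        flags.append("non-positive size")
    if x < 0 or y < 0 or x + w > image_w or y + h > image_h:
        flags.append("extends outside image")
    if w * h < min_area:
        flags.append("unusually small")
    # Image y grows downward, so a car whose bottom edge is above the
    # horizon row would be "floating in the sky".
    if box["label"] == "car" and horizon_y is not None and y + h < horizon_y:
        flags.append("car entirely above horizon")
    return flags

# Hypothetical boxes on a 1280x720 frame.
boxes = [
    {"label": "car", "x": 100, "y": 400, "w": 220, "h": 120},
    {"label": "car", "x": 600, "y": 50, "w": 80, "h": 40},
    {"label": "car", "x": 900, "y": 420, "w": 6, "h": 5},
]
for b in boxes:
    print(b["label"], qc_flags(b, image_w=1280, image_h=720, horizon_y=300))
```

The first box passes, the second is flagged as floating above the horizon, and the third is flagged as unusually small.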

However, these tools are there to support, not replace, the judgment of human experts. The final decision on tricky or ambiguous cases still falls to a person with deep domain knowledge. This quality-first mindset is critical across all data types; reliable training data is just as essential in applications such as AI for business analytics. This balance between automated efficiency and human insight produces a final dataset that is both accurate and dependable.

Smart Investment Strategies for AI Annotation Service

Evaluating an AI annotation service based on a simple per-image or per-hour price is like judging a surgeon by the cost of their scalpel: it misses the point entirely. The real value isn’t found in the unit cost, but in the total impact on your project's timeline, budget, and ultimate success. A cheap, low-quality dataset can lead to expensive model retraining, project delays, and a final product that fails to perform, wiping out any initial savings.

A smart investment strategy looks beyond the price tag to calculate the total cost of ownership and the return on that investment.

Understanding the True Cost of Annotation

An in-house annotation team might look cheaper on the surface, but hidden expenses add up quickly. These include salaries, benefits, recruitment, management overhead, software licensing fees, and the significant time spent on training and quality control. A specialized AI annotation service bundles these costs into a predictable partnership model. You aren’t just buying labels; you are investing in a ready-made infrastructure of trained experts, proven workflows, and dedicated project management. This frees your core team to focus on model development instead of data preparation logistics.

This shift toward valuing specialized expertise is a major reason why the data collection and labeling market is expanding so rapidly. One market analysis projects that this market will reach USD 29.2 billion by 2032, growing at a compound annual rate of 28.54% from 2024 to 2032.

Key Factors That Drive Your Investment

To make an informed decision, you must understand the main variables that influence the cost and structure of an annotation project. Budgeting accurately requires a clear view of what you need. The primary drivers include:

- Data Complexity: Simple 2D bounding boxes on clear images are far less expensive than detailed semantic segmentation on 3D medical scans or identifying nuanced sentiment in text.

- Accuracy Requirements: A project needing 99% accuracy demands multiple layers of review and expert oversight, which costs more than one aiming for 90%. For critical applications like medical diagnostics, this precision is non-negotiable.

- Turnaround Time: Tight deadlines often require dedicating more annotators and resources to a project, which can affect the overall cost.

- Revision Cycles: A clear set of annotation guidelines from the start is the best way to minimize expensive revisions down the line.
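The accuracy factor is easier to weigh once it is translated into the number of wrong labels left in a dataset. A quick back-of-the-envelope sketch, assuming a hypothetical 100,000-item dataset:

```python
def expected_errors(n_items, accuracy):
    """Expected number of incorrect labels at a given per-item accuracy."""
    return round(n_items * (1 - accuracy))

# Hypothetical dataset size.
for acc in (0.90, 0.95, 0.99):
    print(f"{acc:.0%} accuracy -> ~{expected_errors(100_000, acc):,} wrong labels")
```

Moving from 90% to 99% accuracy cuts the expected number of wrong labels from about 10,000 to about 1,000, which is what the extra review layers are paying for.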

By structuring the partnership with a clear understanding of these factors, companies can find flexible pricing models—such as pilot projects or milestone-based payments—that balance cost control with the unwavering need for quality. This approach ensures your investment delivers a high-quality dataset that produces measurable returns.

Key Takeaways for AI Annotation Service Success

Working with an AI annotation service is like setting out on a major expedition. If you don't have a reliable map and the right gear, you're likely to get lost. This roadmap brings together the core lessons from our guide into practical strategies to make sure your investment results in a powerful, working AI model.

Define Success Before You Start

The single most common reason for failure is a lack of clarity. Before any data gets labeled, your team and your annotation partner need to be on the exact same page about the final goal. Success isn't just about labeling data; it's about labeling the right data correctly for a very specific job. Unclear instructions lead to messy results, project delays, and expensive corrections.

To build a strong foundation, you need to create a "source of truth" by defining:

- Detailed Annotation Guidelines: A complete rulebook filled with visual examples for every label and tricky situation.

- Measurable Quality Metrics: Set specific targets for accuracy, consistency, and inter-annotator agreement (IAA), which measures how well different annotators agree.

- Clear Communication Channels: A set procedure for asking questions and clearing up confusion without delay.
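For two annotators, inter-annotator agreement is often reported as Cohen's kappa, which discounts the agreement expected by chance: κ = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the chance agreement. The text above doesn't name a specific metric, so treat this as one common option. The sketch implements it from scratch on hypothetical sentiment labels.

```python
from collections import Counter

def cohens_kappa(a, b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical sentiment labels for the same eight reviews.
ann_1 = ["pos", "pos", "neg", "neg", "neu", "pos", "neg", "neu"]
ann_2 = ["pos", "neg", "neg", "neg", "neu", "pos", "pos", "neu"]
print(round(cohens_kappa(ann_1, ann_2), 3))
```

The annotators agree on 6 of 8 items (75%), but once chance agreement is removed, kappa is about 0.62. This is why kappa is a stricter target than raw percent agreement.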

Treat Quality as a Continuous Process

Quality assurance isn't something you do at the end; it's a constant feedback loop that's part of the entire annotation workflow. A professional AI annotation service builds quality in from day one with solid training and alignment, ensuring every annotator follows the guidelines in the same way. This is where the reliability of a professional service truly stands out.

Look for these indicators of a quality-focused process:

- Multi-Level Reviews: Successive checks of the work by senior annotators or quality assurance specialists.

- Adjudication Systems: A formal method for settling disagreements between annotators.

- Iterative Guideline Updates: Using feedback from the annotation team to make the rules clearer and better over time.

Build a Scalable and Strategic Partnership

Your first project is probably not your last. A smart approach involves thinking about how your annotation needs will grow as your business does. Choosing an AI annotation service isn't a one-time purchase; it's a long-term partnership. Assess potential partners on their capacity to grow with you, manage more complex data, and adjust to new project needs. This is especially important in high-stakes fields like medical imaging, where subject matter expertise is essential and the need for top-quality data is only going to grow.

For medical device companies that need a partner with proven experience in complex imaging data, PYCAD provides specialized annotation and AI model development services. Find out how we can help move your project forward at PYCAD.