3D image segmentation is all about teaching a computer to see and understand objects within a three-dimensional space, like the data from an MRI or CT scan. The goal is to take a dense block of digital information and carefully partition it into distinct, labeled structures—isolating organs, bones, tumors, or any other region of interest. This makes the raw data understandable, measurable, and, most importantly, actionable for experts.

What 3D Image Segmentation Really Means

Think of it like this: you're handed a clear, solid block of Jell-O with different fruits suspended inside. From the outside, you can see the shapes, but you can't truly understand their size, position, or how they relate to each other. 3D image segmentation is the digital equivalent of meticulously carving out each piece of fruit, one by one, leaving you with a perfect model of each individual item.

The "Jell-O block" in this case is a volumetric dataset, often composed of hundreds of stacked 2D images. Each tiny cube of data is called a voxel—think of it as a 3D pixel. The segmentation process assigns a specific label to every single voxel, essentially "coloring in" each distinct structure within the dataset.

This digital carving provides invaluable context that a flat, 2D image simply can't offer. Instead of just looking at a single slice of a brain scan, a neurologist can see the entire 3D structure of a tumor and understand precisely how it intertwines with surrounding healthy tissue.

From Pixels to Practical Insights

To give you a better overview, here's a quick summary of the core concepts.

3D Image Segmentation at a Glance

| Aspect | Description |

|---|---|

| Core Goal | To partition a 3D digital image into multiple, meaningful segments or regions. |

| Input | A volumetric dataset, typically from medical scans (MRI, CT) or industrial imaging. |

| Smallest Unit | A voxel (volumetric pixel), the basic building block of a 3D image. |

| Output | A labeled map where each voxel is assigned to a specific object (e.g., "heart," "tumor"). |

| Analogy | Digitally sculpting a detailed statue from a raw, uncarved block of digital data. |

This table shows how segmentation transforms abstract data into something concrete and useful.

The idea of dividing an image into parts isn't new; it dates back to the early days of computing in the 1960s. However, its application in three dimensions has become a game-changer, especially in medicine. Today, it’s a critical component in medical imaging, accounting for over 30% of all AI applications in healthcare, and its adoption is only growing.

This leap from raw data to actionable insight is what makes the process so powerful. It directly enables:

- Quantitative Analysis: Doctors can precisely measure the volume of a shrinking tumor after treatment or calculate the exact size of a cardiac chamber.

- Surgical Planning: Surgeons can use the resulting 3D models to rehearse complex procedures, mapping out their approach before ever making an incision.

- Automated Diagnostics: AI models can be trained on segmented data to automatically flag potential anomalies for a radiologist to review, significantly speeding up the diagnostic workflow.

To really get a handle on 3D image segmentation, it helps to first understand the fundamentals of computer vision. That foundational knowledge explains how machines learn to interpret visual information in the first place, setting the stage for more complex tasks like this.

Ultimately, 3D image segmentation is about adding a layer of intelligent interpretation to raw imaging data. It’s the essential bridge between just seeing a 3D scan and truly understanding the intricate structures hidden within it.

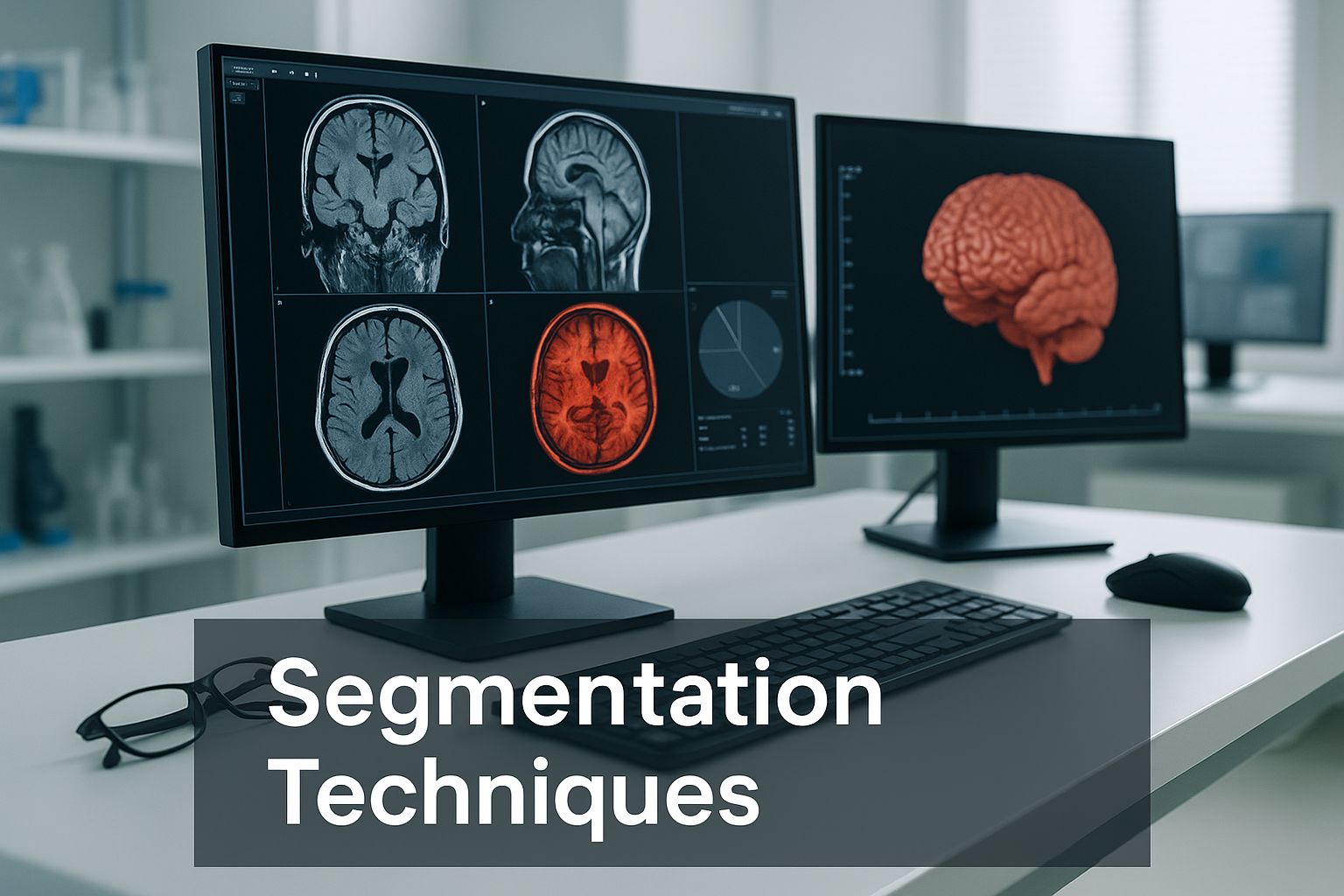

Exploring Core Segmentation Techniques

So, how does a computer actually learn to "see" inside a 3D scan? The answer isn't a single magic trick but a whole spectrum of methods, each with its own logic. These core 3d image segmentation techniques have evolved from simple, rule-based approaches to sophisticated deep learning models that feel almost intuitive.

This journey starts with foundational methods that work directly with an image's most basic properties, like the brightness of each individual voxel. Think of these as the fundamental building blocks that paved the way for the more advanced strategies we use today.

Classic Rule-Based Methods

The most straightforward way to segment an image is through thresholding. Think of it like adjusting the contrast on your TV to make an actor pop against the background. Thresholding does something similar by classifying voxels based purely on their intensity. If a voxel's intensity is above a set value, it gets labeled as part of the object; if it's below, it's considered background. This is surprisingly effective for high-contrast images, like separating dense bone from soft tissue in a CT scan.

Another classic approach is region growing. This is a bit like dropping a seed of digital dye into the scan. You start by hand-picking a "seed" voxel inside the structure you want to isolate. From there, the algorithm expands outward, absorbing any neighboring voxels that share similar properties, like intensity or texture. The process stops when it hits a boundary where the voxels are too different, neatly outlining an entire connected object, such as a single organ.

While these traditional methods are fast and easy to grasp, they have their limits. They often stumble when dealing with noisy images or when the boundaries between structures are fuzzy. This is exactly where more powerful, learning-based approaches have stepped in.

The Rise of Deep Learning in Segmentation

Deep learning has completely changed the game for 3d image segmentation. Instead of being bound by predefined rules, these models learn to spot patterns and features on their own, just by studying huge volumes of labeled data.

One of the most famous architectures in medical imaging is the U-Net. A great way to visualize a U-Net is to imagine it as a digital artist who has been tasked with creating a perfect outline of an object.

- The Encoding Path (The Rough Sketch): First, the model looks at the image and passes it through layers that shrink it down, like an artist stepping back to get the big picture. This captures the overall context—what's in the scene—but sacrifices fine detail.

- The Decoding Path (The Fine Details): Next, the model reverses course. It takes that compressed sketch and carefully enlarges it back to its original size. As it does this, it uses "skip connections" to pull in high-resolution details from the earlier layers. This is like the artist leaning back in to add the precise boundaries and delicate textures, guided by their initial sketch.

This elegant design allows U-Net and its many offshoots (like nnU-Net and V-Net) to generate incredibly accurate segmentations, even for tricky, overlapping structures. The performance of these models often hinges on the computing power available, making a solid understanding of specialized AI hardware like GPUs essential for anyone in the field.

To help put these methods into perspective, here's a quick comparison of the classic and modern approaches.

Comparing 3D Segmentation Techniques

| Technique | How It Works (Analogy) | Best For | Limitations |

|---|---|---|---|

| Thresholding | Adjusting the brightness on a TV to make things stand out. | High-contrast images where objects have distinct intensity values (e.g., bone vs. tissue). | Very sensitive to noise; struggles with objects that have similar intensities to their surroundings. |

| Region Growing | Dropping a seed of digital dye that spreads until it hits a boundary. | Segmenting single, connected objects with relatively uniform interiors (e.g., a specific organ). | Requires manual seed placement; can "leak" through weak or broken boundaries into other areas. |

| Deep Learning (U-Net) | An artist sketching the big picture, then zooming in to add fine details. | Complex, low-contrast, or overlapping structures where context is key (e.g., tumors, blood vessels). | Requires a large, well-labeled dataset for training; can be computationally expensive. |

As the table shows, there’s no single "best" method—the right choice depends entirely on the specific problem you're trying to solve.

Fundamentally, deep learning models go beyond simple rules. They learn the "look" and "feel" of different anatomical structures, which lets them make intelligent decisions that can mirror, and sometimes even surpass, a human's ability to spot patterns in 3D medical data.

This ability to learn from examples has cemented deep learning as the go-to standard for most modern 3d image segmentation tasks, whether it’s planning a complex surgery or tracking how a disease is progressing with incredible accuracy.

How 3D Segmentation Is Changing the Face of Medicine

While the technology itself is fascinatingly complex, the real impact of 3D image segmentation happens when it leaves the research lab and enters the clinic. This is where abstract code becomes a life-saving tool, giving doctors a level of clarity that was pure science fiction just a generation ago. We're not just talking about a minor upgrade here; this is a fundamental shift in how medicine is practiced.

By transforming a stack of flat, gray scan slices into an interactive 3D map of a patient's body, segmentation gives doctors an intuitive grasp of their unique anatomy. This isn't just a pretty picture. It's a detailed, measurable, and manipulable digital model that directly informs critical decisions throughout a patient's journey.

The medical world has embraced this technology with open arms. A recent market analysis shows that medical 3D imaging—which relies entirely on precise 3D image segmentation to function—accounts for over 60% of the entire 3D imaging market. This signals a massive, industry-wide belief in these powerful tools. To get a broader sense of how this technology is advancing, you can explore more on the evolution of 3D vision systems.

Empowering Surgeons with a Digital Rehearsal

Think about a surgeon getting ready for a tricky operation to remove a brain tumor. In the past, they’d sit with a series of 2D MRI scans, trying to mentally piece together a 3D puzzle of the tumor, nearby blood vessels, and critical brain tissue. This process leans heavily on years of experience, but there's always a degree of guesswork involved.

Now, imagine that same surgeon slipping on a headset or looking at a screen displaying a perfect 3D model of that patient's brain. They can spin it around, make healthy tissue transparent, and see exactly where a major artery wraps around the tumor.

This is surgical planning on a whole new level, all thanks to 3D image segmentation. The practical benefits are immense:

- Preoperative Rehearsal: Surgeons can essentially "fly through" the surgical path before making a single cut, planning the safest route to the tumor while steering clear of vital structures.

- Improved Accuracy: With a clear 3D roadmap, surgeons can use smaller, more precise incisions. For the patient, this often means less blood loss and a quicker recovery.

- Customized Surgical Tools: In complex bone surgeries, segmented models can be used to 3D-print surgical guides that fit a patient's anatomy perfectly, or even to create personalized implants.

This digital rehearsal space is all about minimizing surprises in the operating room. By planning for every possibility on a digital twin of the patient, surgeons walk into complex procedures with far more confidence and precision.

Precision in Diagnosis and Disease Tracking

The operating room isn't the only place segmentation is making a difference. It’s also a game-changer for diagnosing and managing chronic diseases. Many conditions, like multiple sclerosis (MS) or slow-growing cancers, are monitored by tracking very subtle changes inside the body over months or even years.

Trying to measure these tiny changes by hand from hundreds of individual scan slices is incredibly time-consuming and prone to error. A radiologist could easily miss a slight increase in the size of a brain lesion between two scans taken a year apart.

This is where automated segmentation shines. An AI can instantly process both the old and new scans, segment the lesion in each one, and calculate its volume down to the cubic millimeter. This gives doctors hard data, providing an objective way to see if a disease is progressing or if a treatment is working.

Examples of this quantitative analysis include:

- Oncology: Measuring the exact percentage of tumor shrinkage after chemotherapy.

- Neurology: Calculating the rate of brain volume loss in patients with Alzheimer's disease.

- Cardiology: Determining the heart's ejection fraction by segmenting the ventricles at different points in the cardiac cycle.

This moves diagnostics from a subjective guess ("it looks a little bigger") to a quantitative science ("the lesion has grown by 8%").

Optimizing Radiation Therapy

In cancer care, radiation therapy is all about precision. The entire goal is to hit a tumor with a powerful dose of radiation while doing as little harm as possible to the healthy tissue around it. It's an incredibly delicate balancing act.

Accurate 3D image segmentation is the foundation of modern radiotherapy. Before treatment, a radiation oncologist uses CT scans to outline two critical zones:

- The Target Volume: The exact 3D shape of the tumor that needs to be destroyed.

- The Organs at Risk: The nearby healthy structures, like the spinal cord, lungs, or kidneys, that are sensitive to radiation.

Once these areas are clearly segmented, powerful software can simulate thousands of radiation beam angles and intensities. The system then designs a treatment plan that wraps the radiation dose tightly around the tumor's shape, like a glove. This "dose painting," known as intensity-modulated radiation therapy (IMRT), would simply be impossible without the detailed anatomical map that segmentation provides.

Measuring the Accuracy of Segmentation Models

Building a 3D image segmentation model is one thing, but trusting it is another matter entirely. After an AI has meticulously outlined a tumor or organ from a scan, how can we be sure it got it right? This is where the real work begins: performance evaluation. It's the critical step that separates a cool tech demo from a tool that's genuinely reliable enough for doctors to use.

Without solid, objective measurements, we’re essentially flying blind. A flawed segmentation model could lead to a misdiagnosis or a botched surgical plan—the stakes are incredibly high. It brings to mind the 1999 NASA orbiter, a $125 million project lost simply because engineering teams used different units of measurement. In healthcare, where lives are on the line, the need for objective accuracy checks is even more non-negotiable.

Understanding Key Performance Metrics

So, how do we grade the AI’s work? We compare its segmentation to a "ground truth"—a gold-standard version that has been carefully hand-labeled by a human expert. Think of it like comparing a student's answer key to the teacher's official one. The closer the match, the higher the grade.

To do this, we rely on a few core metrics. While the math behind them can get complex, the ideas are surprisingly straightforward. The two most common ones you'll hear about are the Dice Score and Intersection over Union (IoU).

-

Intersection over Union (IoU): Picture two overlapping circles. One is the area identified by the AI, and the other is the ground truth from the expert. IoU measures the area where they overlap and divides it by the total area covered by both circles combined. A perfect score is 1 (perfect match), and a score of 0 means they didn't overlap at all.

-

Dice Score: This metric is a close cousin to IoU but gives a bit more weight to the overlap. It's calculated by doubling the overlapping area and dividing it by the sum of the individual areas. The Dice Score also ranges from 0 to 1 and is a favorite in the medical imaging community.

These scores give us a clear, standardized number to represent how well the model performed. A high score means the AI’s segmentation is a close match to the expert’s, which builds our confidence in its output.

At their core, these metrics aren't just numbers on a spreadsheet; they are a direct measure of trust. A model that consistently earns a high Dice or IoU score has demonstrated that it can "see" anatomy with a precision that rivals a human expert. That's what makes it a dependable tool for clinicians and researchers.

Why Accurate Measurement Is So Crucial

The quality of a 3D image segmentation model has a direct, tangible impact on a patient's health. Take radiation oncology, for example. An accurate segmentation is what allows a radiation beam to target a tumor with pinpoint accuracy while sparing the healthy tissue around it. If that boundary is off by even a few millimeters, the consequences could be severe—either healthy organs get zapped, or parts of the tumor are missed.

This need for precision is just as important when monitoring a disease over time. A reliable model that can accurately calculate the changing volume of a brain lesion gives a neurologist hard data to see if a treatment is working. This kind of objective insight is only possible if we've rigorously tested the model's accuracy against that trusted ground truth.

Ultimately, these evaluation metrics are the final gatekeepers. They’re what determines if an AI system is just a promising research idea or a truly dependable tool ready to help medical professionals make critical, life-altering decisions.

The Future of Automated 3D Analysis

The world of 3D image segmentation isn't sitting still; it's an exciting frontier that’s constantly being redrawn by new ideas and sheer computational horsepower. While the models we have today are already delivering impressive results, the research community is already wrestling with the next generation of problems. The aim is to create automated analysis that's not just faster and more accessible, but smarter, too—moving beyond simply segmenting what's there to anticipating needs in real-time medical and industrial settings.

This push forward is born out of necessity. We have to clear some significant hurdles first, the biggest of which is the "data bottleneck." Our best deep learning models are incredibly data-hungry, often needing thousands of painstakingly hand-labeled 3D scans to learn their job. This annotation work is slow, expensive, and requires a massive amount of an expert's time, which is a major roadblock for developing models for rare diseases or novel imaging methods.

Another nagging issue is image quality. Let's be honest, real-world scans are rarely perfect. They can be "noisy," filled with random visual static that hides important details, or have low contrast, making the borders between tissues frustratingly blurry. Today's models can falter under these conditions, so this is a huge area for improvement.

Pushing the Boundaries of Learning

To get around the data bottleneck, some of the most promising work is happening in semi-supervised and weakly-supervised learning. Think of it like training an apprentice. You wouldn’t stand over their shoulder guiding every single move. Instead, you'd show them a few perfect examples, then give them high-level feedback as they practice on their own.

That’s the basic idea behind these clever learning methods.

- Semi-Supervised Learning: Here, a model trains on a small, core set of perfectly labeled scans. It then takes that initial knowledge and applies it to a much larger pile of unlabeled data, making its own predictions and learning from the patterns it finds.

- Weakly-Supervised Learning: This takes it a step further by learning from much simpler labels. For instance, instead of needing a perfect, voxel-by-voxel outline of a tumor, the model might only get a simple box drawn around it or a single point showing its general location.

These approaches drastically cut down the need for expert annotators, which means we can build powerful 3D image segmentation models much faster and for a lot less money. They’re a huge leap toward making AI more scalable and adaptable for a whole host of clinical challenges.

This shift in learning is all about making AI work smarter, not just harder. When we design models that can learn effectively with less hand-holding, we open the door to applying segmentation to countless niche medical problems that were just too data-intensive to even consider before.

The Drive for Real-Time Performance

Beyond pure accuracy, the next great leap for 3D image segmentation is speed. A model that can process a scan in a few minutes is great, but many future applications need answers in seconds. This demand for real-time segmentation is on the verge of unlocking completely new uses, especially in live, interactive procedures.

Imagine a surgeon using a robotic arm. An AI could constantly segment the live video from an internal camera, highlighting delicate nerves or blood vessels in real-time to help prevent accidental nicks. This would give the surgeon an intelligent, dynamic guide right in the middle of a critical operation.

Or think about interventional radiology. A doctor performing a biopsy could watch a 3D model of a patient's organ update instantly as they guide the needle. This live feedback loop would help them hit the exact right spot with incredible precision.

This future isn’t some far-off fantasy. Thanks to more efficient model designs and specialized hardware, real-time analysis is quickly becoming a reality. It’s set to change 3D image segmentation from a static, offline tool into a dynamic, interactive partner in the operating room. The ultimate goal is a seamless blend of human skill and machine perception that elevates the standard of care.

Answering Your Top Questions About 3D Segmentation

To wrap things up, let's tackle a few common questions that come up when people first dive into 3D image segmentation. Think of this as a quick-reference guide to help solidify the key ideas.

How Does 3D Segmentation Differ From 2D?

Imagine you have a flipbook telling a short story. 2D segmentation is like looking at a single, isolated page. It’s analyzing one flat slice of information—like a single X-ray or one slide from a CT scan. It’s useful, but you're missing the full context.

3D segmentation, on the other hand, is like watching the entire flipbook in motion. It processes the whole volume of data at once, understanding how every slice connects to the next. This gives you a complete, three-dimensional picture, letting you see exactly how a tumor is shaped or how a blood vessel weaves through an organ. You just can't get that level of depth from a single 2D image.

What Kind of Hardware Do You Need for 3D Segmentation?

The answer really depends on the complexity of the technique you're using. Some of the older, more traditional algorithms can get by on a standard computer processor (CPU) without much fuss.

But when you step into the world of modern deep learning, the game changes. These sophisticated models are incredibly power-hungry and rely almost exclusively on high-end Graphics Processing Units (GPUs).

The parallel processing architecture of a GPU is what makes it possible to train these massive models on huge datasets and run them quickly enough to be useful in a real-world setting. Without a powerful GPU, the computations would simply take too long.

Is 3D Segmentation Used Anywhere Besides Medicine?

Absolutely. While its impact on medicine is undeniable, 3D segmentation is a crucial tool in any field where understanding an object's internal or external structure is important.

- Autonomous Driving: Self-driving cars use LiDAR scanners to create a live 3D map of their surroundings. Segmentation helps the car's AI tell the difference between a pedestrian, a fire hydrant, and another vehicle.

- Geology: Scientists use it to analyze seismic data, segmenting different layers of rock and sediment to pinpoint potential oil or gas reserves deep underground.

- Materials Science: Engineers can inspect the inside of a metal component without cutting it open, using segmentation to find microscopic stress fractures or defects that could lead to failure.

At PYCAD, we live and breathe this technology. We focus on putting the power of AI into the hands of medical innovators, offering everything from expert data annotation to full model deployment. If you're building a medical device or pushing the boundaries of research, we can help you implement the precise 3D segmentation solutions you need.