Medical image segmentation is all about taking a digital medical image—like an MRI or a CT scan—and dividing it into meaningful pieces. Think of it as digitally outlining specific anatomical structures like organs, tissues, or tumors, making them stand out for closer inspection by clinicians or analysis by software.

What Is Medical Image Segmentation?

Picture a radiologist staring at a complex MRI of the brain. It's a swirl of grayscale pixels, and their job is to trace the exact boundary of a small tumor. Medical image segmentation is what automates that painstaking process. It's like having a highly skilled digital cartographer for the human body who can draw a precise map, giving every single pixel an identity.

This isn't just about drawing lines. It transforms a flat, static image into a structured dataset packed with quantifiable information. Instead of just a picture, doctors get the exact volume of a heart ventricle, a clear outline of a lung, or the defined borders of a cancerous lesion. This ability is a cornerstone of how modern medicine diagnoses and plans treatments.

From X-Rays to AI-Powered Maps

We didn't get here overnight. The journey really kicked off with Wilhelm Röntgen's discovery of X-rays back in 1895, but it took nearly a century of innovation to get where we are. The arrival of CT in the early 1970s, followed by PET later that decade, gave us detailed cross-sectional views inside the body.

The real game-changer, though, was the shift to digital imaging in the 1980s and 1990s, thanks to standards like DICOM. That digital foundation paved the way for the powerful AI algorithms we rely on today.

At its core, medical image segmentation answers one simple question for every pixel in a scan: "What part of the body is this?" Answering that brings clarity to incredible complexity.
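To make that idea concrete, here is a minimal sketch of what a segmentation result looks like in code: a label map, which is an array with the same shape as the image where each pixel stores an integer class ID. The tiny array, the label IDs, and the `LABELS` lookup table are hypothetical and exist only for illustration.

```python
import numpy as np

# Hypothetical label IDs, for illustration only (not a standard scheme).
LABELS = {0: "background", 1: "liver", 2: "tumor"}

# A tiny 4x5 slice after segmentation: one integer label per pixel.
label_map = np.array([
    [0, 0, 1, 1, 0],
    [0, 1, 1, 2, 0],
    [0, 1, 2, 2, 0],
    [0, 0, 1, 0, 0],
])

def describe(label_map: np.ndarray) -> dict[str, int]:
    """Count how many pixels were assigned to each named structure."""
    ids, counts = np.unique(label_map, return_counts=True)
    return {LABELS[int(i)]: int(c) for i, c in zip(ids, counts)}

print(describe(label_map))  # {'background': 11, 'liver': 6, 'tumor': 3}
```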

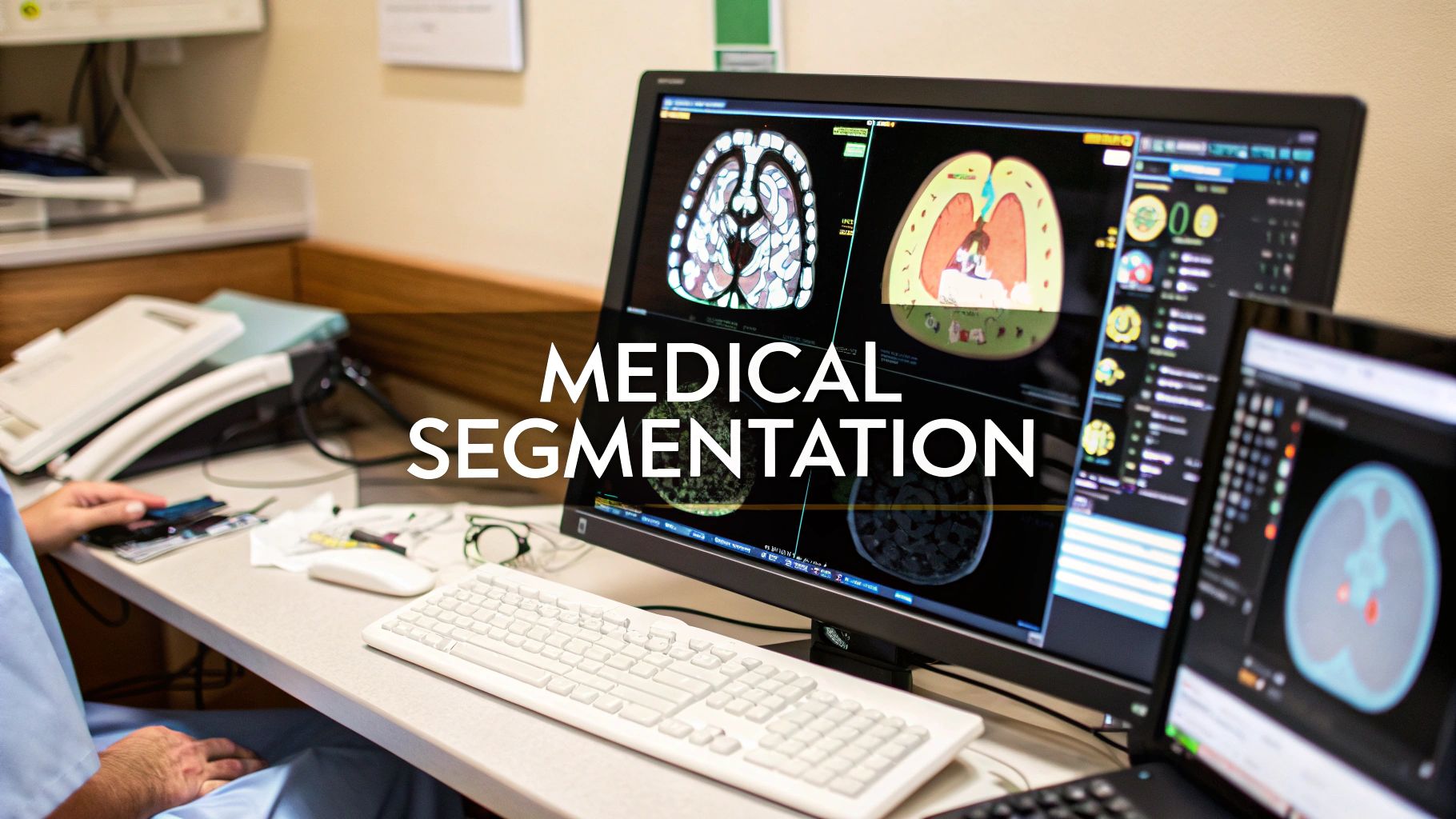

Visualizing the Segmentation Process

The image above shows this idea in action. You can see an original photograph and how different segmentation techniques can pull specific objects out from the background.

Even though this is a regular photo, the principle is exactly the same for a medical scan. The algorithm learns to separate the "foreground"—the anatomy we care about—from the "background," leaving a clean mask that highlights only what’s important.
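In code, that "clean mask" is usually just a boolean array with the same shape as the image. The NumPy sketch below assumes the mask has already been produced by some segmentation method (here it is hand-written, and the intensity values are made up) and shows how it isolates the foreground.

```python
import numpy as np

# Hypothetical 3x4 grayscale image (values are illustrative).
image = np.array([
    [10, 12, 200, 210],
    [11, 190, 205, 13],
    [9, 14, 12, 10],
])

# Boolean foreground mask: True where the structure of interest is.
mask = np.array([
    [False, False, True, True],
    [False, True, True, False],
    [False, False, False, False],
])

# Keep foreground intensities and zero out the background.
foreground = np.where(mask, image, 0)

# Boolean indexing pulls out only the foreground pixels, e.g. for statistics.
values = image[mask]
print(foreground)
print(values.mean())  # 201.25
```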

This first step is crucial for so many clinical applications. It gives software and clinicians the power to:

- Measure with Precision: Accurately calculate the size, shape, and volume of an organ or a tumor.

- Monitor Change Over Time: Objectively track whether a tumor is growing or shrinking between scans.

- Plan Medical Interventions: Build 3D models from segmented images to guide a surgeon's hands or map out radiation therapy.
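Measuring with precision usually comes down to counting the voxels in a mask and multiplying by the physical size of one voxel. A minimal sketch, assuming a boolean 3D mask and a voxel spacing in millimeters; in practice the spacing comes from the scan's header, while the values here are made up.

```python
import numpy as np

def mask_volume_ml(mask: np.ndarray, spacing_mm: tuple[float, float, float]) -> float:
    """Volume of a binary 3D mask in milliliters.

    spacing_mm is the physical voxel size (z, y, x) in millimeters.
    1 mL = 1 cm^3 = 1000 mm^3.
    """
    voxel_volume_mm3 = float(np.prod(spacing_mm))
    return int(mask.sum()) * voxel_volume_mm3 / 1000.0

# Hypothetical example: a 10 x 20 x 20 block of "tumor" voxels.
mask = np.zeros((40, 64, 64), dtype=bool)
mask[10:20, 20:40, 20:40] = True

print(mask_volume_ml(mask, spacing_mm=(2.5, 0.8, 0.8)))  # 4000 voxels * 1.6 mm^3 = 6.4 mL
```

Tracking change over time is then a matter of computing this number for each scan and comparing them.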

Ultimately, medical image segmentation provides the detailed anatomical map needed for accurate diagnoses, effective treatments, and new research. It turns raw, messy pixel data into clear, actionable medical insight.

The AI Models That Power Segmentation

The real power behind medical image segmentation comes from sophisticated AI models built to interpret medical scans with incredible precision. In the early days, computer vision relied on simpler methods like thresholding (telling a computer to separate pixels based on how bright they are) and edge detection (finding sharp changes in brightness). But the true game-changer was the arrival of deep learning.
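For comparison with the deep learning approaches that follow, here is what classic thresholding looks like: a hand-picked intensity cutoff decides which pixels count as foreground. The CT values (in Hounsfield units, HU) are made up, and the 300 HU bone cutoff is an illustrative choice, not a clinical protocol.

```python
import numpy as np

def threshold_segment(image: np.ndarray, low: float, high: float = np.inf) -> np.ndarray:
    """Rule-based segmentation: foreground = pixels with low <= value <= high."""
    return (image >= low) & (image <= high)

# Hypothetical CT slice in HU: air, soft tissue, and bone-like values.
ct_slice = np.array([
    [-1000, 40, 60],
    [50, 700, 1200],
    [30, 450, 20],
])

# Illustrative hand-coded rule: anything at or above 300 HU is "bone".
bone_mask = threshold_segment(ct_slice, low=300)
print(bone_mask.astype(int))
```

The fixed rule is also what makes the method brittle: anything else that crosses the cutoff, such as a metal implant or a contrast-filled vessel, gets labeled as bone too.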

This newer breed of algorithms, especially Convolutional Neural Networks (CNNs), completely changed the landscape. Instead of being manually programmed to find specific features, a CNN learns to spot patterns on its own by analyzing thousands of labeled images. It works a bit like the human visual cortex, using layers of digital "neurons" to build up an understanding of an image, from simple edges and textures to complex anatomical structures.
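The "simple edges" that early CNN layers respond to can be illustrated with a single 3x3 filter. This is only a sketch: the vertical-edge kernel below is hand-set (Sobel-like) as an assumption, whereas a real CNN learns its filter weights from labeled data, and deep learning libraries implement the operation far more efficiently.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2D cross-correlation with no padding ("valid" mode), the core op of a CNN layer."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the neighborhood currently under the kernel.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Dark region on the left, bright region on the right: one vertical edge.
image = np.array([
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
], dtype=float)

# Hand-set vertical-edge detector; a trained CNN would learn its own weights.
kernel = np.array([
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
], dtype=float)

response = conv2d_valid(image, kernel)
print(response)  # large values only where the window straddles the edge
```

Deeper layers apply the same operation to the outputs of earlier ones, which is how simple edge responses get combined into textures and, eventually, anatomical shapes.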

U-Net: The Workhorse of Medical Segmentation

In the world of CNNs, one architecture has become the de facto standard for medical image segmentation: U-Net. Introduced in 2015, it was a huge leap forward, designed specifically to handle the high-stakes precision required in biomedical imaging.

You can think of the U-Net model as having two parts that work together seamlessly: an encoder and a decoder.

- The Encoder (The Context Gatherer): This first half of the network acts like a summarizer. It processes the input image through a series of layers, gradually reducing its spatial resolution while capturing the big-picture context. It's focused on answering the question, "What am I looking at in general?" For instance, it learns the kind of context that distinguishes liver tissue from the surrounding anatomy.

- The Decoder (The Detail Artist): The second half takes that summarized information and progressively upsamples it, layer by layer, back to the original resolution, producing a full-size segmentation map. Its job is to answer the question, "Okay, but exactly where are the boundaries of this structure?"

U-Net's key innovation is its "skip connections." These are shortcuts that feed feature maps from the early, detail-rich layers of the encoder directly to the corresponding layers of the decoder, where they are combined with the upsampled features. This gives the "detail artist" access to fine-grained spatial information that would otherwise be lost during the summarizing process. The result is a segmentation map that is both contextually aware and spatially precise.
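The encoder, decoder, and skip-connection pattern can be traced at the level of array shapes. The NumPy sketch below is not a trainable U-Net: it swaps learned convolutions for fixed operations (2x2 max pooling to downsample, nearest-neighbor repetition to upsample) purely to show how a skip connection concatenates high-resolution encoder features with upsampled decoder features along the channel axis. The 8-channel, 64x64 feature map is a hypothetical stand-in.

```python
import numpy as np

def max_pool_2x2(x: np.ndarray) -> np.ndarray:
    """Downsample a (channels, H, W) array by taking the max of each 2x2 block (H, W even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample_2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbor upsampling: repeat each value into a 2x2 block."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

# Hypothetical features from an early, high-resolution encoder layer.
rng = np.random.default_rng(0)
enc_features = rng.random((8, 64, 64))

# Encoder step: lower resolution, more context per value.
bottleneck = max_pool_2x2(enc_features)  # (8, 32, 32)

# Decoder step: bring the resolution back up.
decoded = upsample_2x(bottleneck)  # (8, 64, 64)

# Skip connection: stack encoder detail with decoder output on the channel axis.
merged = np.concatenate([enc_features, decoded], axis=0)  # (16, 64, 64)

print(bottleneck.shape, decoded.shape, merged.shape)
```

In a real U-Net, learned convolutions between these steps change the channel counts and the upsampling is typically learned as well, but the concatenation pattern is the same: the decoder sees both the coarse summary and the original fine detail.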

U-Net's design strikes a critical balance. It understands the 'what' (the organ or lesion) and the 'where' (its precise location, down to the pixel) simultaneously, a combination that makes it exceptionally effective for medical tasks.

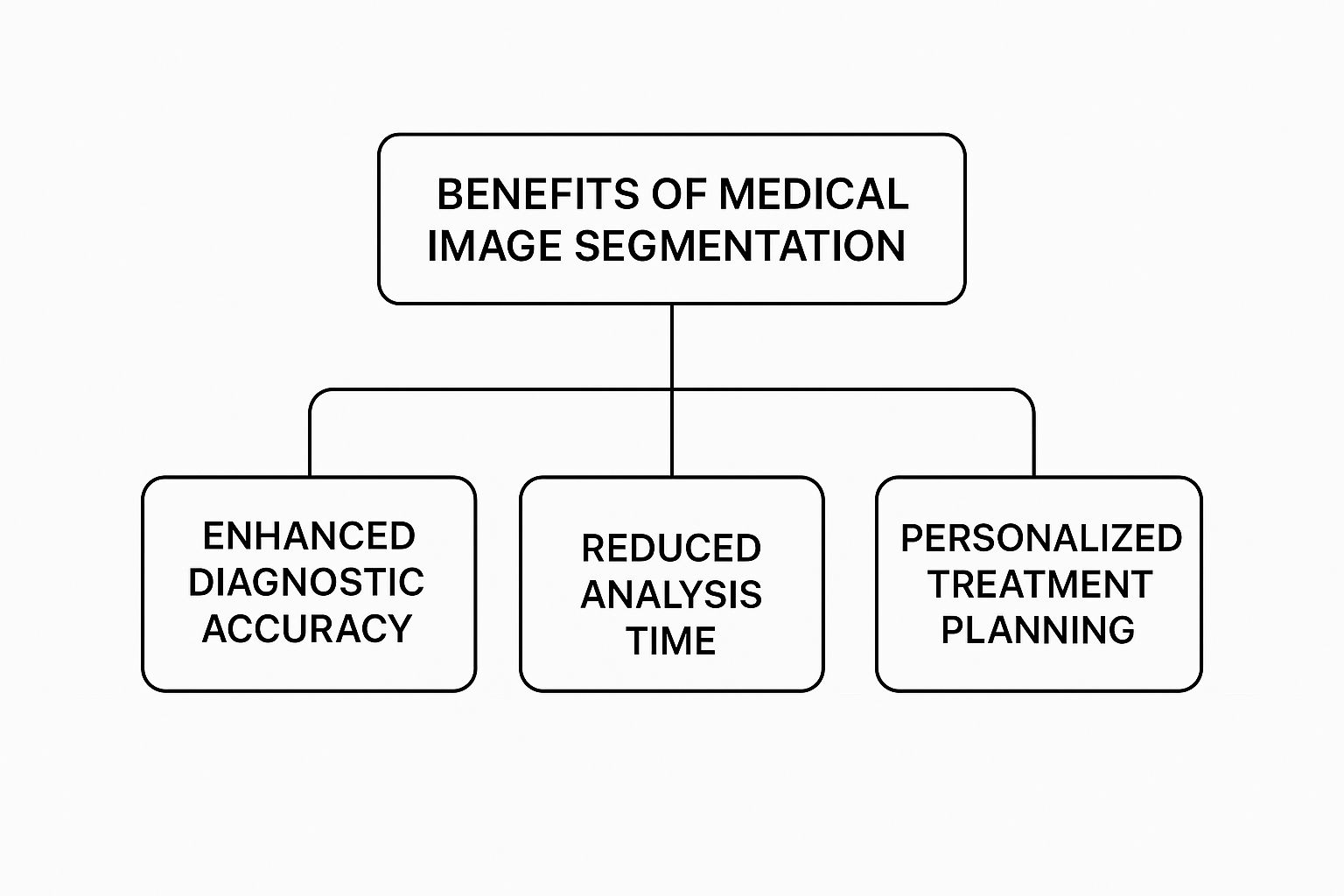

The benefits of models like U-Net in a clinical setting are massive, as this infographic shows.

As you can see, the precision these AI models offer can translate into more accurate diagnoses, much faster analysis, and more personalized treatment plans for each patient.

The table below offers a quick comparison between older computer vision methods and the deep learning models we use today.

Comparison of Segmentation Algorithm Types

| Technique Type | Core Principle | Strengths | Limitations |
|---|---|---|---|
| Traditional Computer Vision | Relies on hand-coded rules based on pixel properties like intensity, color, or texture. | Fast for simple tasks; doesn't require large datasets for training. | Brittle and inflexible; struggles with complex or varied images; requires expert tuning. |
| Deep Learning (e.g., U-Net) | Learns features automatically from vast amounts of labeled data through neural networks. | Highly accurate and robust; adapts well to different image types; can identify complex patterns. | Requires significant data for training; computationally intensive; can be a "black box." |

It's clear that while traditional methods had their place, deep learning models have unlocked a new level of capability.

From 2D Slices to 3D Volumes

The original U-Net was designed for 2D images, so volumetric scans had to be analyzed one slice at a time. But modern imaging, like CT and MRI, gives us rich, three-dimensional data. To handle this, newer variants like 3D U-Net were developed. They apply the same core ideas with 3D operations, analyzing the whole volume (or large 3D patches of it) at once so the model can use context from neighboring slices. This often leads to more accurate and more consistent results.
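One way to see why 3D processing helps is to compare a neighborhood operation applied slice by slice with the same operation applied to the whole volume. The sketch below uses a simple 3x3 (2D) versus 3x3x3 (3D) mean filter as a stand-in for a convolution; it is an illustrative assumption, not how 3D U-Net itself is implemented.

```python
from itertools import product

import numpy as np

def box_mean(volume: np.ndarray, size: tuple[int, ...]) -> np.ndarray:
    """Mean over a centered neighborhood of the given (odd) size, with zero padding."""
    pads = [(s // 2, s // 2) for s in size]
    padded = np.pad(volume, pads)
    out = np.zeros(volume.shape, dtype=float)
    # Sum one shifted copy of the padded array per neighborhood offset.
    for offset in product(*(range(s) for s in size)):
        window = tuple(slice(o, o + n) for o, n in zip(offset, volume.shape))
        out += padded[window]
    return out / np.prod(size)

# A single bright voxel in slice 2 of a 5-slice volume (slices, rows, cols).
volume = np.zeros((5, 5, 5))
volume[2, 2, 2] = 27.0

# 2D processing: filter each slice independently (in-plane neighborhood only).
slice_wise = np.stack([box_mean(s, (3, 3)) for s in volume])

# 3D processing: one neighborhood that also spans the adjacent slices.
volumetric = box_mean(volume, (3, 3, 3))

print(slice_wise[1].max(), volumetric[1].max())  # neighbor slice: 0.0 (2D) vs 1.0 (3D)
```

The slice-wise filter never "sees" the structure in the neighboring slice, while the volumetric one does. 3D convolutions give a network that same cross-slice view, at the cost of more memory and computation.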

These advanced architectures have produced major gains in performance. In this field, success is often measured with the Dice coefficient, which quantifies the overlap between the AI's segmentation and an expert-drawn reference (the "ground truth"): a score of 1.0 means a perfect match and 0 means no overlap at all. Many modern models now reach Dice scores of 0.85 to 0.90 or higher on challenging medical datasets, which makes them strong candidates for real-world clinical use.
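The Dice coefficient is straightforward to compute. For a predicted mask A and a reference mask B it is 2·|A ∩ B| / (|A| + |B|): twice the number of overlapping pixels divided by the total number of pixels in both masks. A minimal NumPy sketch follows; the convention that two empty masks score 1.0 is an assumption, since tools differ on that edge case.

```python
import numpy as np

def dice(pred: np.ndarray, truth: np.ndarray) -> float:
    """Dice similarity coefficient between two binary masks: 2|A∩B| / (|A| + |B|)."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    total = pred.sum() + truth.sum()
    if total == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    intersection = np.logical_and(pred, truth).sum()
    return float(2.0 * intersection / total)

truth = np.zeros((10, 10), dtype=bool)
truth[2:8, 2:8] = True  # 36-pixel reference "lesion"
pred = np.zeros((10, 10), dtype=bool)
pred[3:9, 2:8] = True  # same size, shifted down by one row

print(round(dice(pred, truth), 3))  # 0.833
```

Shifting a 36-pixel square by a single row already drops the score to about 0.83, which is one reason Dice scores on small structures tend to be lower than on large organs.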

Of course, AI's impact isn't limited to drawing lines on images. Similar breakthroughs are happening across medicine. For example, AI-powered medical speech recognition is helping automate clinical notes and free doctors from tedious paperwork. It's all part of a broader shift where AI is becoming an indispensable partner in healthcare.

How Segmentation Is Used in Patient Care

While the theory behind these AI models is interesting, the real story is their direct impact on patients' lives. This isn't some far-off, futuristic concept; image segmentation is already at work in hospitals and clinics today, fundamentally changing how we diagnose, treat, and monitor disease. It's the crucial link that turns raw pixel data from a scan into clear, actionable clinical insights.

Think of it as a high-powered lens that gives doctors the ability to see and measure our anatomy with incredible clarity. From the oncology ward to the cardiac unit, this precision leads to more personalized treatment plans, less invasive procedures, and, most importantly, better outcomes. Let’s dive into some of the most impactful ways it’s being used right now.

Revolutionizing Cancer Treatment in Oncology

Nowhere is the power of medical image segmentation more obvious than in oncology. The goal of a radiation oncologist is simple to state but incredibly difficult to achieve: deliver a lethal dose of radiation to a cancerous tumor while sparing as much of the surrounding healthy tissue as possible. It's a task that demands millimeter-level accuracy.

Not long ago, this meant an oncologist had to sit and manually draw the boundaries of tumors and critical organs on dozens, sometimes hundreds, of individual CT scan slices. The process was painstakingly slow—often taking hours for complex cases—and was prone to what we call "inter-observer variability." Simply put, two different doctors might outline the very same tumor in slightly different ways.

Automated segmentation completely changes the game. An AI model can now delineate a tumor and nearby organs-at-risk (like the spinal cord or salivary glands) with remarkable precision in just a few minutes. This speed and consistency make it more practical to use advanced radiation techniques like Stereotactic Body Radiation Therapy (SBRT), which delivers highly focused, high-dose beams of radiation.

The benefits here are huge:

- Maximizing Tumor Destruction: By ensuring the entire tumor volume is accurately targeted, the chances of wiping out all the cancer cells go way up.

- Minimizing Side Effects: Sparing healthy tissue means reducing the often-debilitating side effects of radiation, which dramatically improves a patient's quality of life.

"In radiation oncology, accurate segmentation is the foundation of a safe and effective treatment plan. AI-driven tools give us the confidence to treat tumors more aggressively while protecting the patient."

Segmentation is also vital for checking if a treatment is working. By comparing segmented tumor volumes from scans taken before and after treatment, oncologists can objectively measure whether a tumor is shrinking, stable, or growing, letting them fine-tune their strategy on the fly.
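Comparing two segmentations over time reduces to comparing their volumes. The minimal sketch below assumes the volumes in milliliters have already been computed from each scan's mask. The ±20% cutoffs for calling a change "shrinking" or "growing" are hypothetical placeholders, not a clinical response criterion.

```python
def volume_change(before_ml: float, after_ml: float) -> float:
    """Relative change in tumor volume between two scans, as a percentage."""
    if before_ml <= 0:
        raise ValueError("baseline volume must be positive")
    return 100.0 * (after_ml - before_ml) / before_ml

def classify_response(before_ml: float, after_ml: float, threshold_pct: float = 20.0) -> str:
    """Label the change using a hypothetical symmetric percentage threshold."""
    change = volume_change(before_ml, after_ml)
    if change <= -threshold_pct:
        return "shrinking"
    if change >= threshold_pct:
        return "growing"
    return "stable"

print(volume_change(12.0, 7.5))       # -37.5
print(classify_response(12.0, 7.5))   # shrinking
print(classify_response(12.0, 12.9))  # stable
```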

Enhancing Diagnostics in Cardiology

When it comes to the heart, understanding its structure and function is everything. Medical image segmentation gives cardiologists the tools to quantify cardiac performance with incredible detail, using scans like cardiac MRIs and echocardiograms.

For example, one of the most vital metrics for heart health is the ejection fraction (EF). This number measures the percentage of blood in the left ventricle that is pumped out with each beat. To calculate it, a doctor needs to know the volume of the ventricle when it's full (end-diastole) and when it has just contracted (end-systole).

Trying to manually trace the borders of a moving ventricle on a scan is incredibly challenging and can lead to inconsistent results. AI-powered segmentation automates this, delivering fast and reproducible volume calculations. This leads to far more reliable EF measurements, which are critical for diagnosing conditions like heart failure and deciding on the best course of action.
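Once the left ventricle has been segmented at end-diastole and end-systole, the ejection fraction follows directly from the two volumes: EF = (EDV - ESV) / EDV × 100%, where EDV and ESV are the end-diastolic and end-systolic volumes. The sketch below assumes boolean 3D masks and a known voxel spacing; the box-shaped "ventricles" and the 1 mm spacing are synthetic.

```python
import numpy as np

def volume_ml(mask: np.ndarray, spacing_mm: tuple[float, float, float]) -> float:
    """Volume of a binary 3D mask in mL (1 mL = 1000 mm^3)."""
    return int(mask.sum()) * float(np.prod(spacing_mm)) / 1000.0

def ejection_fraction(ed_mask: np.ndarray, es_mask: np.ndarray,
                      spacing_mm: tuple[float, float, float]) -> float:
    """EF (%) = (EDV - ESV) / EDV * 100, with volumes taken from the two segmentations."""
    edv = volume_ml(ed_mask, spacing_mm)
    esv = volume_ml(es_mask, spacing_mm)
    if edv == 0:
        raise ValueError("end-diastolic mask is empty")
    return 100.0 * (edv - esv) / edv

# Synthetic masks on a 1 mm isotropic grid; the ventricle is a simple box.
spacing = (1.0, 1.0, 1.0)
ed = np.zeros((60, 60, 60), dtype=bool)
ed[5:55, 10:50, 10:50] = True  # 50*40*40 = 80,000 mm^3 = 80 mL
es = np.zeros_like(ed)
es[5:55, 15:45, 15:43] = True  # 50*30*28 = 42,000 mm^3 = 42 mL

print(round(ejection_fraction(ed, es, spacing), 1))  # 47.5
```

Because both volumes come from the same automated method, the resulting EF is reproducible: running it twice on the same scan gives the same number.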

Other key cardiac applications include:

- Assessing Myocardial Scarring: Segmenting scar tissue within the heart muscle after a heart attack helps doctors predict a patient's risk of future cardiac events.

- Quantifying Aortic Aneurysms: Precisely segmenting the aorta allows for exact measurement and monitoring of aneurysms, helping determine the critical moment when surgery is necessary.

Tracking Disease Progression in Neurology

The brain is an amazingly complex organ, and diseases like multiple sclerosis (MS) or brain tumors demand constant, careful monitoring. In neurology, medical image segmentation has become an essential tool for tracking even the most subtle changes over time.

In MS, the disease causes lesions to form in the brain and spinal cord. Keeping track of the number, volume, and location of these lesions is absolutely essential for understanding how the disease is progressing and seeing if a patient's medication is working.

Imagine trying to manually identify and measure these tiny, scattered lesions across multiple MRI scans; it's a monumental and tedious task. Segmentation algorithms can automate this whole process, detecting and quantifying lesions with high sensitivity. This gives neurologists the objective data they need to make informed decisions and adjust treatments.
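Turning a lesion mask into a count and per-lesion volumes is typically done with connected-component labeling: voxels that touch are grouped into one lesion. Below is a self-contained sketch using a breadth-first flood fill with 6-connectivity (face neighbors only, an assumption). Libraries such as SciPy offer optimized equivalents like `scipy.ndimage.label`. The mask is synthetic.

```python
from collections import deque

import numpy as np

# Face-adjacent neighbors only (6-connectivity); diagonal contact does not join lesions.
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def lesion_volumes(mask: np.ndarray, voxel_volume_mm3: float = 1.0) -> list[float]:
    """Return the volume (mm^3) of each 6-connected lesion in a binary 3D mask."""
    mask = mask.astype(bool)
    visited = np.zeros(mask.shape, dtype=bool)
    volumes = []
    for start in zip(*np.nonzero(mask)):
        if visited[start]:
            continue
        # Flood-fill the lesion containing this unvisited foreground voxel.
        visited[start] = True
        queue = deque([start])
        size = 0
        while queue:
            z, y, x = queue.popleft()
            size += 1
            for dz, dy, dx in NEIGHBORS:
                n = (z + dz, y + dy, x + dx)
                inside = all(0 <= c < s for c, s in zip(n, mask.shape))
                if inside and mask[n] and not visited[n]:
                    visited[n] = True
                    queue.append(n)
        volumes.append(size * voxel_volume_mm3)
    return volumes

# Synthetic lesion mask with three separate lesions.
mask = np.zeros((10, 10, 10), dtype=bool)
mask[1:3, 1:3, 1:3] = True  # 8 voxels
mask[5, 5, 5:8] = True      # 3 voxels
mask[8, 1, 1] = True        # 1 voxel

vols = lesion_volumes(mask, voxel_volume_mm3=1.0)
print(len(vols), sorted(vols))  # 3 [1.0, 3.0, 8.0]
```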

It's a similar story in neuro-oncology, where segmentation helps track the size of brain tumors with high accuracy. This provides a clear, objective picture of whether a treatment is succeeding, empowering clinicians to personalize care and intervene at the earliest sign of change.

What Stands in the Way of Implementation?

Taking a powerful AI segmentation model from the lab and putting it to work in a real-world clinic is a whole lot harder than it sounds. It’s not just a matter of installing new software. Getting these systems up and running means confronting a few major roadblocks that require serious thought, planning, and investment.

The single biggest bottleneck, almost every time, is the data. Deep learning models have an insatiable appetite for it. To get a segmentation model to perform reliably, you need to feed it a massive, high-quality dataset. And it's not enough to just have a lot of images; each one needs to be meticulously annotated by clinical experts who trace the precise boundaries of organs or tumors. This is a painstaking, expensive, and incredibly slow process.

On top of that, the data has to be diverse. It needs to reflect the vast differences we see in real patients—different ages, body types, and stages of a disease. If a model is only trained on data from one demographic, it could easily fail when presented with a patient from another, leading to dangerous biases.

Making Sure a Model Works Everywhere, Not Just in One Lab

Another huge challenge is what we call generalizability. Think about it: a model trained entirely on MRI scans from a single machine at one hospital might look like a superstar. But the moment you try to use it at a different hospital with a different brand of scanner or slightly different imaging settings, its accuracy can completely fall apart.

This happens because subtle variations in things like image contrast, resolution, or even electronic "noise" can throw off a model that hasn't been trained to expect them. To build a truly robust tool, you need to ensure it can handle the messiness of the real world and generalize across different clinical environments. This often means gathering data from multiple institutions, which opens up a whole new can of worms regarding patient privacy and logistics.
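A small example shows why intensity differences between scanners matter, along with one common mitigation. The sketch assumes two hypothetical scanners that record the same tissue with a different gain and offset, which is a simplification of real scanner differences. A fixed threshold tuned on one scanner fails on the other. Per-scan z-score normalization, a widely used preprocessing step, puts both on a comparable scale.

```python
import numpy as np

def zscore(image: np.ndarray) -> np.ndarray:
    """Normalize one scan to zero mean and unit standard deviation."""
    return (image - image.mean()) / image.std()

# The same underlying tissue pattern...
tissue = np.array([[1.0, 1.0, 4.0],
                   [1.0, 4.0, 4.0],
                   [1.0, 1.0, 1.0]])

# ...as recorded by two hypothetical scanners with different gain and offset.
scan_a = 100.0 * tissue + 20.0  # hospital A
scan_b = 60.0 * tissue + 300.0  # hospital B

# A raw threshold tuned on hospital A does not transfer to hospital B.
raw_a = scan_a > 250
raw_b = scan_b > 250

# After z-score normalization, one threshold works for both.
norm_a = zscore(scan_a) > 0.5
norm_b = zscore(scan_b) > 0.5

print(raw_a.sum(), raw_b.sum())        # 3 vs 9 pixels flagged
print(np.array_equal(norm_a, norm_b))  # True
```

Normalization only corrects simple global intensity shifts. Differences in resolution, noise, or artifacts still call for training data drawn from multiple sites.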

The real measure of a medical AI model isn’t how it performs on a perfect, hand-picked dataset. It’s how it holds up in the chaotic, unpredictable reality of daily clinical work across dozens of different hospitals and patient populations.

The Technical, Regulatory, and Human Hurdles

Beyond the data itself, a few other significant obstacles need to be cleared for any successful implementation.

- Serious Computing Power: Training a top-tier segmentation model, especially for 3D data from a CT scan, demands a staggering amount of computational horsepower. This means a hefty investment in high-end GPUs and the infrastructure to keep them running.

- The Regulatory Maze: As they should be, medical AI tools are scrutinized heavily for safety and effectiveness. Getting approval from bodies like the FDA is a long, expensive, and rigorous journey filled with validation studies and mountains of documentation.

- Fitting into the Workflow: An AI tool is worthless if it's a pain for clinicians to use. It has to plug seamlessly into the hospital's existing systems, like the Picture Archiving and Communication Systems (PACS), and have an interface that feels natural to a busy doctor, not a computer scientist.

- The Trust Factor: Ultimately, you have to win over the people who will use it. Clinicians are rightfully skeptical of "black box" AI where the reasoning is a complete mystery. This is why developing interpretable AI—models that can explain why they made a particular segmentation—is so crucial. Building that trust is the final, essential step to getting this technology into the hands of those who can use it to save lives.
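To put the computing-power point in perspective, a back-of-the-envelope calculation of memory for one 3D CT volume and a single layer of feature maps helps. The volume size (512 x 512 x 300 voxels), the 32 channels, and 32-bit floats are illustrative assumptions. A real network stores many such layers, plus gradients during training, which is why 3D models are usually trained on smaller patches.

```python
def tensor_bytes(shape: tuple[int, ...], bytes_per_value: int = 4) -> int:
    """Memory needed to store a dense array of the given shape (float32 by default)."""
    total = bytes_per_value
    for dim in shape:
        total *= dim
    return total

GIB = 1024 ** 3

ct_volume = (512, 512, 300)        # hypothetical CT size in voxels
feature_map = (32, 512, 512, 300)  # one 32-channel layer at full resolution

print(tensor_bytes(ct_volume) / GIB)    # 0.29296875 GiB for the raw volume
print(tensor_bytes(feature_map) / GIB)  # 9.375 GiB for a single feature map
```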

What's Next for Intelligent Medical Imaging?

Medical image segmentation isn't just getting better; it's getting smarter. We're moving away from analyzing single images in isolation and toward a future where AI synthesizes massive amounts of data to make diagnostics more predictive and personalized, all while being seamlessly woven into the day-to-day work of clinicians.

The next leap forward is about creating a truly holistic view of a patient's health. Instead of just looking at one scan from one moment in time, the systems of tomorrow will pull together information from multiple sources to paint a much richer, more dynamic picture.

Seeing the Whole Picture with Multi-Modal Imaging

One of the most promising frontiers is multi-modal imaging. You can think of it like giving a doctor a new set of senses. A standard MRI is fantastic at showing the structure of soft tissue—the "what." But a PET scan reveals metabolic activity—the "how it's behaving."

Each scan tells a piece of the story on its own. But when you train an AI model to fuse them together, you get a single, unified view that's far more insightful than either scan alone. This allows a clinician to see not only the exact location of a tumor from the MRI but also to pinpoint its most metabolically active, and often most aggressive, regions from the PET scan. That kind of combined insight is gold for planning targeted biopsies or aiming radiation precisely where it's needed most.
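One common way to fuse modalities for a segmentation network is early fusion. Co-registered scans are normalized separately and stacked as input channels, much like the red, green, and blue channels of a photo. The sketch assumes the MRI and PET volumes are already registered and resampled to the same grid, which is a substantial step in practice. It uses random arrays as stand-ins for real scans.

```python
import numpy as np

def normalize(volume: np.ndarray) -> np.ndarray:
    """Z-score one modality so MRI and PET intensities share a comparable scale."""
    return (volume - volume.mean()) / (volume.std() + 1e-8)

def early_fusion(*modalities: np.ndarray) -> np.ndarray:
    """Stack co-registered volumes into a (channels, D, H, W) network input."""
    shapes = {m.shape for m in modalities}
    if len(shapes) != 1:
        raise ValueError(f"modalities must share one grid, got {shapes}")
    return np.stack([normalize(m) for m in modalities], axis=0)

rng = np.random.default_rng(42)
mri = rng.normal(600.0, 150.0, size=(32, 64, 64))  # stand-in for an MRI volume
pet = rng.gamma(2.0, 1.5, size=(32, 64, 64))       # stand-in for PET uptake values

fused = early_fusion(mri, pet)
print(fused.shape)  # (2, 32, 64, 64)
```

A segmentation network that takes this two-channel input can then learn features that depend on anatomy and metabolic activity at the same time.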

Training Smarter, Safer Models with Federated Learning

One of the biggest hurdles in building great AI has always been getting enough diverse data without violating patient privacy. Federated learning provides a clever way around this. Instead of collecting sensitive patient data in one central database, the AI model travels to each hospital to train on their local data directly.

Federated learning allows a model to learn from a global pool of clinical data without any of that data ever leaving the hospital. It’s a game-changer for building AI that is both powerful and trustworthy.

The model learns from the local scans, and only model updates (such as the learned weights), not the data itself, are sent back and combined with updates from other hospitals. Because raw patient data never leaves the hospital, this approach greatly reduces privacy risk while allowing us to build robust models that work well across different patient demographics and imaging hardware.
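The combining step is often done with federated averaging (FedAvg): the server averages each hospital's model parameters, weighted by how many training examples that hospital used. The minimal sketch below assumes each model is a dict of NumPy arrays and leaves out the local training, communication, and security layers of a real system. The hospitals and numbers are made up.

```python
import numpy as np

def federated_average(updates: list[tuple[dict[str, np.ndarray], int]]) -> dict[str, np.ndarray]:
    """FedAvg: sample-weighted average of parameter dicts from each site.

    `updates` holds (local_parameters, num_local_examples) pairs. Only these
    parameters reach the server; the images used to train them stay on-site.
    """
    total = sum(n for _, n in updates)
    keys = updates[0][0].keys()
    return {k: sum(params[k] * (n / total) for params, n in updates) for k in keys}

# Hypothetical updates from three hospitals after one round of local training.
hospital_a = ({"w": np.array([1.0, 2.0]), "b": np.array([0.5])}, 100)
hospital_b = ({"w": np.array([3.0, 0.0]), "b": np.array([1.5])}, 300)
hospital_c = ({"w": np.array([2.0, 2.0]), "b": np.array([1.0])}, 100)

global_model = federated_average([hospital_a, hospital_b, hospital_c])
print(global_model["w"], global_model["b"])
```

The result is pulled toward hospital B, which contributed the most training examples. In a full system, the averaged model is sent back to every site for the next round.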

Real-Time Guidance and The Rise of Synthetic Data

Looking even further ahead, a couple of other key developments are set to redefine the field.

- Real-Time Segmentation: As computers get faster, AI is making its way into the operating room. Imagine real-time segmentation instantly outlining critical organs and blood vessels during robotic surgery. This gives the surgeon an augmented reality "map" to guide their instruments with superhuman precision.

- Generative AI for Synthetic Data: Data can be hard to come by, especially for rare diseases. Generative AI is now being used to create realistic, high-quality synthetic medical images. This allows developers to train and test their models on vast, perfectly labeled datasets, dramatically speeding up the development of more accurate tools for everyone.

Frequently Asked Questions

As you get deeper into medical image segmentation, a few common questions always seem to pop up. Let's tackle some of the most frequent ones to clear up any confusion.

What Is the Main Difference Between Image Classification and Segmentation?

Think of it this way: image classification looks at a picture and gives you a single, high-level tag. It might tell you, "This is a brain MRI." It's great for sorting images but doesn't offer any detail about what's inside the image.

Image segmentation, on the other hand, is like a highly skilled artist meticulously outlining every important structure within that brain MRI. It assigns a specific label to every single pixel, effectively drawing a precise map of the tumors, tissues, and blood vessels. It’s all about the details.
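The difference also shows up in the shape of a model's output. In the hypothetical sketch below, random numbers stand in for a network's raw scores over three made-up classes. A classifier produces one score per class for the whole image. A segmentation model produces one score per class for every pixel, and picking the highest-scoring class at each pixel yields the label map.

```python
import numpy as np

rng = np.random.default_rng(7)
num_classes, height, width = 3, 128, 128

# Classification: one score per class for the whole image -> a single label.
class_scores = rng.normal(size=num_classes)  # shape (3,)
image_label = int(np.argmax(class_scores))

# Segmentation: one score per class per pixel -> a label for every pixel.
pixel_scores = rng.normal(size=(num_classes, height, width))  # shape (3, 128, 128)
label_map = np.argmax(pixel_scores, axis=0)                   # shape (128, 128)

print(image_label, label_map.shape)
```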

Why Is the U-Net Model So Popular for Medical Images?

The U-Net architecture became a superstar in medical imaging because it was designed specifically for biomedical images. Its unique structure, featuring what are called "skip connections," is brilliant at combining two crucial pieces of information. It understands the big-picture context (like knowing it's looking at a kidney) while also capturing the tiny, pixel-level details needed to draw a precise border around it.

This dual focus makes U-Net incredibly good at producing clean, precise outlines. Plus, it performs surprisingly well even with the smaller datasets that are so common in medical research, which is a massive advantage.

U-Net's magic is its ability to see both the forest and the trees. It recognizes the overall anatomy while simultaneously pinpointing the exact location of the smallest anomalies.

How Accurate Are AI Segmentation Models?

Modern AI models are astoundingly accurate, often matching, or even surpassing, human-level performance on specific, well-defined tasks. It's not uncommon for top-tier models to achieve Dice scores (a common overlap metric) above 0.90.

But there's a catch. That accuracy isn't a given. It depends heavily on:

- The quality and size of the training data

- The complexity of the anatomy being segmented

- The clarity of the original medical image

Garbage in, garbage out still applies. High-quality data is the foundation for high-quality results.

Can Segmentation Be Performed in Real Time?

Absolutely, and it's a field that's moving incredibly fast. While segmenting a complex 3D scan still requires serious computational muscle, highly optimized models running on powerful hardware are making it happen.

Real-time segmentation is a game-changer for image-guided interventions. Imagine a surgeon getting instant feedback on tissue boundaries during robotic surgery, or a radiation machine adapting its beam in real time as a tumor moves when a patient breathes. This is where the technology is heading.

At PYCAD, we help turn these advanced AI concepts into practical clinical tools. We work with medical device companies to manage data, train robust models, and deploy systems that boost diagnostic confidence and streamline workflows. Find out how we can help you build the next generation of medical imaging technology.