Clinical trial data management is the behind-the-scenes workhorse of medical research. At its core, it’s the meticulous process of gathering, cleaning, and safeguarding study data to guarantee it's accurate, reliable, and secure. Think of it as the quality control system for the information that ultimately proves whether a new treatment is safe and effective.

The Blueprint for Medical Discovery

Imagine trying to build a skyscraper without a solid architectural blueprint. You might end up with a weak foundation, misaligned walls, and a structure that’s a disaster waiting to happen. That's exactly what clinical trial data management (CTDM) prevents in medical research. It’s the blueprint that provides the structural integrity a study needs to produce trustworthy results.

Without rigorous data management, even the most promising therapy is built on a shaky foundation. The entire process is far more than just administrative box-ticking; it’s a dynamic lifecycle that starts the moment data is collected and ends only when the final, clean dataset is locked for analysis and submitted to regulators. Every step is deliberately planned to catch errors, protect patient privacy, and deliver a dataset that can withstand intense scrutiny.

Core Components of Data Management

So, what makes for a strong data management plan? It all comes down to a few key pillars that work in concert. These components create a system of checks and balances, ensuring every piece of information collected is ready for statistical analysis and regulatory review. A single weak link can jeopardize the entire study.

The ultimate goal of clinical trial data management is to deliver a high-quality, reliable, and statistically sound dataset. This clean data is the bedrock for evaluating a treatment’s safety and efficacy, which directly impacts patient outcomes and public health.

The work itself involves a huge range of tasks. It starts with designing a database tailored to a specific trial's protocol and extends to standardizing information coming in from different places. For instance, if lab results arrive from sites in the US and Europe, the data team must convert them into uniform units so they can be compared apples-to-apples. This is the kind of detail that makes all the difference.

To truly appreciate how this works, it helps to break the process down into its foundational parts.

These four pillars form the backbone of any successful clinical trial data management strategy.

The Four Pillars of Effective Clinical Trial Data Management

This table summarizes the core components that ensure data integrity and trial success, from initial planning to final submission.

| Pillar | Core Function | Key Objective |

|---|---|---|

| Data Collection | Capturing patient information through electronic or paper forms (eCRFs), wearables, and patient diaries. | To gather accurate and complete raw data directly from clinical sites and participants. |

| Data Validation & Cleaning | Running automated checks and manual reviews to identify and resolve inconsistencies, errors, or missing data. | To ensure the dataset is free of errors and discrepancies before analysis begins. |

| Data Storage & Security | Maintaining the dataset in a secure, centralized system with controlled access and detailed audit trails. | To protect patient confidentiality and comply with regulations like GCP and 21 CFR Part 11. |

| Analysis & Reporting | Locking the final clean dataset and preparing it for statistical analysis and regulatory submission. | To produce a final, verifiable dataset that can be used to draw valid scientific conclusions. |

When these four areas are managed effectively, the result is a clean, reliable dataset that regulatory bodies can trust—and that researchers can use to make meaningful discoveries.

The Core Workflow of Managing Clinical Data

Think of clinical trial data like a river. It starts as thousands of tiny streams—individual data points from clinics, patient diaries, and wearable devices—and our job is to guide them all into a single, clean, and powerful current that can drive scientific discovery. This process, from the first drop of data to the final, analysis-ready dataset, is the very heart of clinical trial data management.

It all kicks off the second a trial goes live. Data starts pouring in from every direction: a nurse enters vitals into an electronic Case Report Form (eCRF), a patient logs their symptoms on a smartphone app, or a wearable sensor streams activity levels. The first challenge is simply to capture it all.

But raw data is messy. It's full of typos, missing fields, and illogical entries. That's where the real work begins. We can't just let the river flow wild; we need to build a system of filters and checks to purify it along the way.

From Raw Entry to Refined Data

The first line of defense is at the point of entry. Modern systems use automated checks to catch obvious mistakes on the spot. For instance, if a clinician tries to enter a blood pressure of "12/8," the system will flag it immediately, knowing it should be closer to "120/80." It's a simple but surprisingly effective way to prevent basic errors from muddying the waters.

After this initial screening, the data flows into a central database for a much deeper clean. This is where experienced data managers dive in, looking for more subtle problems. They might spot inconsistencies across a patient’s record—like a follow-up visit dated before the initial one—and ensure the entire story makes sense. A big part of this is just getting everything in order. For a deeper look into this, you can explore tips for organizing research data effectively.

A key part of this cleanup is Query Management. This is the formal back-and-forth process of flagging a problem (like a missing lab result) and sending a "query" back to the clinical site. The site staff investigates, provides the correct information, and resolves the query. This meticulous dialogue is what guarantees every single data point is trustworthy.

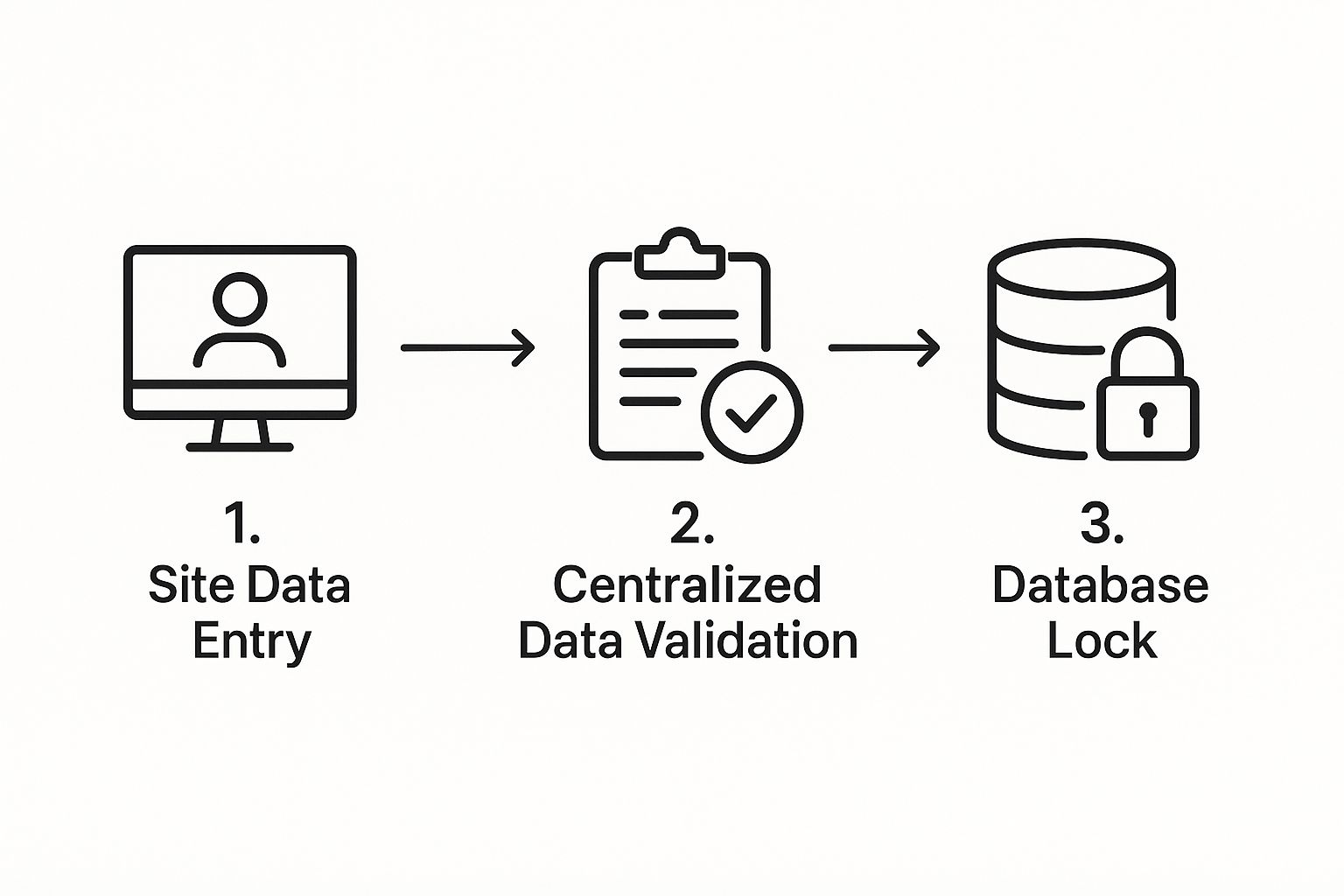

The image below gives you a bird's-eye view of this entire journey, from the raw data entered at a clinic to the final, locked database.

As you can see, it's a step-by-step process of refinement, designed to build a dataset that is solid, reliable, and ready for scrutiny.

Locking Down the Final Dataset

After every query has been resolved and the data is as clean as it can possibly be, we reach the point of no return: database lock. This is a massive milestone. Locking the database makes it read-only, literally freezing it in time so that no more changes can be made. It’s our formal declaration that the data is final and ready to be handed over to the biostatisticians for analysis.

Before we can hit that lock button, there’s a final flurry of activity. It’s a bit like the pre-flight checklist for a space launch—everything has to be perfect.

- Final Data Review: One last sweep to catch any remaining outliers or strange patterns.

- SAE Reconciliation: We cross-reference the Serious Adverse Events reported in the clinical database against the separate safety database to make sure they match perfectly.

- Medical Coding: This is where we standardize all the medical terms. Using dictionaries like MedDRA and WHODrug, we ensure that "heart attack," "myocardial infarction," and "MI" are all coded as the same thing, which is critical for accurate analysis.

- Audit Trail Review: A final check of the logs to confirm that every change made to the data throughout the trial was authorized and properly documented.

Achieving database lock is a huge accomplishment. It means the rigorous work of clinical trial data management has paid off, resulting in a high-quality, unshakeable dataset—the very foundation on which we build valid scientific conclusions.

Navigating the Complex Regulatory Landscape

Managing clinical trial data isn't just about keeping things organized; it’s about successfully navigating a maze of incredibly strict regulations. These rules aren't just bureaucratic red tape. Think of them as essential guardrails, put in place to protect patients, guarantee the integrity of the science, and ultimately, build public trust in medical research.

A great way to look at it is to compare these regulations to the "building codes" for a clinical trial. Just as a building must meet specific safety and stability standards, a clinical trial must follow a precise framework of rules to ensure its findings are sound and its participants are kept safe. If you violate these codes, the entire structure—the trial's results—can be deemed unusable.

The sheer scale of modern research makes this more critical than ever. As of 2025, there are over 450,000 registered clinical trials worldwide. That number is projected to grow by 5.7% every year through 2030. This explosion in research, which covers everything from different trial phases to new medical devices, amplifies the need for specialized data management that can handle a whole host of complex regulatory demands. You can get a better sense of the industry by exploring the top clinical data management service providers for 2025.

Understanding Key Regulatory Standards

While the global regulatory map can feel vast and intimidating, a few key standards form the bedrock of compliant clinical trial data management. Every research team needs to have a firm grasp of what they are and why they matter.

Here are the big three:

-

Good Clinical Practice (GCP): This is the international ethical and scientific gold standard for how trials involving people should be designed, run, recorded, and reported. At its heart, GCP is all about one thing: protecting the rights, safety, and well-being of every single trial participant.

-

FDA 21 CFR Part 11: This specific rule from the U.S. Food and Drug Administration lays out the requirements for electronic records and signatures. It’s what ensures that digital data is just as trustworthy and reliable as old-school paper records by requiring things like secure access controls, audit trails, and fully validated systems.

-

General Data Protection Regulation (GDPR): Enforced across the European Union, GDPR governs exactly how personal data is collected, used, and stored. It gives individuals much greater control over their information and places strict data protection duties on any organization conducting trials in the EU or with EU residents.

These regulations don't operate in a vacuum; they work together to create a secure and ethical research environment. GCP provides the overarching ethical principles, while 21 CFR Part 11 gives you the technical playbook for putting those principles into action in a digital world.

The real-world impact of a rule like 21 CFR Part 11 is profound. Its requirement for secure, computer-generated, time-stamped audit trails means that every single change to the data is tracked. This creates an unalterable digital chain of custody, making the data transparent and verifiable.

Adapting to a Changing Trial Environment

The rise of decentralized clinical trials (DCTs) and multi-national studies has thrown a few new curveballs into the mix. Data is now being collected from patients' homes, through wearable devices, and across international borders. This makes a unified, yet flexible, compliance strategy absolutely essential.

For example, a trial with sites in both the United States and Germany has to comply with both HIPAA and GDPR. When remote interactions are involved, it's crucial to select the right tools, like HIPAA compliant video conferencing platforms, to ensure your practices hold up to scrutiny.

This shift toward global and digital research means that modern data management systems have to be built for agility. They need to handle different consent models, manage data privacy according to local laws, and ensure data integrity, no matter where or how it was collected. A robust, adaptable system is no longer a "nice-to-have"—it's a fundamental necessity for any successful modern research effort.

Best Practices for Modern Data Management

If you're running a clinical trial, think of regulations as your map—they tell you the destination and the major highways. But best practices? That's your GPS, your real-time traffic updates, and your seasoned driving skill all rolled into one. It’s the difference between simply following the rules and executing a trial with precision and efficiency.

These aren't just rigid guidelines; they're the accumulated wisdom of countless research teams. This is what separates world-class clinical trial data management from the rest.

At the very heart of this is the Data Management Plan (DMP). Don't mistake this for a simple checklist you tick off and file away. The DMP is a living, breathing blueprint for the entire study. It spells out everything from where the data will come from and how it will be validated to who is responsible for what. It gets everyone on the same page from day one.

A well-crafted DMP is proactive. It’s designed to spot potential roadblocks and lay out clear instructions for navigating them long before they can cause a traffic jam in your study.

Establish Standardized Data Protocols

Consistency is the absolute foundation of high-quality data. When you’re pulling information from multiple sites, in various formats, entered by different people, the potential for chaos is enormous. That's why standardized data collection protocols are completely non-negotiable.

This means getting granular and defining everything upfront:

- Uniform Measurement Units: All labs must report results using the exact same units. For example, deciding on mg/dL versus mmol/L eliminates dangerous conversion errors down the line.

- Consistent Terminology: You need a common vocabulary. Define specific terms for events and observations so that everyone is truly speaking the same language.

- Standardized Case Report Forms (eCRFs): Every site should use identical electronic forms. This guides data entry and drastically reduces variability between locations.

When you standardize these details, you wipe out ambiguity. The data coming in from Boston becomes instantly and reliably comparable to the data from Berlin.

The goal of standardization is to create a dataset where the only variables are the ones being studied—not the methods used to collect the information. This foundational work is crucial for producing clean, analysis-ready data.

Foster a Collaborative Data Environment

Data silos are the sworn enemy of a successful clinical trial. When your data managers, clinicians, and biostatisticians are all working in their own separate bubbles, crucial insights get missed, and small problems can snowball into major issues. The smart move is to build a deeply collaborative culture where information flows freely between every part of the team.

This kind of teamwork means a clinician’s real-world observations can directly inform the validation rules a data manager builds. In turn, a biostatistician can give early feedback on data trends that might affect the final analysis. It’s this constant feedback loop that catches errors early and lifts the quality of the entire dataset. Of course, sharing this sensitive information demands robust security. You should explore comprehensive strategies for improving patient data security in healthcare IT to build a secure and collaborative framework.

Implement Proactive Quality Control

Waiting until a trial is over to start cleaning the data is a recipe for a painful, expensive mess. The modern approach is all about proactive, ongoing quality control. Think of it less like a post-mortem and more like regular maintenance on a high-performance engine.

This involves a multi-layered approach:

- Automated Edit Checks: The system itself can be your first line of defense, automatically flagging illogical data right at the point of entry.

- Regular Manual Reviews: Data managers should consistently scan incoming data, looking for subtle patterns or errors that an automated check might not catch.

- Risk-Based Monitoring: This is the really smart play. Instead of treating all data as equal, you focus your quality control efforts on the most critical data points—the ones that directly impact the trial's primary endpoints. This concentrates your resources where they matter most, improving efficiency without ever compromising on quality.

This forward-thinking approach is especially critical in complex research areas. For example, look at the projected growth in the rare disease drug market, a field that leans heavily on efficient and precise data management.

This market explosion highlights the urgent need for more cost-effective trial solutions. Rare disease trials are notoriously difficult, with small patient pools and staggering costs, which makes advanced data management platforms essential. With forecasts predicting rare disease drug sales will hit nearly $135 billion by 2027, the pressure is on. We've seen how some solutions have helped contract research organizations (CROs) slash their trial database costs by up to 30% in complex cell and gene therapy studies, proving just how significant the financial upside of optimized data handling can be. You can learn more about the clinical trial trends shaping the industry and what they mean for the future.

How AI and Automation Are Shaping The Future

The future of clinical trial data management isn't some far-off concept anymore. It’s happening right now, with artificial intelligence and automation becoming essential tools of the trade. These aren't just buzzwords; they're practical solutions to some of the oldest headaches in medical research, from stubborn human error to frustrating operational delays.

Think of it this way: what if you had an expert data manager who could sift through millions of data points in seconds, work around the clock without getting tired, and catch tiny inconsistencies a human might overlook? That’s what AI offers. It’s a force multiplier, giving research teams a massive boost in capability.

This isn’t about replacing people, but empowering them. Projections show that by 2025, AI could be handling up to 50% of data-related tasks in clinical trials. And the financial impact is just as significant. Predictive analytics, one of the core functions of AI, is expected to cut trial costs by an estimated 15-25% by making things like patient recruitment smarter and more efficient. To get a better sense of where things are headed, it's worth exploring the future trends of clinical data management.

Smarter Data Cleaning and Validation

One of the most powerful and immediate uses for AI is in data cleaning and validation. Anyone who has worked in this field knows how time-consuming this can be—manually combing through records and firing off queries to sites to fix problems. AI turns this on its head.

Machine learning algorithms can be trained on mountains of historical trial data to learn what "normal" data looks like. Once they have that baseline, they become incredibly effective at automatically flagging anything that seems off.

- Intelligent Anomaly Detection: An AI can instantly spot a lab value that's biologically impossible for a certain patient, flagging it for review before it ever contaminates the dataset.

- Predictive Query Management: Instead of just finding existing errors, some systems can predict which data points are most likely to be wrong based on known risk factors. This lets teams focus their manual review efforts where they'll have the biggest impact.

This automated first pass frees up experienced data managers to tackle the truly complex issues that need a human brain, which helps get the database locked much faster.

The real power of AI in clinical trial data management is its ability to learn and adapt. The more data it processes, the better it becomes at identifying potential issues, creating a continuously improving quality control system.

Optimizing Trial Operations and Recruitment

AI and automation are doing more than just cleaning up data; they're fundamentally changing how we plan and run trials. By analyzing huge datasets from past trials, electronic health records, and real-world evidence, AI models can build a picture of the ideal patient or the perfect clinical site.

For instance, an AI could analyze population health data to find geographic hotspots for a rare disease. This insight guides site selection and recruitment efforts, ensuring they are targeted and effective from day one, which helps avoid the costly delays that come from slow enrollment.

Companies like PYCAD are on the front lines here, especially when it comes to medical imaging. They build AI models that automate the pre-processing and analysis of complex scans, a crucial step in many modern trials. This doesn't just make analysis faster; it also introduces a level of consistency and accuracy that can be difficult to achieve across different sites.

The bottom line is that research teams can move faster and with more precision. This ultimately helps get safe and effective treatments to the people who need them sooner than ever before. The era of intelligent data management is well and truly here.

Choosing The Right Clinical Data Management System

Picking the right Clinical Data Management System (CDMS) is one of the most important calls your research team will make. Think of it as the central nervous system for your entire trial. Get it right, and you’ll see smoother workflows, better data, and faster timelines. Get it wrong, and you're looking at bottlenecks, a frustrated team, and compromised data integrity.

This isn't a one-size-fits-all situation. The ideal CDMS for a massive pharmaceutical company running dozens of global trials is worlds apart from what a small biotech startup needs for a single, focused study. The trick is to line up the system's features with your team's real-world needs, budget, and where you see yourself in a few years.

A truly great CDMS does more than just hold data—it helps you actively manage it. The best systems today act as a central hub, pulling everything together from electronic data capture (EDC) to patient-reported outcomes (ePRO). This creates a single source of truth that you can actually trust.

Core Factors to Evaluate in a CDMS

Once you start looking at vendors, it's easy to get buried in flashy feature lists and technical buzzwords. To find what really matters, you need to zero in on the fundamentals that will shape the day-to-day success of your trial. Remember, you're looking for a partner, not just a piece of software.

Here are the critical factors to dig into:

-

Scalability and Flexibility: Can this system grow with you? A platform that’s perfect for a 50-patient Phase I study needs to handle a 5,000-patient Phase III trial without skipping a beat. It also has to be flexible enough to adapt to those inevitable mid-study protocol changes that are a fact of life in clinical research.

-

Integration Capabilities: Your CDMS won't work in isolation. It needs to play nicely with all the other eClinical tools in your arsenal, like lab information systems, imaging databases, and randomization and trial supply management (RTSM) services. Bad integrations lead to data silos and a ton of manual workarounds.

-

User Experience (UX): A system is only as good as the people using it. If the interface is clunky and confusing, you're practically inviting data entry errors and burnout from your site staff. Look for a clean, intuitive design that helps clinicians, data managers, and monitors get their work done without pulling their hair out.

The ultimate test of a CDMS is how it performs under pressure. A platform with robust security, a clear validation process, and responsive customer support is an asset. One that fails in these areas becomes a liability.

Unified Platform Versus Best-of-Breed

One of the big strategic decisions you'll face is whether to go all-in with a single, unified platform that offers the whole suite of tools (EDC, ePRO, eConsent, etc.) or to build your own stack with specialized, "best-of-breed" solutions from different vendors. Both paths have their own set of trade-offs.

| Approach | Pros | Cons |

|---|---|---|

| Unified Platform | Data flows seamlessly between modules, you have one vendor to call for support, and the user experience is generally consistent. | Might not have the most advanced features for every single function; you're locked into one company's pace of innovation. |

| Best-of-Breed | You get access to the top-tier features for each specific task and the flexibility to swap out components as better options emerge. | Integrations can be a nightmare to set up and maintain, you're managing multiple vendor contracts, and data sync issues can pop up. |

The right answer really comes down to your team's technical know-how and operational style. A unified platform is often the simpler, more reliable choice for teams that want a "just works" solution. The best-of-breed approach offers incredible power and customization but demands a lot more technical oversight to make sure all the parts mesh together.

Ultimately, a winning clinical trial data management strategy is built on a system that molds to your workflow, not the other way around.

Frequently Asked Questions

When you're deep in the world of clinical trial data management, certain questions always seem to pop up. Let's tackle some of the most common ones to clear up any confusion and give you a practical understanding of how things work.

What Is the Difference Between EDC and CDMS?

People often mix these two up, but they play distinct roles. Think of it this way: your smartphone is the device you use to input information (like an EDC), but iCloud or Google Drive is the system that stores, organizes, and backs up all that data (like a CDMS).

- An Electronic Data Capture (EDC) system is the front-end tool. It’s the digital form at the clinical site where a research coordinator or doctor enters patient data directly. It's all about the point of collection.

- A Clinical Data Management System (CDMS) is the comprehensive back-end platform. It’s the brains of the operation. The EDC is often just one component of a larger CDMS, which also handles data validation, cleaning, query resolution, and preparing the final dataset for analysis.

Simply put, an EDC is for capturing data, while a CDMS is for managing its entire lifecycle.

A strong CDMS acts as the single source of truth for a trial. It pulls together information from the EDC, lab reports, e-diaries, and imaging files into one secure, unified database. This is absolutely essential for data integrity.

Who Uses a Clinical Data Management System?

You might think a CDMS is just for data managers, but it's really the central hub for the entire clinical trial team. Different roles rely on it for different reasons.

Here’s a quick look at the key players:

- Sponsors and CROs: They use the CDMS for a bird's-eye view, overseeing the trial's progress and making sure data quality is consistent across every site.

- Clinical Research Associates (CRAs): They depend on it to verify source data and monitor how well a site is performing, often without needing to be physically present.

- Data Managers: This is their primary workspace. They build the database, run quality checks, and spearhead the data cleaning process.

- Biostatisticians: They access the final, locked dataset from the CDMS to run the numbers and find out if the treatment actually worked.

How Does a CDMS Ensure Data Quality?

A well-designed CDMS is built like a fortress to protect data quality. It doesn't rely on a single feature but uses a combination of tools working together to keep the data accurate, complete, and reliable.

First off, it runs automated edit checks in real-time. If someone tries to enter a patient's temperature as 986°F instead of 98.6°F, the system flags it instantly, preventing simple typos from muddying the dataset.

Next, it has a formal query management process. When a more complex issue arises—say, conflicting dates for a patient visit—the system allows data managers to send a formal query to the clinical site to get it resolved.

Finally, every action is recorded in a detailed audit trail. This log shows who changed what, when they changed it, and why. It creates a transparent record that is crucial for regulatory compliance and accountability.

Ready to see how AI can automate and enhance your medical imaging analysis within clinical trials? PYCAD builds intelligent solutions that streamline data processing, from annotation to model deployment, helping you achieve faster, more accurate results. Discover our AI services.