Why DICOM to NIfTI Conversion Changed My Research Game

Let's be honest, working with neuroimaging data can be a real headache. DICOM files? They're like trying to solve a puzzle with the pieces still in the box. Early in my research, I'd waste hours untangling DICOM's metadata, only to discover my analysis tools weren't compatible. It was incredibly frustrating.

But then I started converting my DICOM images to NIfTI format, and it was a game-changer. Seriously.

My processing pipelines went from clunky to smooth, my AI models could finally digest the data, and collaborating with colleagues became so much easier. This simple format switch has a huge impact, affecting everything from processing speed to research reproducibility. Speaking of smooth workflows, building a reliable CI/CD pipeline is essential. Check out this guide on CI/CD Pipeline Best Practices for some helpful tips.

Why NIfTI Simplifies the Neuroimaging Workflow

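NIfTI's popularity comes down to its simplicity. DICOM is packed with metadata, which is great in clinical settings. It also typically stores each slice of a scan as a separate file, which is not ideal for computational analysis. NIfTI, on the other hand, stores a whole 3D or 4D volume in a single file: a compact header (348 bytes in NIfTI-1), the voxel array, and an affine transform that maps voxel indices to millimeter coordinates. That streamlined structure is exactly what neuroimaging software and tools are built around.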

This means faster loading, processing, and visualization of 3D and 4D brain imaging data. Plus, most neuroimaging analysis packages (FSL, SPM, and ANTs, for example) and many medical-imaging AI libraries read NIfTI natively, which has made it the standard in many research settings.
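To make the "one array plus one affine" idea concrete, here is a minimal NumPy sketch of what the affine does: it turns a voxel index (i, j, k) into a position in millimeters. The 2 mm example affine is made up for illustration. With NiBabel you would take the real one from `nib.load(path).affine`, and NiBabel also ships `nibabel.affines.apply_affine`, which does the same job.

```python
import numpy as np

def voxel_to_world(affine: np.ndarray, ijk) -> np.ndarray:
    """Map voxel indices (i, j, k) to world coordinates (mm) with a 4x4 affine."""
    ijk1 = np.append(np.asarray(ijk, dtype=float), 1.0)  # homogeneous coordinates
    return (affine @ ijk1)[:3]

# Hypothetical affine: 2 mm isotropic voxels, shifted so voxel (45, 54, 45) lands at (0, 0, 0)
affine = np.array([
    [2.0, 0.0, 0.0, -90.0],
    [0.0, 2.0, 0.0, -108.0],
    [0.0, 0.0, 2.0, -90.0],
    [0.0, 0.0, 0.0, 1.0],
])

print(voxel_to_world(affine, (45, 54, 45)))  # → [0. 0. 0.]
```

For a 4D file the same affine applies to every time point; the fourth axis (time) is not part of the spatial transform.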

This shift to NIfTI didn’t happen overnight. Since the early 2000s, researchers have increasingly adopted DICOM to NIfTI conversion to make their workflows more efficient, and by 2016 it was recognized as an essential first step in neuroimaging data processing, one that hides much of DICOM's complexity.

Choosing the Right Conversion Approach

Picking the right conversion method, whether it's a tool like dcm2niix or a programmatic solution like NiBabel, can save you a ton of time and frustration. dcm2niix has a user-friendly command-line interface, perfect for quick conversions, especially with large datasets. NiBabel gives you more control within Python scripts, which is handy for integrating into complex analysis pipelines.

The best approach really depends on what you need and how comfortable you are with the technical aspects. Understanding the strengths of each method lets you tailor the conversion process to your specific research requirements.

What You're Really Working With: Understanding Your Data

Before we jump into the nitty-gritty of converting files, let's talk about what you're dealing with: DICOMs. Think of them as a super detailed medical record attached to your image. Great for doctors, but they can be a real pain when you’re trying to do research. Trust me, I’ve been there. Misunderstanding these files can lead to a lot of wasted time and wonky results.

Decoding the DICOM Structure

DICOMs aren't always straightforward. Different scanners and imaging protocols create quirks, making some datasets easy to convert and others…well, let's just say it can be a headache. One common trap is an unusual transfer syntax, the encoding DICOM uses for the pixel data (uncompressed, or compressed with schemes such as JPEG, JPEG-LS, or JPEG 2000). Some compressed transfer syntaxes can make conversion tools like dcm2niix fail, forcing you to dig into the technical weeds of your DICOM files. In my experience, checking this early can save you hours of troubleshooting.
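A quick way to "check this early" is to tally the transfer syntaxes in a dataset before converting anything. The sketch below assumes pydicom is installed and reads only headers (`stop_before_pixels=True`). The short list of uncompressed UIDs covers the three standard uncompressed syntaxes; everything else is treated as "inspect before converting". pydicom's own `UID.is_compressed` property is an alternative for UIDs it knows.

```python
from collections import Counter
from pathlib import Path

# Standard uncompressed transfer syntax UIDs (DICOM PS3.5).
UNCOMPRESSED = {
    "1.2.840.10008.1.2": "Implicit VR Little Endian",
    "1.2.840.10008.1.2.1": "Explicit VR Little Endian",
    "1.2.840.10008.1.2.2": "Explicit VR Big Endian (retired)",
}

def is_uncompressed(ts_uid: str) -> bool:
    """True if the transfer syntax stores raw, uncompressed pixel data."""
    return str(ts_uid).strip() in UNCOMPRESSED

def survey_transfer_syntaxes(folder: str) -> Counter:
    """Count transfer syntax UIDs for every file under `folder` (requires pydicom)."""
    import pydicom  # imported lazily so is_uncompressed() works without pydicom

    counts: Counter = Counter()
    for path in Path(folder).rglob("*"):
        if not path.is_file():
            continue
        try:
            ds = pydicom.dcmread(str(path), stop_before_pixels=True)
        except Exception:  # not DICOM, or an unreadable header
            counts["<unreadable>"] += 1
            continue
        meta = getattr(ds, "file_meta", None)
        counts[str(getattr(meta, "TransferSyntaxUID", "<missing>"))] += 1
    return counts
```

Usage, with a hypothetical folder name: `for uid, n in survey_transfer_syntaxes("dicom/sub-01").items(): print(uid, n, is_uncompressed(uid))`. Anything that is not uncompressed is where a conversion failure is most likely to come from.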

Another issue is dealing with incomplete series. There's nothing worse than starting a conversion only to realize you're missing some slices. It's like starting a puzzle and finding out pieces are missing. Being able to quickly check if your series is complete before you even begin is essential. This preliminary check is your first line of defense against a failed conversion.
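One cheap completeness check, sketched below under the assumption that your scanner numbers images consecutively within a series, is to look for gaps and duplicates in `InstanceNumber`. Not every scanner or protocol guarantees contiguous numbering, so treat a gap as "look closer", not proof of a missing slice. The grouping helper assumes pydicom is installed.

```python
from collections import Counter, defaultdict
from pathlib import Path

def find_gaps(instance_numbers):
    """Return (missing, duplicated) InstanceNumbers for one series."""
    counts = Counter(int(n) for n in instance_numbers)
    if not counts:
        return [], []
    lo, hi = min(counts), max(counts)
    missing = [n for n in range(lo, hi + 1) if n not in counts]
    duplicated = sorted(n for n, c in counts.items() if c > 1)
    return missing, duplicated

def instance_numbers_by_series(folder):
    """Group InstanceNumber values by SeriesInstanceUID (requires pydicom)."""
    import pydicom

    series = defaultdict(list)
    for path in Path(folder).rglob("*"):
        if not path.is_file():
            continue
        try:
            ds = pydicom.dcmread(str(path), stop_before_pixels=True)
        except Exception:
            continue  # non-DICOM files are better flagged by a separate check
        number = getattr(ds, "InstanceNumber", None)
        if number not in (None, ""):
            series[getattr(ds, "SeriesInstanceUID", "<no series UID>")].append(number)
    return dict(series)
```

Running `find_gaps` on each entry of `instance_numbers_by_series(...)` gives a per-series report before a single conversion starts.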

Auditing Your DICOM Collection

Think of auditing your DICOMs like prepping ingredients before you bake. You don't want to find out halfway through that the milk’s gone bad, right? Same goes for starting a conversion with bad data.

First, check quality and completeness. I always start with a visual check of a few images, just to catch any glaring issues. Then I make sure the important metadata, like slice thickness and acquisition parameters, is present. It’s like detective work, but trust me, this early effort saves a lot of frustration later. After all, the whole reason we convert to NIfTI is that DICOM just isn’t built for the kind of advanced analysis we often do in research, and NIfTI handles those tasks much better.
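The metadata part of that audit is easy to automate. This sketch only uses `getattr`, so it works on pydicom `Dataset` objects (for example from `pydicom.dcmread(path, stop_before_pixels=True)`) and on plain stand-ins like `SimpleNamespace`. The `REQUIRED_FIELDS` checklist is hypothetical; adjust it to what your analysis actually needs.

```python
from types import SimpleNamespace

# Hypothetical checklist of DICOM keywords; tailor it to your protocol.
REQUIRED_FIELDS = ("SliceThickness", "PixelSpacing", "ImageOrientationPatient",
                   "ImagePositionPatient", "RepetitionTime", "EchoTime")

def audit_fields(datasets, required=REQUIRED_FIELDS):
    """Map each required keyword to how many datasets lack it (only non-zero counts)."""
    missing = {name: 0 for name in required}
    for ds in datasets:
        for name in required:
            value = getattr(ds, name, None)
            if value is None or value == "":
                missing[name] += 1
    return {name: n for name, n in missing.items() if n}

# Stand-in headers for illustration (DICOM RepetitionTime is in milliseconds).
headers = [
    SimpleNamespace(SliceThickness=1.0, PixelSpacing=[0.9, 0.9], RepetitionTime=2300.0),
    SimpleNamespace(SliceThickness=1.0, RepetitionTime=2300.0),
]
print(audit_fields(headers, required=("SliceThickness", "PixelSpacing", "RepetitionTime")))
# → {'PixelSpacing': 1}
```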

And finally, consider preprocessing. Sometimes, you need to tweak your data before you convert. Maybe you need to remove patient information or rotate the images so they’re aligned correctly. Knowing this beforehand can make a big difference in how smooth the whole process goes. This upfront work is the difference between a quick conversion and a debugging marathon that can throw off your entire project.
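For the "remove patient information" step, here is a deliberately minimal sketch. The keyword list is a hypothetical starting point, not a complete de-identification; for real data sharing, follow the confidentiality profiles in DICOM PS3.15 or a vetted tool. The function uses `setattr`, so it works on a pydicom `Dataset`, and it calls pydicom's `remove_private_tags()` when that method is available.

```python
# Hypothetical, incomplete list of identifying keywords, for illustration only.
IDENTIFYING_KEYWORDS = ("PatientName", "PatientID", "PatientBirthDate",
                        "PatientAddress", "InstitutionName", "ReferringPhysicianName")

def blank_identifiers(ds, keywords=IDENTIFYING_KEYWORDS):
    """Blank identifying fields in place; drop private tags if the object supports it.

    Returns the keywords that were actually blanked.
    """
    blanked = []
    for kw in keywords:
        if getattr(ds, kw, None) not in (None, ""):
            setattr(ds, kw, "")
            blanked.append(kw)
    if hasattr(ds, "remove_private_tags"):  # pydicom Dataset method
        ds.remove_private_tags()
    return blanked
```

With pydicom you would then write the cleaned copy with `ds.save_as(new_path)`, keeping the originals untouched in a restricted location.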

Mastering dcm2niix: The Conversion Tool You Can Rely On

Let me tell you, dcm2niix is a lifesaver in neuroimaging. I’ve wrestled with so many DICOM to NIfTI converters over the years, and honestly, dcm2niix is the one I keep coming back to. It’s reliable, powerful, and gets the job done. Let's dive into why it's so good and how to use it effectively.

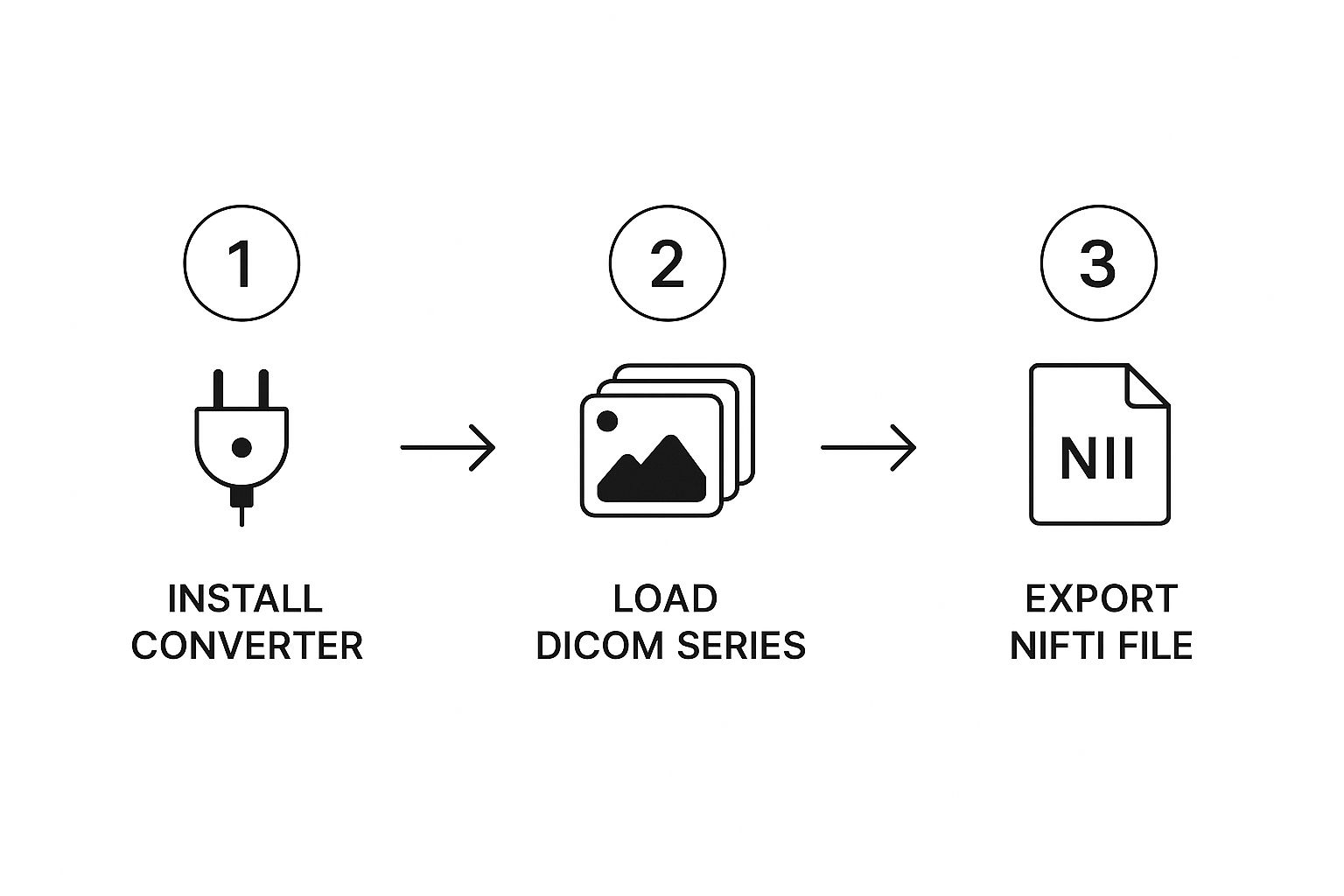

This infographic shows the basic idea behind using a DICOM to NIfTI converter:

You install the converter, load your DICOM series, and export the NIfTI file. Simple, right? It's the foundation for getting medical image data into your research pipelines. Speaking of pipelines, clean data integration is crucial for any kind of data, medical images included. If you're interested in best practices for data integration generally, check out this article: data integration best practices.

Setting Up dcm2niix for Success

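Even with good documentation, installation can be tricky. Trust me, I've hit enough roadblocks to know. The easiest route is usually a package manager: dcm2niix is available through conda (conda-forge), Homebrew on macOS, apt on Debian and Ubuntu, and pip, and prebuilt binaries are published on the project's GitHub releases page. Compiling from source is mainly worth it when you need a build option the packages lack, such as optional codec support. Whichever route you take, confirm the binary is actually on your PATH before you script around it.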
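A small guard at the top of a conversion script saves a confusing failure later. This sketch uses only the standard library; the install commands in `INSTALL_HINTS` mirror the package managers mentioned above, so double-check them against the dcm2niix README for your platform.

```python
import shutil

INSTALL_HINTS = (
    "conda install -c conda-forge dcm2niix",
    "brew install dcm2niix          # macOS (Homebrew)",
    "sudo apt install dcm2niix      # Debian/Ubuntu",
    "pip install dcm2niix",
)

def require_executable(name: str = "dcm2niix") -> str:
    """Return the full path of `name` on PATH, or raise with install hints."""
    path = shutil.which(name)
    if path is None:
        hints = "\n  ".join(INSTALL_HINTS)
        raise FileNotFoundError(f"'{name}' not found on PATH. Try one of:\n  {hints}")
    return path
```

Calling `require_executable()` once at startup means a missing install fails immediately with a readable message instead of halfway through a batch.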

Command-Line Mastery for Professional Data Transformation

The real power of dcm2niix is in its command-line interface. This isn't just about converting; it’s about transforming your data. Forget basic commands; we’re going to explore how to truly leverage dcm2niix. Think batch processing hundreds of files without babysitting the process. Think smart output naming conventions that will still make sense months down the line.

Let's talk about practical command options. I've put together a table summarizing some of the most useful ones:

Essential dcm2niix Command Options Comparison

This table breaks down key command-line options and when you’d want to use them.

| Option | Purpose | When to Use | Output Impact |
|---|---|---|---|
| `-b y` | Enable BIDS sidecar creation | When preparing data for BIDS-compliant datasets | Creates JSON sidecar files with metadata |
| `-f %p_%t_%s` | Customize output file names (`%p` = protocol name, `%t` = date and time, `%s` = series number) | To give outputs predictable, descriptive names | Files named from DICOM header fields |
| `-z y` | Compress output NIfTI files (gzip) | Save disk space, especially for large datasets | Smaller `.nii.gz` files, slightly longer conversion time |
| `-o <dir>` | Specify output directory | Keep your project organized | NIfTI files saved to the designated location |
| `-m y` | Merge 2D slices from the same series even if they differ in echo, exposure, etc. | When a series you expect as one volume is split into several files | Fewer, combined output files |

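As you can see, mastering these options gives you fine-grained control over your conversions. This isn’t just convenient; it’s essential for reproducible research. Note that for fMRI you usually don't need `-m` at all: dcm2niix already stacks a time series into a single 4D file by default.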
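Here is a minimal sketch of batch processing with those options from Python. It assumes a hypothetical layout with one DICOM subfolder per session under `root`, and it only uses the flags covered in the table (`-b`, `-z`, `-f`, `-o`). dcm2niix's stdout and stderr are saved per session so failures can be diagnosed later.

```python
import subprocess
from pathlib import Path

def build_dcm2niix_cmd(dicom_dir, out_dir, name_format="%p_%t_%s",
                       bids=True, gzip=True, exe="dcm2niix"):
    """Assemble a dcm2niix command line as a list (no shell quoting needed)."""
    return [
        exe,
        "-b", "y" if bids else "n",  # BIDS JSON sidecar
        "-z", "y" if gzip else "n",  # gzip-compressed .nii.gz output
        "-f", name_format,           # output file name pattern
        "-o", str(out_dir),          # output directory (created below if missing)
        str(dicom_dir),              # input folder
    ]

def convert_sessions(root, out_root, **kwargs):
    """Run dcm2niix once per subfolder of `root`; return names of failed sessions."""
    failures = []
    for session in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        out_dir = Path(out_root) / session.name
        out_dir.mkdir(parents=True, exist_ok=True)
        result = subprocess.run(build_dcm2niix_cmd(session, out_dir, **kwargs),
                                capture_output=True, text=True)
        # Keep the tool's own output next to the converted files.
        (out_dir / "dcm2niix.log").write_text(result.stdout + result.stderr)
        if result.returncode != 0:
            failures.append(session.name)
    return failures
```

Building the command as a list keeps every parameter explicit and reviewable, which is the "tight control" you want when hundreds of sessions run unattended.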

Advanced dcm2niix Features: BIDS Compliance and Beyond

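BIDS (Brain Imaging Data Structure) compliance is essential for modern neuroimaging workflows. With `-b y`, dcm2niix writes a BIDS-style JSON sidecar next to every NIfTI file, and with `-f` and `-o` you can name and place outputs to match BIDS conventions. For organizing a full dataset (subject and session folders, modality subfolders), wrappers such as dcm2bids or HeuDiConv build on dcm2niix. BIDS-organized data is immediately usable with many analysis tools and pipelines, saving you a ton of preprocessing time and ensuring consistency across your projects.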
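A simplified sketch of the naming side: build a BIDS-style folder and file stem, pass them to dcm2niix as `-o` and `-f`, then check the sidecar for the fields you rely on. It only handles the `sub`, `ses`, and `run` entities (enough for an anatomical scan like `T1w`); functional data also needs entities such as `task`, which this helper deliberately omits. dcm2niix writes `RepetitionTime` and `EchoTime` in seconds in its sidecars.

```python
import json
from pathlib import Path

def bids_stem(subject, suffix, session=None, run=None):
    """File stem such as 'sub-01_ses-pre_run-1_T1w' (sub/ses/run entities only)."""
    parts = [f"sub-{subject}"]
    if session:
        parts.append(f"ses-{session}")
    if run is not None:
        parts.append(f"run-{run}")
    parts.append(suffix)
    return "_".join(parts)

def bids_dir(root, subject, datatype, session=None):
    """Folder such as <root>/sub-01/ses-pre/anat."""
    path = Path(root) / f"sub-{subject}"
    if session:
        path = path / f"ses-{session}"
    return path / datatype

def sidecar_missing(json_path, keys=("RepetitionTime", "EchoTime")):
    """Return the keys absent from a JSON sidecar."""
    meta = json.loads(Path(json_path).read_text())
    return [k for k in keys if k not in meta]
```

For example, `-o` set to `bids_dir("bids", "01", "anat", session="pre")` and `-f` set to `bids_stem("01", "T1w", session="pre")` places a T1w image where BIDS expects it (create the folder first).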

Real-World Scenarios and Troubleshooting

Let’s be honest, DICOM datasets can be a mess. We’ll dive into those tricky situations everyone dreads – multi-echo sequences, incomplete series, huge datasets. I’ll share some hard-won troubleshooting tricks, from deciphering cryptic error messages to salvaging seemingly corrupted data. These tips will have you ready for just about any conversion challenge. This moves beyond simple conversion and into professional data management for neuroimaging research. This way, you’re focused on the science, not wrestling with file formats.

Python Power: Building Custom Workflows with NiBabel

So, you want more control over converting your DICOM images to NIfTI? Or maybe you're trying to build a slick analysis pipeline and need the conversion process baked right in? NiBabel in Python gives you the flexibility to do just that. Let's dive into some practical ways to use it, from single file conversions to wrangling massive datasets, all while keeping things robust and keeping track of progress.

Speaking of NiBabel, its project homepage makes the focus clear: reading, writing, and manipulating neuroimaging data. That makes it a must-have if you’re working with DICOM and NIfTI in Python. It's not just about conversion; it's about truly understanding and working with your data.

Combining NiBabel and pydicom for Custom Workflows

Combining NiBabel with pydicom is seriously powerful: pydicom reads the DICOM headers and pixel data, and NiBabel writes the result as NIfTI. Together they let you build custom workflows that cater to your specific research needs, things that off-the-shelf tools might miss. This is incredibly valuable when you have unusual imaging protocols or tricky metadata requirements. I remember working with a dataset once where the slice ordering was totally non-standard. NiBabel and pydicom made it easy to reorder the slices before converting, saving me a huge headache later.

Preserving Critical Metadata During Conversion

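Losing crucial metadata during conversion is a nightmare. The catch is that the NIfTI header itself only has room for a handful of fields: voxel sizes (and, for 4D data, the repetition time), orientation (the qform and sform affines), units, and an 80-character description. So the common practice, and the one dcm2niix follows, is to write everything else, such as acquisition parameters, to a JSON sidecar next to the image. Patient demographics are usually better left out entirely when data will be shared. This maintains data integrity, so you don't lose any valuable information.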
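Here is a minimal sketch of the geometry plus the sidecar. It assumes single-frame DICOM slices that are already sorted, equally spaced, and without gantry tilt, with the volume stacked as `np.stack([ds.pixel_array.T for ds in slices], axis=-1)` (so the array axes are column, row, slice). DICOM coordinates are LPS while NIfTI is RAS, hence the final flip. dcm2niix handles far more edge cases than this; the point is to show where the numbers come from. NiBabel is imported lazily inside the save function.

```python
import json
from pathlib import Path

import numpy as np

LPS_TO_RAS = np.diag([-1.0, -1.0, 1.0, 1.0])  # DICOM patient axes (LPS) -> NIfTI (RAS)

def dicom_affine(orientation, pixel_spacing, first_pos, last_pos, n_slices):
    """Voxel-to-RAS affine for data indexed [column, row, slice]; needs n_slices >= 2."""
    iop = np.asarray(orientation, dtype=float)
    row_dir, col_dir = iop[:3], iop[3:]
    row_spacing, col_spacing = (float(v) for v in pixel_spacing)  # PixelSpacing order
    first = np.asarray(first_pos, dtype=float)
    step = (np.asarray(last_pos, dtype=float) - first) / (n_slices - 1)
    affine = np.eye(4)
    affine[:3, 0] = row_dir * col_spacing  # next column: move along the row direction
    affine[:3, 1] = col_dir * row_spacing  # next row: move along the column direction
    affine[:3, 2] = step                   # next slice
    affine[:3, 3] = first                  # position of the first voxel
    return LPS_TO_RAS @ affine

def save_nifti_with_sidecar(volume, affine, out_stem, metadata):
    """Write <out_stem>.nii.gz plus a <out_stem>.json sidecar (requires nibabel)."""
    import nibabel as nib

    img = nib.Nifti1Image(volume, affine)
    img.set_qform(affine, code=1)  # code 1 = scanner coordinates
    img.set_sform(affine, code=1)
    img.header.set_xyzt_units("mm", "sec")
    nib.save(img, f"{out_stem}.nii.gz")
    Path(f"{out_stem}.json").write_text(json.dumps(metadata, indent=2, default=str))
```

Remember to apply `RescaleSlope` and `RescaleIntercept` to the pixel data if they are present, and to put acquisition parameters (TR, TE, flip angle) into the `metadata` dict rather than trying to squeeze them into the header.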

Implementing Quality Checks and Modular Functions

Quality checks are like insurance for your analysis. We’ll build checks that catch potential issues, like incorrect dimensions or missing data, before they derail your whole project. Trust me, it’s much better to catch these things early! And modular functions? They're all about making your code adaptable and reusable. Think of them as building blocks for your conversion scripts, tailored to your research.
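A sketch of what such a check can look like: one small, reusable function you call right after every conversion, returning a list of human-readable problems (empty means "looks fine"). The thresholds and the set of checks are assumptions to adapt; it takes plain NumPy arrays, so it works with `img.get_fdata()` and `img.affine` from NiBabel.

```python
import numpy as np

def check_volume(data, affine, expected_shape=None):
    """Return a list of problems found in a converted volume (empty list = OK)."""
    problems = []
    data = np.asarray(data)
    if expected_shape is not None and data.shape != tuple(expected_shape):
        problems.append(f"shape {data.shape} != expected {tuple(expected_shape)}")
    if not np.all(np.isfinite(data)):
        problems.append("non-finite values (NaN/Inf) present")
    if data.size and not np.any(data):
        problems.append("volume is all zeros")
    if abs(np.linalg.det(np.asarray(affine, dtype=float)[:3, :3])) < 1e-6:
        problems.append("affine is singular (zero-size or collapsed voxels)")
    return problems
```

Because each check is independent, adding a project-specific rule (say, a minimum number of slices) is a two-line change.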

Scaling Your Conversion Pipeline

We'll also explore how to integrate with heavy hitters like NumPy and SciPy. This unlocks the ability to do preprocessing right within your conversion workflow, making your entire analysis process more efficient. Finally, we'll talk about scaling. Whether you're working on a small pilot study or a massive multi-site project, we'll cover how to build pipelines that can handle the load. It's not just about conversion; it's about building a solid foundation for all your neuroimaging analysis.
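For the scaling side, here is a minimal sketch of a batch runner built on the standard library's `concurrent.futures`. It assumes each item (for example a session folder) is handled by a `convert_one` callable you supply, such as a function that runs dcm2niix. Threads are a reasonable choice when each worker mostly waits on an external dcm2niix process; for CPU-heavy NumPy/SciPy preprocessing in Python, swapping in `ProcessPoolExecutor` is the usual alternative.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def run_batch(items, convert_one, max_workers=4):
    """Run convert_one(item) concurrently; return (sorted successes, {item: error})."""
    succeeded, failed = [], {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(convert_one, item): item for item in items}
        for future in as_completed(futures):
            item = futures[future]
            try:
                future.result()          # re-raises any exception from the worker
                succeeded.append(item)
            except Exception as exc:
                failed[item] = repr(exc)
    return sorted(succeeded), failed
```

One failed session never stops the rest, and the returned `failed` dict is exactly the list you need to re-run.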

AI Integration: Making DICOM to NIfTI Work in ML Pipelines

Converting DICOM to NIfTI is essential, sure, but it's really just the first step. The magic happens when you weave this conversion smoothly into your AI workflows. Think of it like a well-oiled machine: the conversion is one vital cog, but everything needs to work together seamlessly for peak performance. Let's dive into how experienced teams automate this process within their machine learning pipelines, from the moment data comes in to deploying the final model.

Building Preprocessing Pipelines That Actually Work

I’ve been there: messy data can completely derail an AI project. That's why building robust preprocessing pipelines is so important. With frameworks like TensorFlow and PyTorch, you can wrap the DICOM to NIfTI conversion, normalization, and augmentation into a single, unified data pipeline. Imagine a single pipeline that takes raw DICOM data and produces data perfectly prepped for your AI model; that’s the dream. This streamlined approach makes data preparation efficient and minimizes manual tweaks, which are often a source of errors.
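Two common steps after conversion, sketched in plain NumPy so they can sit inside a PyTorch `Dataset.__getitem__` or a TensorFlow data pipeline: z-score normalization over nonzero voxels (a crude foreground mask, an assumption that suits skull-stripped or background-zero images) and a random left-right flip. The flip assumes the data were reoriented to RAS so that axis 0 is left-right.

```python
import numpy as np

def zscore_nonzero(volume):
    """Z-score intensities using only nonzero voxels; background stays 0."""
    vol = np.asarray(volume, dtype=np.float32)
    mask = vol != 0
    out = np.zeros_like(vol)
    if mask.any():
        fg = vol[mask]
        std = fg.std()
        out[mask] = (fg - fg.mean()) / (std if std > 0 else 1.0)
    return out

def random_flip(volume, rng, axis=0, p=0.5):
    """Flip along `axis` with probability p (axis 0 = left-right for RAS data)."""
    return np.flip(volume, axis=axis) if rng.random() < p else volume
```

Passing an explicit `rng = np.random.default_rng(seed)` keeps augmentation reproducible across runs.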

Also, a quick tip from experience: document your Python code well. This guide on How To Write Python Code Documentation is a lifesaver. Good documentation makes teamwork smoother and keeps your pipelines maintainable in the long run.

Managing Terabytes of Imaging Data Efficiently

Massive datasets are a fact of life in medical imaging, and efficient data management becomes mission-critical once you're dealing with terabytes. I remember working on a project with over 5TB of DICOM images. Talk about a data deluge! We had to get good at optimizing storage, loading data efficiently, and using cloud computing to make it work. It’s like organizing a gigantic library: you need a solid system to find what you need quickly.
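One loading strategy that helps at this scale is to never pull a whole 4D file into memory. NiBabel's `img.dataobj` is an array proxy that reads only the slice you index, and uncompressed `.nii` files can additionally be memory-mapped (`.nii.gz` cannot, so it pays for decompression on each read). The sketch below works on any array-like with `.shape` and slicing, so it runs on a plain NumPy array too.

```python
import numpy as np

def iter_volumes(dataobj):
    """Yield 3D volumes one at a time from a 4D array-like (last axis = time)."""
    for t in range(dataobj.shape[-1]):
        yield np.asarray(dataobj[..., t])

def mean_over_time(dataobj):
    """Voxel-wise temporal mean computed one volume at a time."""
    total = None
    for vol in iter_volumes(dataobj):
        total = vol.astype(np.float64) if total is None else total + vol
    return total / dataobj.shape[-1]
```

Usage with NiBabel (file name hypothetical): `img = nib.load("sub-01_bold.nii")`, then `mean_over_time(img.dataobj)`. Peak memory stays around one volume rather than the whole series.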

Implementing Bulletproof Error Handling

Let’s be honest, things break. Especially with complex data and automated processes. Robust error handling is your lifeline. Picture this: your pipeline crashes at 3 AM, ruining your entire training run. Ouch. Checks throughout the pipeline, detailed error logging, and automated alerts can prevent these nightmares. I personally rely on email or Slack notifications for critical errors – it's a huge time-saver. A well-designed error handling system keeps things running smoothly, even when the unexpected happens.
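A minimal sketch of that pattern with the standard `logging` module: each pipeline step runs through a wrapper that logs the full traceback and hands a short message to an optional `notify` callable. The notifier is deliberately left abstract; a function that posts to Slack or sends an email would be your own (hypothetical here) implementation.

```python
import logging

logger = logging.getLogger("conversion")

def run_guarded(task, name, notify=None, reraise=False):
    """Run task(); on failure log the traceback, optionally alert, and return None.

    notify: any callable taking one message string (e.g. a Slack/email sender).
    """
    try:
        return task()
    except Exception as exc:
        logger.exception("step %s failed", name)
        if notify is not None:
            notify(f"[conversion] {name} failed: {exc!r}")
        if reraise:
            raise
        return None
```

Using `reraise=True` for steps that must stop the run (a corrupted reference atlas, say) and the default for per-subject steps keeps one bad scan from killing a whole overnight job.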

Optimizing Conversion Parameters for Different AI Applications

Here's the thing: the best DICOM to NIfTI conversion settings aren't one-size-fits-all. They depend on what you're trying to do. The parameters for training a segmentation model might be different from those for a classification task. Understanding how voxel size, data orientation, and compression affect downstream performance is key to getting good results. In my experience, experimenting with different conversion settings and seeing how they impact your model is worth the effort.
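Before comparing settings, it helps to verify what you actually got. This sketch reads voxel sizes and approximate orientation codes straight from a 4x4 affine. The `axis_codes` helper is a simplified stand-in for NiBabel's `nibabel.aff2axcodes` and is only reliable for near-axis-aligned data; `nibabel.as_closest_canonical(img)` is the usual way to reorient everything to RAS before training.

```python
import numpy as np

def voxel_sizes(affine):
    """Voxel edge lengths (mm) from the 3x3 part of an affine."""
    return np.sqrt((np.asarray(affine, dtype=float)[:3, :3] ** 2).sum(axis=0))

def axis_codes(affine):
    """Approximate orientation codes such as ('R', 'A', 'S'), one per voxel axis."""
    labels = (("L", "R"), ("P", "A"), ("I", "S"))  # (negative, positive) per RAS axis
    m = np.asarray(affine, dtype=float)[:3, :3]
    codes = []
    for col in m.T:  # each column = direction of one voxel axis in world space
        world_axis = int(np.argmax(np.abs(col)))
        codes.append(labels[world_axis][int(col[world_axis] > 0)])
    return tuple(codes)
```

Checking that every file in a training set has the same codes and voxel sizes catches the silent mismatches that otherwise show up as mysteriously poor model performance.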

Real-World Case Studies and Practical Tips

Real-world examples show how integrating the conversion process the right way can drastically improve model performance and shorten training time. One study saw a 20% reduction in overall training time just by integrating DICOM to NIfTI conversion directly into the preprocessing pipeline. We'll also cover practical tips for maintaining data lineage and reproducibility. These aren't just theoretical advantages; they make your research better and more efficient, especially when collaborating or publishing your work. These practices help you build a solid, reproducible base for your AI research.
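For data lineage, a small provenance record written next to each conversion goes a long way. This sketch uses only the standard library; the field names are a hypothetical schema, and the tool version string is whatever you capture from the tool itself. Hashing outputs (not the raw DICOMs, which can be huge) lets you prove later that a file is byte-for-byte the one you trained on.

```python
import hashlib
import json
import platform
from datetime import datetime, timezone
from pathlib import Path

def sha256_of(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def write_provenance(out_json, inputs, outputs, tool, tool_version, params):
    """Record inputs, tool, settings, and output checksums as JSON (hypothetical schema)."""
    record = {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "python": platform.python_version(),
        "tool": tool,
        "tool_version": tool_version,
        "parameters": params,
        "inputs": [str(p) for p in inputs],
        "outputs": {str(p): sha256_of(p) for p in outputs},
    }
    Path(out_json).write_text(json.dumps(record, indent=2))
    return record
```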

Let's talk tools for a moment. Here’s a comparison of some popular options to give you a sense of their strengths and weaknesses:

Conversion Tools Performance Comparison

Speed, memory usage, and feature comparison across popular DICOM to NIfTI conversion tools

| Tool | Relative Speed | Relative Memory Usage | Key Features | Best Use Case |
|---|---|---|---|---|
| dcm2niix | High | Moderate | Flexible, command-line based, widely used | Large datasets, scripting, batch processing |
| NiBabel | Moderate | Low | Python library, good for integration into Python workflows (typically paired with pydicom for reading DICOM) | Python-based pipelines, custom processing |
| 3D Slicer | Low | High | GUI-based, good for visualization and manual adjustments | Small datasets, visual inspection, quality control |

This table offers a quick overview; your specific needs might prioritize certain features over others. For instance, if you're working primarily in Python, NiBabel's integration capabilities might make it the best choice. If you need to process huge datasets quickly, dcm2niix might be the winner.

When Everything Goes Wrong: Real Troubleshooting Strategies

Let's be honest, DICOM to NIfTI conversion can be a real pain sometimes, even with the best tools like dcm2niix and NiBabel. We've all been there: one minute everything's humming along, the next you're staring at some bizarre error message. I've pulled my hair out over this stuff, so let me share some tips that have saved me from countless headaches.

Diagnosing Common Conversion Disasters

Sometimes, your NIfTI files end up corrupted, and your analysis software just throws its hands up. Other times, the orientation gets scrambled, silently messing with your spatial analysis. Or maybe, just maybe, you get hit with those sneaky scale factor errors that can quietly corrupt your data without you even realizing it. I've seen these issues derail entire projects, so catching them early is critical.
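Orientation and scaling problems live in the header, so they can be flagged automatically. The sketch below separates the rules (plain values in, warnings out) from the NiBabel loading. It relies on `Nifti1Header.get_qform(coded=True)`, `get_sform(coded=True)`, and `get_slope_inter()`; the latter returns `(None, None)` when no scaling is set. Which situations count as warnings is an assumption you may want to tune.

```python
import numpy as np

def header_warnings(qform, qform_code, sform, sform_code, slope, inter, atol=1e-3):
    """Flag common orientation and intensity-scaling issues from NIfTI header values."""
    warnings = []
    if qform_code == 0 and sform_code == 0:
        warnings.append("no qform or sform code set: orientation is undefined")
    elif qform_code > 0 and sform_code > 0 and not np.allclose(qform, sform, atol=atol):
        warnings.append("qform and sform disagree: tools may pick different orientations")
    if slope is not None and (slope != 1.0 or (inter or 0.0) != 0.0):
        warnings.append(f"intensity scaling active (scl_slope={slope}, scl_inter={inter}): "
                        "check that every tool in the pipeline applies it")
    return warnings

def nifti_warnings(path):
    """Load a NIfTI header with NiBabel and run header_warnings on it."""
    import nibabel as nib

    hdr = nib.load(path).header
    qform, qcode = hdr.get_qform(coded=True)
    sform, scode = hdr.get_sform(coded=True)
    slope, inter = hdr.get_slope_inter()
    return header_warnings(qform, int(qcode), sform, int(scode), slope, inter)
```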


Deciphering Cryptic Error Messages

Those error messages, right? Often, they're more confusing than helpful. But learning to decode them is a crucial skill in neuroimaging. We'll dive into common error patterns, figure out what they really mean, and show you how to get to the root of the problem. Often, the solution is hidden in the subtle relationship between your DICOM data, the conversion tool you're using, and your system environment.
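A first step in decoding messages is simply pulling them out of a long log. This sketch assumes, as a heuristic, that the lines worth reading start with "Warning" or "Error" (case-insensitive), which is how many command-line tools, dcm2niix included, tend to label them. Verify against your own logs and adjust the pattern if your version formats messages differently.

```python
import re

# Heuristic pattern: a line that starts with "warning" or "error", any case.
MESSAGE_RE = re.compile(r"^\s*(warning|error)\b[:\s]*(.*)$", re.IGNORECASE)

def extract_messages(log_text):
    """Return (level, message) pairs for lines starting with Warning/Error."""
    found = []
    for line in log_text.splitlines():
        m = MESSAGE_RE.match(line)
        if m:
            found.append((m.group(1).lower(), m.group(2).strip()))
    return found
```

Grouping these pairs across a whole batch quickly shows whether you are facing one systematic problem or many unrelated ones.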

Identifying Problematic DICOM Files Before They Cause Havoc

An ounce of prevention is worth a pound of cure. Instead of letting a bad DICOM file crash your whole batch job, we'll look at ways to spot the troublemakers before they cause problems. This could mean checking metadata for inconsistencies, looking for corrupted slices, or verifying the integrity of the DICOM header. Think of it like a pre-flight check for your data—ensuring a smooth conversion.
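The cheapest pre-flight check needs no DICOM library at all: standard DICOM Part 10 files start with a 128-byte preamble followed by the bytes `DICM`. The sketch below splits a folder into likely-DICOM files and suspicious ones. Some older or raw files lack the preamble yet are still readable (pydicom can open them with `force=True`), and a truncated file can still pass this test, so "suspicious" means "inspect", and a full read of a sample of files remains worthwhile.

```python
from pathlib import Path

def has_dicm_marker(path):
    """True if bytes 128..131 of the file are b'DICM' (DICOM Part 10 layout)."""
    with open(path, "rb") as fh:
        fh.seek(128)
        return fh.read(4) == b"DICM"

def split_candidates(folder):
    """Partition files into (likely DICOM, suspicious) before batch conversion."""
    good, suspicious = [], []
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file():
            (good if has_dicm_marker(path) else suspicious).append(path)
    return good, suspicious
```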

Recovering Data from Seemingly Hopeless Conversion Failures

Let's face it, sometimes things go wrong despite our best efforts. But don’t give up! Even when conversions seem totally botched, you can often salvage the data. We’ll cover strategies for recovering data from partially corrupted files, trying different conversion tools or parameters, and even—in extreme cases—manually editing NIfTI headers when you’re truly desperate. Losing data is a nightmare, so having these recovery strategies can be a real lifesaver.
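For the "manually editing NIfTI headers" case, the safest pattern is to never edit in place. This sketch writes a copy whose qform and sform are replaced by an affine you trust, for example one recomputed from the DICOM headers or taken from a good conversion of the same series. It uses NiBabel's `set_qform`/`set_sform`; code 1 means scanner coordinates. The guard refusing to overwrite the source is a design choice, not a NiBabel requirement.

```python
from pathlib import Path

def repair_affine(src, dst, affine, code=1):
    """Write a copy of a NIfTI file with qform and sform set to `affine` (needs nibabel)."""
    if Path(src).resolve() == Path(dst).resolve():
        raise ValueError("write repaired headers to a new file; keep the original")
    import nibabel as nib

    img = nib.load(src)
    img.set_qform(affine, code=code)
    img.set_sform(affine, code=code)
    nib.save(img, dst)
    return dst
```

Afterwards, overlay the repaired image on a structural scan in a viewer before trusting it.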

I've worked with all sorts of challenging datasets—from ancient scanner outputs to cutting-edge multi-modal sequences—and I'll share real-world examples of how I've tackled conversion issues. These strategies will help you troubleshoot effectively, saving you time and potentially rescuing your research from disaster.

Building Bulletproof DICOM to NIfTI Workflows That Scale

Working with neuroimaging data? It's not just about knowing the tools—it’s about building a rock-solid, scalable workflow. Whether your project is a small pilot study or a massive multi-site endeavor, a reliable DICOM to NIfTI conversion process is absolutely essential. Let's dive into creating one that’s not just functional, but truly bulletproof.

Practical Quality Assurance for Early Problem Detection

Catching errors early can save you tons of time and frustration down the road. I've been there—small conversion glitches can become major headaches if left unchecked. Building quality checks into every stage—from initial DICOM validation to final NIfTI verification—is like having a safety net for your data. This might include automated checks on data integrity, visually inspecting some sample images, or comparing against previous successful conversions.
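"Comparing against previous successful conversions" can be automated as a regression check, which is also how you can validate a new dcm2niix release before switching a project over. A sketch in NumPy, assuming you keep a trusted reference conversion; the tolerances are placeholder assumptions (exact equality by default for voxel values).

```python
import numpy as np

def compare_conversions(new_data, new_affine, ref_data, ref_affine,
                        affine_atol=1e-4, max_abs_diff=0.0):
    """Compare a fresh conversion with a trusted reference; return a list of problems."""
    new_data, ref_data = np.asarray(new_data), np.asarray(ref_data)
    if new_data.shape != ref_data.shape:
        return [f"shape changed: {ref_data.shape} -> {new_data.shape}"]
    problems = []
    if not np.allclose(new_affine, ref_affine, atol=affine_atol):
        problems.append("affine changed (orientation or voxel size differs)")
    diff = np.max(np.abs(new_data.astype(np.float64) - ref_data.astype(np.float64)),
                  initial=0.0)
    if diff > max_abs_diff:
        problems.append(f"voxel values differ by up to {diff:g}")
    return problems
```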

Believe me, catching those little issues early is a lifesaver.

Automating Without Sacrificing Reliability

Automation is essential, especially with large datasets. But I've also seen automation gone wrong – creating more problems than solutions. The key is to automate the right things. Batch processing with tools like dcm2niix is a perfect example. You can convert hundreds of files automatically while keeping tight control over crucial parameters and ensuring quality.

Collaboration Frameworks for Team Alignment

Research is a team sport. When multiple people handle data processing, consistency is paramount. Shared conversion scripts, standardized naming conventions, and detailed documentation become essential. I once worked on a project where inconsistent conversion settings between team members led to weeks of debugging. Learning from that, I now always emphasize clear guidelines from the get-go. It keeps everyone on the same page and makes troubleshooting so much easier.

Future-Proofing Your Workflows and Maintaining Data Integrity

Research evolves, and so should your workflow. Designing for flexibility is key. Think modular scripts—easily adapted to new imaging protocols—or using tools that handle a wide array of DICOM variations. And never compromise on data integrity. Thorough documentation of your entire conversion process—tools, parameters, everything—is vital for reproducibility. This isn't just best practice; it’s essential for satisfying reviewers and ensuring your research stands the test of time.

Think about handling updates to your conversion tools. What's your plan when a new version of dcm2niix is released? A solid update and testing strategy ensures you’re using the best tools without disrupting your workflow. Consider how your data storage and archiving interacts with your conversion process. As datasets grow, moving to cloud storage or a more sophisticated data management system might be necessary. Planning for these changes keeps your conversion workflow efficient and scalable.

Ready to take your medical imaging analysis to the next level with AI? Explore PYCAD's solutions today!