Understanding the Medical Imaging Software Landscape

Jumping into medical imaging software development is an exciting prospect, but it helps to first get a feel for the major forces at play. The opportunities are huge, thanks to a combination of new technology and real-world healthcare demands. Just think about the sheer number of diagnostic imaging procedures happening every single day across the globe—that number is always on the rise. At the same time, there's a serious and growing shortage of radiologists to interpret all these scans. This gap is a massive headache for healthcare organizations, which are actively looking for software that can help them keep up.

This isn't just a feeling; it's a powerful market trend. The global medical imaging software market was valued at USD 6.64 billion in 2022 and is expected to climb to USD 12.17 billion by 2031. That's a compound annual growth rate of nearly 7%, which shows steady investment and adoption. For anyone building software in this space, that’s a clear signal of a healthy and expanding field where you can build something truly valuable. You can dig deeper into these numbers in the full medical imaging software market report.
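As a quick sanity check on the growth figure above, the compound annual growth rate can be recomputed from the two endpoint values. This is a minimal sketch; the function name `cagr` is illustrative and does not come from the market report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: USD 6.64 billion (2022) to USD 12.17 billion (2031).
rate = cagr(6.64, 12.17, 2031 - 2022)
print(f"Implied CAGR: {rate:.2%}")
```

The result lands just under 7%, which is consistent with the "nearly 7%" quoted above.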

Identifying High-Impact Opportunities

So, where should you point your development efforts? The real success stories often come from teams that focus tightly on specific, frustrating problems within a clinician's daily routine. It’s not always about inventing a revolutionary diagnostic algorithm from scratch. Sometimes, the most helpful solutions are the ones that automate boring, manual tasks, giving clinicians more time to spend on the tough cases.

Think about these promising areas where software is already making a huge difference:

- Automated Measurement & Segmentation: Radiologists spend a lot of time manually measuring tumors or tracing the outlines of organs. An application that does this automatically and accurately can save a ton of time on each patient, which directly improves a department's efficiency. A great example is a tool that automatically measures lesion diameters according to RECIST criteria, something that's incredibly useful in oncology.

- Workflow Orchestration: Picture a tool that intelligently organizes a radiologist's to-do list, pushing urgent cases like potential strokes or pulmonary embolisms to the top based on a quick AI pass. This doesn't take the radiologist's job; it just acts like a smart assistant, making sure the most critical patients get seen faster.

- Triage and Prioritization: In a busy emergency room, every second counts. Software that can look at an incoming head CT scan and flag it for immediate review if it spots signs of a brain bleed can dramatically cut down diagnosis times. This is a perfect example of AI supporting, not replacing, clinical judgment.
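To make the worklist idea concrete, here is a minimal sketch of a priority queue that orders studies by an AI urgency score and falls back to arrival order for ties. The `Worklist` class, the `ai_urgency` score in the range 0 to 1, and the study IDs are all hypothetical; a real deployment would take its scores from a validated model and its ordering rules from the clinical team.

```python
import heapq
import itertools


class Worklist:
    """Orders studies by AI urgency (highest first), then by arrival order."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker: earlier arrivals first

    def add(self, study_id: str, ai_urgency: float) -> None:
        if not 0.0 <= ai_urgency <= 1.0:
            raise ValueError("ai_urgency must be between 0 and 1")
        # heapq is a min-heap, so negate the score to pop the most urgent first.
        heapq.heappush(self._heap, (-ai_urgency, next(self._arrival), study_id))

    def next_study(self):
        """Return the most urgent study ID, or None if the list is empty."""
        if not self._heap:
            return None
        _, _, study_id = heapq.heappop(self._heap)
        return study_id


worklist = Worklist()
worklist.add("routine-chest-xray", 0.05)
worklist.add("head-ct-possible-bleed", 0.97)
worklist.add("follow-up-knee-mri", 0.05)
print(worklist.next_study())  # the suspected bleed is read first
```

The radiologist still reads every study; the queue only changes the order in which they appear.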

The main idea here is to think beyond just the image analysis. Look at the entire lifecycle of an image, from the moment it's captured to when the final report is written. The most successful medical imaging software often targets the "in-between" steps where things get stuck. If you can truly understand these real-world clinical pain points, you can build a solution that isn't just technically clever but becomes a go-to tool for healthcare providers.

Designing Your Medical Imaging Software Architecture

Choosing the right architecture for your medical imaging software isn't just a technical decision; it's a foundational one. Once you commit to a specific path—whether it’s cloud-based, on-premise, or a hybrid of the two—pivoting later is both a headache and a major expense. These early choices dictate everything that follows: how fast your application processes scans, what your storage costs will look like, and whether you can meet stringent regulatory demands. A solid architecture can gracefully handle massive image datasets with clinical-grade performance, while a weak one will inevitably falter.

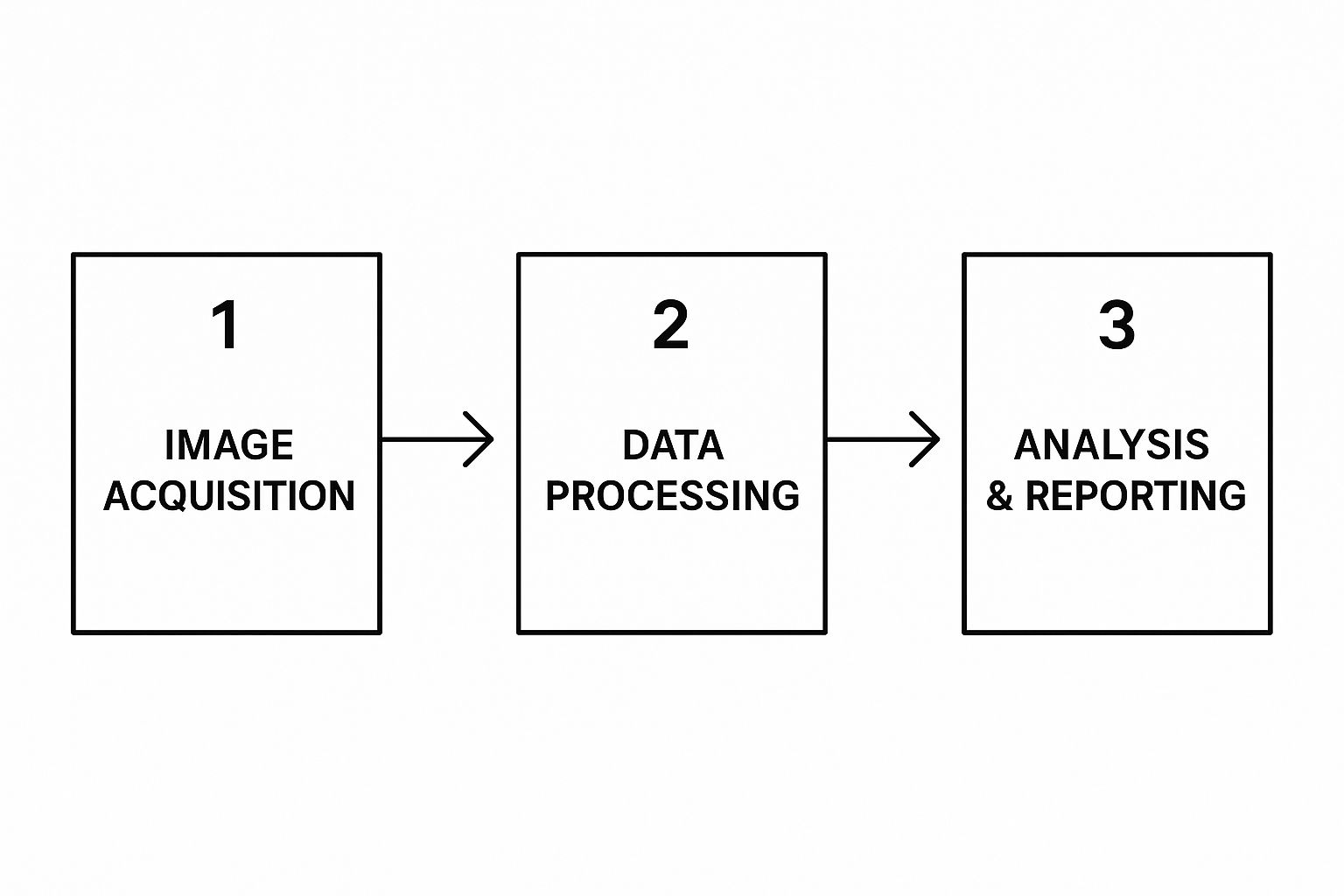

Think of the data flow as a dynamic pipeline. It starts with image acquisition from modalities, moves to the processing stage where your software works its magic, and ends with analysis and reporting for the clinician.

This journey underscores that your system isn't a static box. It needs to be fast, dependable, and secure at every single point.

Key Architectural Decisions

When you're sketching out the blueprint for your software, a few big questions will shape the entire project. Your answers will define how the system operates, scales, and fits into the intricate healthcare ecosystem.

Cloud vs. On-Premise vs. Hybrid: Where Will Your Software Live?

A pure cloud architecture offers fantastic scalability and lets you avoid heavy upfront hardware costs, which is a huge plus for startups. But it's not always that simple. Many hospitals have strict data governance policies that require patient data to stay on their local network. For those customers, an on-premise deployment is a necessity.

Often, a hybrid approach strikes the perfect balance. You can keep sensitive patient data securely on-site while offloading computationally intensive tasks, like training your AI models, to the cloud. This gives you the security of on-premise with the power of the cloud.
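One way to picture the hybrid split is as a routing rule applied to each job. The sketch below is an assumption-laden illustration, not a compliance recipe: the `Job` fields, the `Target` names, and the rule itself are hypothetical, and a real policy would come from the hospital's data governance requirements.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_PREMISE = "on_premise"
    CLOUD = "cloud"


@dataclass(frozen=True)
class Job:
    name: str
    contains_phi: bool       # True if the payload still carries patient identifiers
    latency_sensitive: bool  # True if the result is needed during the clinical read


def route(job: Job) -> Target:
    """Keep identifiable or time-critical work local; send the rest to the cloud."""
    if job.contains_phi or job.latency_sensitive:
        return Target.ON_PREMISE
    return Target.CLOUD


print(route(Job("stroke-triage", contains_phi=True, latency_sensitive=True)))
print(route(Job("model-training-on-deidentified-set", contains_phi=False, latency_sensitive=False)))
```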

To help you weigh these options, here's a breakdown of how architectural components differ across setups.

Medical Imaging Software Architecture Components Comparison

Comparison of essential architectural components and their implementation options for medical imaging software

| Component | Traditional Approach | Modern Cloud-Based | Hybrid Solution | Key Considerations |
|---|---|---|---|---|
| Data Storage | On-premise servers and local PACS. Full control over hardware. | Cloud storage services like Amazon S3 or Google Cloud Storage. Pay-as-you-go. | Sensitive data on-premise, anonymized or large datasets in the cloud for processing. | Data residency laws (HIPAA, GDPR), long-term archival costs, and retrieval speed. |
| Image Processing | Local workstations or dedicated servers. Limited by local hardware capacity. | Scalable cloud compute instances (EC2, Azure VMs). Can scale up or down on demand. | On-premise for real-time analysis, cloud for batch processing or AI model training. | Latency requirements for clinical decisions, computational cost, and data transfer bandwidth. |
| DICOM Handling | Local DICOM listener software connected directly to the hospital network. | Cloud-native DICOMweb services or APIs. Requires secure connection to the facility. | A local DICOM gateway that securely forwards data to a cloud processing pipeline. | Interoperability with various vendor PACS, handling of non-standard DICOM tags, security. |
| Deployment & Maintenance | Requires physical access and on-site IT staff for updates and troubleshooting. | Managed through cloud consoles. CI/CD pipelines enable automated updates. | Requires management of both on-premise hardware and cloud services, adding complexity. | Upfront hardware investment vs. ongoing operational expenses (OpEx), team skill set, update frequency. |

This table shows there's no single "best" answer. The ideal choice depends entirely on your specific use case, your target customer's environment, and your business model.

Data Handling and DICOM Integration

Your architecture must be fluent in DICOM, the universal language of medical imaging. This involves more than just reading a file. You need a robust DICOM listener that can reliably receive images from scanners and a system that can communicate seamlessly with existing Picture Archiving and Communication Systems (PACS). A rookie mistake is to assume all DICOM implementations are the same. In reality, you'll find countless variations from different equipment vendors. Your architecture needs the flexibility to parse these inconsistencies without dropping critical metadata.

Processing Pipeline Design

How will your application actually process the images? The answer depends entirely on the clinical need. For a real-time AI tool designed to flag potential strokes from CT scans, you need an architecture built for ultra-low latency. This might lead you to edge computing, where processing happens right next to the imaging device.

On the other hand, for a research application that crunches through massive archives of historical data to find patterns, a distributed processing framework that can scale across many servers is a much better choice. The trick is to align your technical design with the clinical workflow. The most successful projects get this balance right from the very beginning, ensuring the results are delivered in a timeframe that is genuinely useful to a physician.

Mastering DICOM Standards and Image Processing

If you're building software for medical imaging, getting a firm grip on the DICOM standard is not just a good idea—it's absolutely essential. Your application needs to speak this language fluently to be of any use in a real clinical environment. Think of DICOM as the universal grammar for medical images. It's not just about the picture itself; it's about the rich metadata that gives it context, like patient details, the machine used, and the specific imaging parameters. Nailing this is the bedrock of interoperability.

One of the first challenges you'll hit is the sheer variety within DICOM files. A CT scan from one machine might have different tags or encoding compared to an MRI from another. Your software has to be tough enough to manage these differences without crashing or, worse, losing patient data. A classic mistake is building an app around a "perfect" set of sample images, only to see it fail when it meets real-world data from a dozen different hospitals.
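A tolerant parser is one way to cope with that variety. The sketch below validates a few required attributes and treats the rest as optional, so a missing vendor-specific tag does not crash the pipeline. Which keywords count as required is an illustrative choice here, and `load_header` assumes the third-party pydicom library is installed; the demo uses a simple stand-in object so the logic can be run without a real DICOM file.

```python
from types import SimpleNamespace

# Which attributes are mandatory is an assumption made for this example.
REQUIRED_KEYWORDS = ("PatientID", "StudyInstanceUID", "Modality")
OPTIONAL_KEYWORDS = ("Manufacturer", "SliceThickness", "PixelSpacing")


def load_header(path):
    """Read a DICOM header without loading pixel data (requires pydicom)."""
    import pydicom  # third-party; imported lazily so the rest runs without it

    return pydicom.dcmread(path, stop_before_pixels=True)


def extract_metadata(ds):
    """Return a dict of metadata, failing loudly only on required attributes."""
    missing = [kw for kw in REQUIRED_KEYWORDS if getattr(ds, kw, None) in (None, "")]
    if missing:
        raise ValueError(f"missing required DICOM attributes: {', '.join(missing)}")

    metadata = {kw: getattr(ds, kw) for kw in REQUIRED_KEYWORDS}
    for kw in OPTIONAL_KEYWORDS:
        # Optional attributes vary by vendor; record None instead of failing.
        metadata[kw] = getattr(ds, kw, None)
    return metadata


# Stand-in for a parsed dataset; a pydicom Dataset exposes the same attribute names.
demo = SimpleNamespace(PatientID="ANON-001", StudyInstanceUID="1.2.3", Modality="CT")
print(extract_metadata(demo))
```

In practice you would call `extract_metadata(load_header(path))` and log any `ValueError` together with the originating file, so rejected studies can be reviewed rather than silently dropped.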

Essential DICOM and Processing Practices

To create a truly dependable system, you need to concentrate on a few key areas. After working on over 10 successful projects here at PYCAD, we've learned that a solid approach to DICOM and image processing makes all the difference.

- Robust DICOM Parsing: Don't reinvent the wheel. Use proven libraries like pydicom for Python or dcm4che for Java. Your parser should be designed to handle missing tags gracefully and validate critical information to keep the data sound.

- Metadata Management: Whatever you do, don't discard the metadata. Set up a system to map and store all DICOM tags, including any custom ones. This information is a lifesaver for traceability and can be invaluable when you're trying to debug a problem down the line.

- Image Processing for Quality: The clinical value of an image hangs on its quality. You'll need to implement processing techniques that preserve diagnostic integrity. This often includes:

  - Filtering: Applying algorithms like Gaussian or median filters can significantly reduce noise, especially in low-dose CT scans.

  - Enhancement: Using histogram equalization to boost contrast in X-rays can make subtle fractures much easier for a clinician to spot.

  - Transformation: You must be able to resize or rotate images accurately without creating artifacts that could mislead a diagnosis.
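To show what the filtering step does, here is a minimal pure-Python sketch of a 3x3 median filter that replicates edge pixels at the borders. It is written for readability only; production code would more likely use an array library, and the function name and the tiny test image are illustrative.

```python
from statistics import median


def median_filter_3x3(image):
    """Apply a 3x3 median filter to a 2D list of pixel values.

    Border pixels are handled by clamping indices, i.e. replicating the edge.
    """
    rows, cols = len(image), len(image[0])

    def clamp(index, size):
        return max(0, min(index, size - 1))

    filtered = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = [
                image[clamp(r + dr, rows)][clamp(c + dc, cols)]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
            ]
            out_row.append(median(window))
        filtered.append(out_row)
    return filtered


# A flat region with a single noisy pixel in the middle.
noisy = [
    [10, 10, 10],
    [10, 255, 10],
    [10, 10, 10],
]
print(median_filter_3x3(noisy))
```

The isolated spike is replaced by the surrounding value, which is why median filtering suits impulse-like noise. The same property means it can erase genuinely small structures, so any filter settings need checking against diagnostic requirements.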

The official DICOM standard website is the starting point for any serious development work. It’s where you’ll find all the official documentation.

This site isn't just a technical manual; it’s a living resource that outlines how the standard is evolving with technology. Staying current is part of the job. For developers, this means building software that is not only compliant today but also flexible enough for tomorrow’s updates. By deeply understanding and correctly implementing these standards, your application can become a trusted and reliable tool in any clinical workflow.

Building AI Features That Clinicians Actually Use

When we talk about artificial intelligence in medical imaging, it's not about creating a "digital doctor" to take over. The most valuable AI tools I've seen act more like incredibly capable assistants, giving clinicians a boost to deliver better, faster patient care. The aim is to build features that fit smoothly into a hectic clinical workflow, solving genuine problems instead of just being a cool piece of tech. Success in medical imaging software development really comes down to understanding where AI can offer real help.

This often means targeting tasks that are repetitive, time-consuming, or susceptible to human error when fatigue sets in. For instance, automated image segmentation can save a radiologist hours of drawing manual outlines, letting them focus on the complex diagnosis. Similarly, an AI-powered triage system can flag critical cases in a busy emergency department, making sure patients with severe conditions get seen right away. It's all about enhancing clinical judgment, not trying to replace it.

From Good Idea to Trusted Tool

Getting a clinician to trust an AI is a major hurdle. That journey begins by picking the right problem to solve and then training a model that can cope with the unpredictability of real-world medical data.

- Training with Limited Data: Getting your hands on large, high-quality medical datasets is a frequent challenge. Smart development teams navigate this by using techniques like data augmentation—artificially expanding the training set by rotating or slightly changing existing images. Another powerful method is transfer learning, where you take a model already trained on a massive general dataset and fine-tune it with your smaller, specialized medical one.

- Focus on Clinical Impact: The features that actually get used are the ones that clearly improve patient outcomes or make workflows more efficient. A great example of this is the development of an AI medical assistant for differential diagnosis, which directly helps clinicians as they make decisions.

- Managing Edge Cases: Medical imaging is filled with anomalies and unexpected findings. Your AI must be built to handle these "edge cases" without giving dangerously wrong results. This means you have to rigorously test and validate it against diverse and challenging datasets.
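The data augmentation idea mentioned above can be sketched with a few geometric transforms. This is a simplified illustration on 2D lists; the function names are hypothetical, and real pipelines usually rely on an imaging or deep learning library. The key point it shows is that an image and its segmentation mask must receive the same transform.

```python
def rotate90(image):
    """Rotate a 2D list of pixels 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def flip_horizontal(image):
    """Mirror a 2D list of pixels left to right."""
    return [row[::-1] for row in image]


def augment(image, mask):
    """Return (image, mask) pairs: the original, three rotations, and one flip."""
    pairs = [(image, mask)]
    rotated_image, rotated_mask = image, mask
    for _ in range(3):
        # Apply the identical transform to the image and its mask.
        rotated_image, rotated_mask = rotate90(rotated_image), rotate90(rotated_mask)
        pairs.append((rotated_image, rotated_mask))
    # Caution: flips swap left and right, which can be clinically meaningful.
    pairs.append((flip_horizontal(image), flip_horizontal(mask)))
    return pairs


image = [[1, 2], [3, 4]]
mask = [[0, 1], [0, 0]]
for aug_image, aug_mask in augment(image, mask):
    print(aug_image, aug_mask)
```

Whether a given transform is safe depends on the anatomy and task, so the set of augmentations is worth reviewing with a clinician before training.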

The Growing Need for Intelligent Analysis

The demand for these intelligent features is being driven by obvious market needs. Diagnostic workloads are increasing, while there's a worldwide shortage of trained radiologists. This situation has created a perfect environment for new solutions to emerge. The medical image analysis software market shows this trend clearly; it's projected to hit USD 4.3 billion by 2025 and is expected to grow at a steady CAGR of 8% through 2029.

This growth isn't just a number; it points to a critical need for tools that make analysis more efficient and accurate. You can discover more insights about the market drivers on PharmiWeb.com. By concentrating your development efforts on AI features that address these urgent clinical demands, you can build software that becomes an essential part of the modern healthcare system.

Navigating FDA Regulations Without Losing Your Mind

Dealing with the FDA often feels like the most intimidating part of medical imaging software development, but it doesn't have to be a complete headache. The secret is to stop thinking of regulatory compliance as a final hurdle. Instead, weave it into your development process from the very beginning. Tackling it early saves you from expensive and frustrating redesigns down the road. You need to figure out where your software fits within the FDA's Software as a Medical Device (SaMD) framework, as this will shape your entire regulatory journey.

Determining Your Regulatory Pathway

First things first, you need to classify your software. What does it actually do? Does it simply provide information, actively guide clinical decisions, or straight-up diagnose a condition? The answer to this question changes everything about your regulatory obligations.

- Inform Clinical Management: This type of software gives clinicians data but stops short of making a diagnostic claim. A good example is a tool that measures a tumor's size using RECIST criteria. It gives the oncologist objective data, but the doctor retains full control over the diagnosis. These tools typically fall into a lower-risk category.

- Drive Clinical Management: Here, the software offers recommendations intended to be used for treatment or diagnosis. An AI that analyzes a mammogram and flags a specific lesion as "high-risk for malignancy" fits this description. The stakes are higher, and so is the regulatory scrutiny.

- Diagnose or Treat: This is the highest-risk category, reserved for software that makes an autonomous diagnosis or directly guides treatment. An algorithm that can definitively diagnose diabetic retinopathy from a retinal scan without needing a human to confirm it would face the most rigorous review process.

Getting this classification right is crucial because it determines whether you'll pursue a 510(k) premarket notification, a De Novo classification request, or a full Premarket Approval (PMA). The 510(k) pathway is the most common route for new AI tools. This involves proving your device is "substantially equivalent" to an existing, legally marketed device, often called a "predicate" device. A successful 510(k) submission depends on detailed documentation that clearly shows how your software's performance stacks up against this predicate.

To give you a clearer picture, here’s a breakdown of how the FDA classifies software and what each path entails.

FDA Software Classification and Requirements Matrix

| Software Type | FDA Class | Regulatory Pathway | Key Requirements | Timeline |
|---|---|---|---|---|
| Inform Clinical Management (e.g., tumor measurement tool) | Class I / Low-Risk Class II | 510(k) Exempt or Traditional 510(k) | General Controls, QMS, Labeling, Registration, potential for performance testing vs. predicate. | 3-6 months (for 510(k)) |
| Drive Clinical Management (e.g., AI flagging high-risk areas) | Class II | Traditional 510(k) or De Novo | General & Special Controls, rigorous performance data (clinical validation), cybersecurity, QMS (ISO 13485). | 6-12+ months |
| Diagnose or Treat (e.g., autonomous diagnostic AI) | Class III | Premarket Approval (PMA) | Most stringent review, extensive clinical trial data proving safety and effectiveness, full QMS. | 1-3+ years |

This table shows that as the software's clinical impact increases, so do the regulatory requirements and the time it takes to get to market. Planning for the correct pathway from the start is essential for a smooth process.

Quality Management and Documentation

A robust Quality Management System (QMS) is absolutely essential. This is the documented system that governs how you design, build, test, and maintain your software to ensure it's both safe and effective. This isn't just about passing an audit; a well-implemented QMS, like one that complies with ISO 13485, genuinely improves your product. It means keeping meticulous records of everything from code reviews and bug tracking to how you manage user feedback.

As you prepare your FDA submission, remember that every single claim you make about your software's performance must be supported by solid, verifiable evidence. This translates to well-designed validation studies, transparent performance metrics, and crystal-clear reporting. Start documenting everything from day one—trust me, your future self will thank you when it's time to assemble the final submission package.
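Transparent performance metrics usually start with sensitivity and specificity measured against a reference standard. The sketch below computes both from binary labels. It is a minimal example with made-up data, and which metrics a particular submission needs is something to confirm with regulatory experts rather than infer from this snippet.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = finding present)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth and pred:
            tp += 1
        elif truth and not pred:
            fn += 1
        elif not truth and pred:
            fp += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) against the reference labels."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative reference case")
    return tp / (tp + fn), tn / (tn + fp)


# Made-up example: reference reads vs. software output for eight studies.
reference = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 1, 0, 0, 0, 1, 0, 1]
sens, spec = sensitivity_specificity(reference, predicted)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```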

Real-World Testing and Clinical Integration

Taking your software from the controlled quiet of the development lab into a live clinical setting is a monumental leap. This isn't just about squashing the last few bugs; it's about proving your application is safe, effective, and reliable when a patient's health is literally on the screen. To get this right, you need a layered testing plan that examines everything from raw performance to how the software feels in a clinician's hands during a hectic shift.

A core part of this transition is robust validation. It’s a shift in mindset from just "checking boxes" to building genuine quality and trust into your product. If you're looking for more on this topic, it's worth exploring the critical importance of software testing as a strategic function, not just a final step.

From the Lab to the Clinic

Your testing strategy needs to be comprehensive, acting as a series of gates your software must pass through before it’s ready for prime time.

- Performance Testing with Real Data: The clean, predictable test cases you use in the lab are nothing like what you'll find in the real world. Clinical DICOM data is messy, inconsistent, and will throw curveballs you never anticipated. You have to stress-test your application with a wide variety of images from different scanners, hospitals, and patient populations. This is how you uncover the performance bottlenecks and compatibility headaches that a sanitized environment will never reveal.

- Integration Testing with Hospital Systems: Your software is just one piece of a much larger puzzle. It needs to communicate flawlessly with existing Picture Archiving and Communication Systems (PACS), Electronic Health Records (EHR), and other hospital IT systems. This involves intense testing of data exchange protocols to make sure your app can pull and push information without corrupting data or causing chaos in established clinical workflows.

- User Acceptance Testing (UAT) with Clinicians: Honestly, this might be the most crucial part. You have to put your software into the hands of actual radiologists, technologists, and physicians. Watch them use it. Is the interface intuitive? Does it genuinely make their job easier, or does it add frustrating clicks to an already long day? Their feedback is pure gold—it’s what transforms a technically sound tool into something a clinician will actually embrace and depend on.
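For the performance testing step with real data, a simple batch harness helps: run your processing function over every input, record failures instead of stopping at the first one, and summarize them by error type. The sketch below is illustrative; `run_batch`, `summarize_failures`, and the stand-in `fake_parse` function are hypothetical names, and in practice `process` would be your actual DICOM loading or inference call.

```python
from collections import Counter


def run_batch(process, inputs):
    """Run `process` on each input, collecting results and failures separately."""
    successes, failures = [], []
    for item in inputs:
        try:
            successes.append((item, process(item)))
        except Exception as exc:  # deliberately broad: record every failure mode
            failures.append((item, type(exc).__name__, str(exc)))
    return successes, failures


def summarize_failures(failures):
    """Count failures by exception type to show where the software is brittle."""
    return Counter(kind for _, kind, _ in failures)


def fake_parse(record):
    """Stand-in for a real parser; fails on missing or empty modality."""
    return record["Modality"].upper()


records = [{"Modality": "ct"}, {}, {"Modality": None}]
ok, bad = run_batch(fake_parse, records)
print(len(ok), "succeeded;", dict(summarize_failures(bad)))
```

Grouping failures by type makes it easier to see whether a site's data breaks one assumption repeatedly or many assumptions once each.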

Designing Effective Clinical Validation

To truly prove your software’s real-world value, you'll often need a structured clinical validation study, particularly for regulated medical devices. This means recruiting clinical sites and navigating the logistical challenges of a multi-site trial. The aim is to gather objective data showing that your software performs as promised and delivers a real benefit.

This kind of validation effort is essential in a booming market. The medical imaging field, valued at roughly USD 41.33 billion in 2024, is expected to reach USD 62.48 billion by 2033. This growth is driven by major players investing heavily in integrating tools just like yours into daily practice. You can read the full research about medical imaging market trends to get a better sense of the landscape.

In the end, successful integration is built on a foundation of trust. You earn that trust through painstaking testing, a deep respect for clinical needs, and a solid plan for post-deployment monitoring to catch any issues before they can affect patient care.

Key Takeaways

Developing medical imaging software is a serious undertaking. It requires careful planning, technical precision, and a genuine understanding of the clinical world it will live in. Success doesn't happen by chance; it's built on smart decisions from the very beginning. We've covered the whole journey, from system design and DICOM to FDA rules and hospital deployment. Let's boil it all down to the essentials.

Your Blueprint for Success

Creating software that doctors and technicians will actually use and trust comes down to a few fundamental ideas. Always keep these in mind during your planning and development.

- Clinician-Centric Design: You can't build useful tools in isolation. Your main objective is to fix a real, specific problem for a healthcare professional. You need to talk to them early and keep talking to them. The software has to slide into their existing workflow, not create a new one.

- Regulatory Compliance is Not an Afterthought: Think of FDA and other regulatory hoops as part of the initial design, not a final checklist. Integrating quality management and thorough documentation into your daily work will save you from expensive fixes and long delays later on.

- Data is Everything: The performance of your software, particularly any AI component, is directly linked to the quality and variety of your data. Make robust data handling a top priority, from securely parsing DICOM files to finding realistic and diverse datasets for testing.

Common Pitfalls and How to Avoid Them

Even the most well-intentioned projects can hit a snag. Here are a few common traps to look out for on your journey.

| Pitfall | The Mistake | The Solution |
|---|---|---|
| Tech-First Mentality | Getting excited about a specific technology and then trying to find a problem it can solve. | Always start with a clinical need. Spend a day shadowing a radiologist or have a conversation with the hospital's IT team to uncover real frustrations. |
| Underestimating Integration | Thinking your software will just "plug and play" with existing PACS and EHR systems. | Plan for integration from day one. Build your software with flexible, standards-based interfaces and be ready to handle the unique quirks of different vendors. |
| Ignoring Usability | Building a powerful tool that's so complicated a busy clinician can't figure out how to use it. | Involve your end-users in User Acceptance Testing (UAT) and continuously improve based on what they tell you. A simple tool that gets used is far better than a complex one that collects dust. |

At the end of the day, creating effective medical imaging software is about earning trust—from clinicians, patients, and regulators. By focusing on real-world problems and holding yourself to high standards, you can build a tool that makes a genuine impact.

If you’re ready to bring your vision to life, our team at PYCAD has the experience to help you at every stage. We’ve completed over 10 successful projects, handling everything from data annotation to deploying the final model. Let's build the future of healthcare technology together.