Ever wondered how an AI can spot the difference between a cat and a dog, or how a self-driving car sees the road on a rainy night? The real secret isn't just the algorithm; it's a crucial, often overlooked, first step called image preprocessing. Think of it as the essential prep work that turns raw, messy images into clean, standardized data that an AI can actually understand.

What Exactly Is Image Preprocessing?

Let’s use an analogy. Imagine a master chef preparing a gourmet meal. They wouldn't just throw raw ingredients into a pan. They meticulously wash the vegetables, trim the fat, and chop everything to a uniform size. A raw image from a camera is a lot like those unprepared ingredients—it can be full of digital 'noise,' plagued by bad lighting, or even warped from an odd angle.

Without that careful prep, the final dish would be a disaster. The same goes for AI. It needs clean, consistent data to work properly. Image preprocessing is that preparatory stage where raw visual data gets refined, setting the stage for any successful computer vision project.

Why It's the Most Important First Step

If you skip this foundational work, you’re setting even the most powerful AI up for failure. It's like asking someone to read a book with blurry text and coffee stains all over the pages. They might grasp the general story, but they'll miss critical details and make mistakes. Image preprocessing is how we clean those pages and sharpen the text for the machine.

The main goals here are pretty straightforward:

- Standardization: Making sure every image in a dataset is a consistent size and color format.

- Noise Reduction: Getting rid of the random speckles and graininess that can hide important details.

- Feature Enhancement: Making key features, like edges or specific textures, stand out so the algorithm can easily find them.

It’s Bigger Than You Think

While the core ideas of digital image processing have been around since the 1960s, its role in modern technology is exploding. The global market for this field was valued at around $10.31 billion in 2025 and is on track to more than double to $23.74 billion by 2029. This isn't just some niche academic field; it's the engine behind medical diagnostics, autonomous vehicles, and so much more. You can dig into the specifics of this growth in a full market research report from ResearchAndMarkets.com.

So, what is image preprocessing at its core? It's the disciplined art of cleaning, correcting, and standardizing digital images to unlock the valuable information hidden inside. It’s less about making pictures look pretty and more about making them computationally useful.

By turning a chaotic jumble of pixels into structured, meaningful data, we empower algorithms to "see" and interpret the world with a clarity that would otherwise be impossible.

The Essential Toolkit for Preprocessing Images


So, what exactly happens during image preprocessing? Let's open up the digital toolkit and look at the specific methods used to get raw images ready for a machine learning model. Each technique is like a specialized instrument, designed to fix a particular problem and prime the image for intelligent analysis.

Think of it this way: before a chef can cook a meal, they have to wash, peel, and chop the ingredients. Preprocessing is the "kitchen prep" for images. The goal is to create a clean, consistent, and optimized dataset where every image speaks the same language, making it easier for an AI to understand.

Geometric Transformations for Uniformity

One of the first hurdles in any computer vision project is that real-world images come in all shapes, sizes, and orientations. Geometric transformations are the tools we use to bring a sense of order to this chaos.

Imagine you're putting together a family photo album. You wouldn't just slap in photos of all different sizes and angles, right? You’d probably crop and align them for a clean, consistent look. That's exactly what these transformations do for a machine.

- Resizing: This is the most basic but crucial step. Most AI models expect inputs of a fixed size. So, whether you have a massive high-res photo or a tiny thumbnail, every image gets scaled to a standard dimension, like 224×224 pixels.

- Rotating and Flipping: Sometimes, an object just isn't facing the right way. A medical scan might be upside down, or a security camera photo could be tilted. Rotation fixes this. Flipping an image horizontally is another common trick, often used in a process called data augmentation to create more training examples from the images you already have.

- Cropping: This is all about focus. Cropping lets us snip out a specific part of an image, removing distracting backgrounds and zeroing in on the object of interest. It's the digital equivalent of using a magnifying glass.

These geometric tweaks ensure that when an AI model analyzes an image, it isn't thrown off by variations in size or orientation. It can get straight to work on the features that actually matter.
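
To make the resizing, cropping, flipping, and rotating steps above concrete, here is a minimal sketch in plain NumPy. The function names are made up for illustration, and the resize uses simple nearest-neighbor sampling; libraries such as OpenCV or Pillow offer faster and smoother interpolation, so treat this as a picture of the idea rather than production code.

```python
import numpy as np

def resize_nearest(image, out_h, out_w):
    """Resize an H x W (x C) array with nearest-neighbor sampling."""
    in_h, in_w = image.shape[:2]
    # Map every output row/column back to a source row/column.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return image[rows[:, None], cols]

def center_crop(image, crop_h, crop_w):
    """Cut a crop_h x crop_w window out of the middle of the image."""
    in_h, in_w = image.shape[:2]
    top = (in_h - crop_h) // 2
    left = (in_w - crop_w) // 2
    return image[top:top + crop_h, left:left + crop_w]

def flip_horizontal(image):
    """Mirror the image left to right."""
    return image[:, ::-1]

def rotate_90(image, turns=1):
    """Rotate counterclockwise in 90-degree steps."""
    return np.rot90(image, k=turns)

# A stand-in for a 640x480 RGB photo (random pixels, purely illustrative).
photo = np.random.default_rng(0).integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
model_input = resize_nearest(center_crop(photo, 480, 480), 224, 224)
print(model_input.shape)  # (224, 224, 3)
```

Cropping to a square before resizing is one way to avoid stretching the subject; whether that is the right call depends on where the object of interest sits in your images.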

Photometric Corrections for Clarity

Once the geometry is standardized, the next step is to fix issues with color and lighting. Photometric corrections are all about enhancing an image's visual quality, making hidden details pop and ensuring consistent lighting across the entire dataset.

This is a bit like adjusting the settings on your TV. If a picture is too dark, too bright, or completely washed out, it’s tough to see what’s going on. By fiddling with the contrast and brightness, you can make the scene clear and vibrant.

For an algorithm, this isn't about making an image look "prettier." It's about adjusting the pixel values to make important features more mathematically distinct and easier to detect.

A great example is Histogram Equalization. This technique automatically boosts an image's contrast by spreading out the most common intensity values. For a low-contrast medical image, like a faint X-ray, this simple adjustment can make a subtle fracture or a small tissue abnormality stand out, making it far easier for a diagnostic AI to spot.
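
Here is roughly what histogram equalization does under the hood, sketched with NumPy for an 8-bit grayscale image. The function name and the synthetic "faint" test image are assumptions for illustration only. The core idea is to build a lookup table from the cumulative histogram so that crowded intensity levels get pushed apart.

```python
import numpy as np

def equalize_histogram(gray):
    """Equalize a 2-D uint8 image using its cumulative histogram."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]      # cumulative count at the darkest level present
    total = gray.size
    if total == cdf_min:           # only one intensity level: nothing to stretch
        return gray.copy()
    # Remap levels so the cumulative distribution becomes roughly linear.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]

# Synthetic low-contrast image: every pixel sits between 100 and 140.
faint = np.random.default_rng(0).integers(100, 141, size=(64, 64), dtype=np.uint8)
enhanced = equalize_histogram(faint)
print(faint.min(), faint.max(), "->", enhanced.min(), enhanced.max())
```

After equalization the darkest level present maps to 0 and the brightest to 255, so the narrow band of grays now spans the full range.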

Another common method is Grayscale Conversion. Full-color images contain a massive amount of data across red, green, and blue channels. For many tasks, like reading text or basic object detection, all that color information is just noise. Converting an image to grayscale simplifies the data down to a single channel of light intensity, which can dramatically speed up processing and reduce the computational workload.
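
As a rough sketch, grayscale conversion is just a weighted sum of the three color channels. The weights below are the widely used ITU-R BT.601 luma coefficients; the function name is hypothetical, and the code assumes the channels are ordered red, green, blue (some libraries, OpenCV included, load images as blue, green, red instead).

```python
import numpy as np

# ITU-R BT.601 luma weights for red, green, and blue.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])

def to_grayscale(rgb):
    """Collapse an H x W x 3 uint8 RGB image into a single H x W channel."""
    gray = rgb.astype(np.float64) @ LUMA_WEIGHTS
    return np.round(gray).astype(np.uint8)

pixels = np.array([[[255, 0, 0], [0, 255, 0], [0, 0, 255]]], dtype=np.uint8)
print(to_grayscale(pixels))  # [[ 76 150  29]]
```

Green gets the largest weight because the eye is most sensitive to it, which is why a pure green pixel ends up brighter in grayscale than a pure red or blue one. The output holds one value per pixel instead of three.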

To help you get a clearer picture of these techniques and their roles, here’s a quick summary table.

Common Image Preprocessing Techniques and Their Purpose

| Technique | Primary Goal | Example Use Case |
|---|---|---|
| Resizing | Create uniform input dimensions for the model. | Scaling all product photos to 512×512 pixels for an e-commerce recommendation engine. |
| Rotation/Flipping | Correct image orientation or augment the dataset. | Rotating satellite images to a north-up orientation for mapping analysis. |
| Cropping | Isolate the region of interest and remove noise. | Cropping out just the license plate from a photo of a car for an automated reader system. |
| Histogram Equalization | Enhance contrast in low-light or washed-out images. | Making faint tumors in an MRI scan more visible for an AI-powered diagnostic tool. |
| Grayscale Conversion | Simplify the image and reduce computational load. | Converting scanned documents to grayscale for an Optical Character Recognition (OCR) system. |
| Noise Reduction | Remove random pixel variations (e.g., salt-and-pepper noise). | Cleaning up grainy images from a low-light security camera before facial recognition. |

As you can see, each tool has a very specific job. By combining them, we build a powerful pipeline that turns messy raw data into something a machine can reliably interpret.

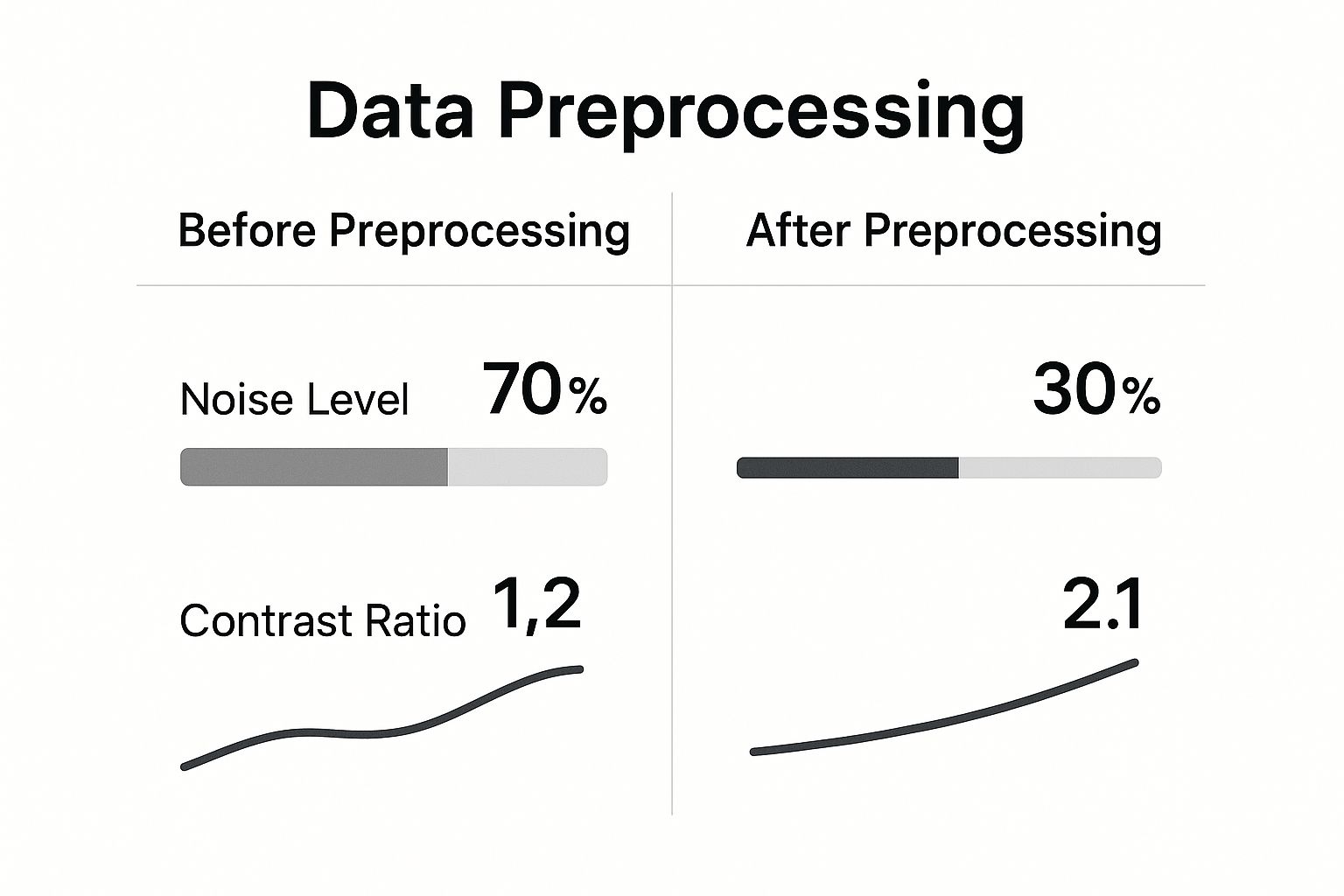

This image perfectly illustrates the "before and after." The original is murky and unreliable, but after preprocessing, it's clean and high-contrast, ready for precise analysis. By applying these geometric and photometric tools, we lay the critical groundwork for any successful computer vision system.

Advanced Techniques for AI and Machine Learning

While basic steps like resizing and color correction get your images in the door, training a truly powerful AI model requires a more sophisticated approach. When we move into advanced preprocessing, the goal isn't just to clean up images; it's to strategically enhance them to boost the model's ability to learn and make accurate predictions.

Think of these methods as a specialized training camp for your data. They get your images into peak condition, ensuring the AI learns from the most robust and informative visual cues possible.

Sharpening Focus with Noise Reduction

Let's face it: raw digital images are rarely perfect. They almost always have random specks of odd color or brightness, a phenomenon we call digital noise. You might recognize it as a grainy texture, especially in photos taken in low light. For an AI model, this noise is just a distraction, muddying the waters and hiding the very features it’s trying to learn.

Noise reduction filters are like digital-age stain removers, carefully cleaning up an image without damaging the important details. Two workhorses in this area are the Gaussian and Median filters.

- Gaussian Filter: This filter smooths an image by replacing each pixel with a weighted average of its neighborhood, with weights that follow a bell curve so nearby pixels count more than distant ones. It's great at blurring out minor, high-frequency noise while keeping the larger features intact.

- Median Filter: Instead of averaging, this filter looks at a pixel's neighbors and replaces it with the median value. This trick is exceptionally good at zapping "salt-and-pepper" noise—those jarring black and white dots—without blurring the crisp edges of objects.

By applying these filters, we help the AI focus on the real subject matter, not the random digital static.
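
The two filters described above can be sketched in a few lines of NumPy. This is an illustrative, unoptimized version with made-up function names; it assumes a 2-D grayscale image and an odd window size, and it pads the border by repeating edge pixels, which is only one of several reasonable choices.

```python
import numpy as np

def _neighborhoods(gray, size):
    """Stack every size x size neighborhood along a new last axis."""
    pad = size // 2
    padded = np.pad(gray, pad, mode="edge")
    h, w = gray.shape
    shifted = [padded[dy:dy + h, dx:dx + w]
               for dy in range(size) for dx in range(size)]
    return np.stack(shifted, axis=-1)

def median_filter(gray, size=3):
    """Replace each pixel with the median of its neighborhood."""
    return np.median(_neighborhoods(gray, size), axis=-1).astype(gray.dtype)

def gaussian_kernel(size=5, sigma=1.0):
    """Bell-curve weights that sum to 1."""
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()

def gaussian_filter(gray, size=5, sigma=1.0):
    """Replace each pixel with a Gaussian-weighted average of its neighborhood."""
    weights = gaussian_kernel(size, sigma).ravel()
    blurred = _neighborhoods(gray.astype(np.float64), size) @ weights
    return np.round(blurred).astype(gray.dtype)

# A flat gray image with one "salt" and one "pepper" pixel.
noisy = np.full((16, 16), 128, dtype=np.uint8)
noisy[5, 5], noisy[10, 12] = 255, 0
print(median_filter(noisy)[5, 5], gaussian_filter(noisy)[5, 5])
```

On this toy image the median filter removes the isolated outliers entirely, while the Gaussian filter only softens them by spreading their value over the neighbors. That is the practical difference between the two.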

Defining Shapes with Edge Detection

Before an AI can identify a car, it needs to understand what a "car shape" is. Edge detection algorithms are built for exactly this purpose. They take a full-color image and boil it down to a high-contrast outline, almost like creating a coloring book page that highlights an object's silhouette.

How does it work? These algorithms scan the image for areas where brightness changes suddenly, which usually signals the edge of an object (though strong shadows and textures can trigger it too). By stripping away complex colors and textures and leaving just the essential lines, edge detection dramatically simplifies the object recognition task for the AI. It cuts down on the amount of data the model has to sift through, forcing it to focus on what matters most: the object’s core structure.

By converting a visually rich image into a simple line drawing, edge detection helps a model learn the fundamental shape of an object. This makes the AI more resilient to variations in color, lighting, and texture.
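
One classic way to find those sudden brightness changes is the Sobel operator, sketched below with NumPy. The article doesn't name a specific algorithm, so this is one illustrative choice among several (Canny is another popular one), and the function names and the threshold value are arbitrary assumptions.

```python
import numpy as np

# Sobel kernels: SOBEL_X responds to left-right changes, SOBEL_Y to up-down changes.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def filter3x3(gray, kernel):
    """Slide a 3x3 kernel over a 2-D image (border pixels are repeated)."""
    padded = np.pad(gray.astype(np.float64), 1, mode="edge")
    h, w = gray.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def sobel_edges(gray, threshold=100.0):
    """Return a boolean mask that is True where brightness changes sharply."""
    gx = filter3x3(gray, SOBEL_X)
    gy = filter3x3(gray, SOBEL_Y)
    return np.hypot(gx, gy) > threshold

# Toy image: dark left half, bright right half.
img = np.zeros((8, 8), dtype=np.uint8)
img[:, 4:] = 255
print(sobel_edges(img).astype(int)[0])  # [0 0 0 1 1 0 0 0]
```

Only the two columns that straddle the dark-to-bright boundary light up; the flat regions on either side disappear, which is the "coloring book outline" effect described above.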

Expanding Datasets with Data Augmentation

One of the biggest bottlenecks in machine learning is simply getting enough training data. Data augmentation offers a brilliant workaround by artificially growing your dataset. It creates slightly modified copies of your existing images, essentially showing your AI an object from dozens of different angles and under all sorts of conditions.

This process involves applying a range of random yet realistic transformations to each image in your training set. Common tweaks include:

- Rotating or flipping the image

- Zooming in and out

- Shifting the image up, down, left, or right

- Altering the brightness and contrast

Training a model on this expanded, more varied dataset makes it far more robust. It learns to ignore irrelevant changes in orientation or lighting and instead focuses on the defining features of an object. For specialized tasks, using an AI tool for web vision often requires this level of preprocessing, so the AI can effectively 'see' and interpret visual information on a webpage.
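
Here is a small sketch of what an augmentation step might look like, covering three of the tweaks listed above: flipping, shifting, and brightness/contrast changes. The function names, the parameter ranges, and the choice to fill exposed borders with black are all assumptions for illustration; zooming and arbitrary-angle rotation are left out to keep it short.

```python
import numpy as np

def shift(image, dy, dx):
    """Translate by (dy, dx) pixels, filling the exposed border with zeros."""
    h, w = image.shape[:2]
    out = np.zeros_like(image)
    src = image[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    top, left = max(0, dy), max(0, dx)
    out[top:top + src.shape[0], left:left + src.shape[1]] = src
    return out

def augment(image, rng, max_shift=4):
    """Return one randomly modified copy of a uint8 image."""
    out = image
    if rng.random() < 0.5:                      # flip half of the time
        out = out[:, ::-1]
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    out = shift(out, int(dy), int(dx))
    contrast = rng.uniform(0.8, 1.2)            # illustrative ranges
    brightness = rng.uniform(-20, 20)
    out = np.clip(contrast * out.astype(np.float64) + brightness, 0, 255)
    return out.astype(np.uint8)

rng = np.random.default_rng(42)
original = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)
variants = [augment(original, rng) for _ in range(8)]
print(len(variants), variants[0].shape)
```

In practice these transforms are usually applied on the fly during training, so the model sees a slightly different version of each image every epoch instead of a fixed, enlarged dataset on disk.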

In the end, noise reduction, edge detection, and data augmentation form a powerful trio. They work together to produce a dataset that is clean, feature-rich, and diverse, giving your machine learning model the best possible foundation to learn faster, generalize better, and hit a higher mark of accuracy.

How Preprocessing Is Fueling Medical Breakthroughs

The real-world impact of image preprocessing truly comes into focus when we look at medical imaging. In a field where a tiny, overlooked detail on an MRI or a subtle shadow on an X-ray can change a person's life, preprocessing isn't just a technical step—it's a critical, often life-saving, tool. It takes raw, ambiguous scans and sharpens them into a clear source of diagnostic truth.

Medical images are notoriously tricky. They're often plagued by unique problems that can hide crucial information. An MRI scan, for example, might have inconsistent brightness from imperfections in the magnetic field. Or, a series of CT scans taken over several months won't line up perfectly. These aren't just minor glitches; they're major hurdles that can throw off both human radiologists and AI models.

Specialized Techniques for Clinical Precision

To get around these issues, the medical field uses highly specialized preprocessing techniques. It helps to think of these not just as cleanup tools, but as a digital magnifying glass that brings the most important details for diagnosis and treatment into sharp relief.

Let’s look at a couple of common examples from neuroimaging: bias field correction and skull stripping.

- Bias Field Correction: This technique tackles the slow, gradual changes in brightness you often find in MRI scans. It’s like a smart photo editor that balances the lighting across the image, making sure the contrast between different tissues is consistent and trustworthy.

- Skull Stripping: When you’re analyzing a brain scan, the skull, scalp, and other tissues are just noise. Skull stripping acts as a precise digital scalpel, automatically removing these surrounding structures to isolate the brain for a much more focused analysis.

By using these methods, radiologists and algorithms get a clearer view, making it far easier to spot tumors, measure stroke damage, or plan a complex surgery with confidence. The value here is immense. The global market for medical image analysis software, which leans heavily on these very techniques, was projected to reach USD 4.26 billion by 2025. You can dig deeper into these medical imaging market trends from Market.us.
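
To give a feel for bias field correction, here is a deliberately simple sketch: it models the slow brightness drift as a smooth, low-order polynomial surface fitted to the log of the image, then divides it out. This is an assumption-heavy toy, not what clinical tools do; established methods such as N4 are considerably more sophisticated, and on a real scan a plain polynomial fit would also absorb some genuine anatomical contrast. The function name is hypothetical.

```python
import numpy as np

def correct_bias_field(image, degree=2):
    """Remove a smooth multiplicative brightness drift from a 2-D image."""
    h, w = image.shape
    y, x = np.mgrid[-1:1:h * 1j, -1:1:w * 1j]
    # Polynomial terms x^i * y^j with i + j <= degree.
    terms = [x ** i * y ** j
             for i in range(degree + 1)
             for j in range(degree + 1 - i)]
    design = np.stack([t.ravel() for t in terms], axis=1)
    # A multiplicative field becomes additive after taking the log.
    log_img = np.log(image.astype(np.float64) + 1e-6)
    coeffs, *_ = np.linalg.lstsq(design, log_img.ravel(), rcond=None)
    field = (design @ coeffs).reshape(h, w)
    field -= field.mean()          # keep the overall brightness unchanged
    return np.exp(log_img - field)

# Synthetic "scan": uniform tissue (intensity 100) under a smooth brightness drift.
yy, xx = np.mgrid[-1:1:64j, -1:1:64j]
biased = 100.0 * np.exp(0.3 * xx + 0.2 * yy)
corrected = correct_bias_field(biased)
print(round(biased.std(), 2), round(corrected.std(), 4))
```

On this synthetic example the spread of intensities collapses to nearly zero after correction, because the only variation in the image was the drift itself.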

Aligning Images for Longitudinal Studies

Another crucial preprocessing job is image registration. This is all about perfectly aligning multiple images, which is absolutely essential for things like tracking a disease's progression or seeing how well a treatment is working over time.

Think about a patient getting cancer therapy. Doctors need to compare a new MRI with one taken six months ago to see if a tumor has shrunk. The patient will never be in the exact same position in the scanner twice. Image registration digitally stacks and aligns these scans, pixel for pixel, so any change in the tumor's size or shape is immediately obvious.

Without precise registration, comparing scans would be like trying to spot the differences between two photos that are misaligned and taken from slightly different angles. It makes an accurate assessment nearly impossible.
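
The simplest possible version of registration is finding the shift that best lines up two images. The sketch below tries every whole-pixel offset in a small window and keeps the one with the lowest mean squared difference over the overlapping area. Real medical registration also has to handle rotation, scaling, tissue deformation, and sub-pixel offsets, so this brute-force search (with made-up function names) only illustrates the core idea.

```python
import numpy as np

def overlap(fixed, moving, dy, dx):
    """Regions of both images that coincide when `moving` is shifted by (dy, dx)."""
    h, w = fixed.shape
    f = fixed[max(0, dy):min(h, h + dy), max(0, dx):min(w, w + dx)]
    m = moving[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    return f, m

def register_translation(fixed, moving, max_shift=10):
    """Find the integer (dy, dx) shift of `moving` that best matches `fixed`."""
    fixed = fixed.astype(np.float64)
    moving = moving.astype(np.float64)
    best, best_err = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            f, m = overlap(fixed, moving, dy, dx)
            err = np.mean((f - m) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best

# Simulate a follow-up scan in which the patient lay 3 px higher and 5 px to the right.
rng = np.random.default_rng(7)
baseline = rng.random((64, 64))
follow_up = np.roll(baseline, shift=(-3, 5), axis=(0, 1))
print(register_translation(baseline, follow_up))  # (3, -5)
```

The recovered offset says the follow-up image needs to move 3 pixels down and 5 pixels left to sit on top of the baseline, after which a pixel-by-pixel comparison becomes meaningful.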

This alignment is also key for fusing data from different types of scans. For instance, a doctor might want to combine the detailed anatomical view from an MRI with the functional data from a PET scan. Preprocessing makes this possible, giving clinicians a complete, multi-layered picture of what's happening inside the patient.

Ultimately, these advanced preprocessing steps are a cornerstone of modern medicine. They directly boost diagnostic accuracy, lead to better patient outcomes, and continue to push the boundaries of what's possible in healthcare.

Real-World Applications Beyond Healthcare

While medical imaging is a prime example of its power, image preprocessing is quietly working its magic in dozens of other industries. It’s the foundational step that helps machines make sense of the visual world, turning messy, real-world data into something clean and reliable enough for AI to act on.

Think of it as the meticulous prep work before the main event. Without it, many of the smart technologies we see today simply wouldn't work.

This is especially true for autonomous vehicles. The sensors on a self-driving car are constantly flooded with chaotic information—blinding sunlight, pouring rain, thick fog, and unpredictable shadows.

For a car to drive safely, it can't afford to mistake a blurry, rain-soaked shape for an open road. Preprocessing algorithms jump in instantly to clean up the noise. They use methods like histogram equalization to handle harsh lighting and apply filters to negate the blur from raindrops on a lens. This gives the car's AI a clear, stable picture of pedestrians, signs, and other cars, no matter the conditions.

Precision in Modern Manufacturing

Over in the manufacturing sector, preprocessing is central to automated quality control. On a fast-moving assembly line, microscopic defects in things like circuit boards or textiles are often impossible for a person to spot. But even the tiniest flaw can cause a major product failure down the line.

High-resolution cameras scan every single item. Before an AI can decide if a product passes or fails, preprocessing techniques sharpen the image, boost the contrast to make defects stand out, and fix any optical distortion from the camera. This lets the system catch tiny cracks or misalignments with a level of precision and speed that humans just can't match.

Image preprocessing serves as the bridge between raw, imperfect visual data and actionable, intelligent insights. It's the critical translation layer that allows automated systems to understand and react to the physical world reliably.

A New Perspective from Above

The impact of this technology reaches far beyond ground level. Satellite imagery, for example, is a goldmine of data for agriculture and environmental science. The problem is, raw satellite photos are often clouded by atmospheric haze and warped by the curvature of the Earth.

Preprocessing algorithms correct these distortions, delivering a crisp and accurate view of the planet.

- For farmers: Cleaned-up satellite data lets them monitor crop health across huge farms, pinpointing areas that need water or are showing signs of disease.

- For environmental scientists: It allows for precise tracking of deforestation, the melting of ice caps, and the real-time assessment of damage from natural disasters.

These widespread industrial uses are fueling major economic growth. The global market for image processing systems, valued at USD 19.07 billion in 2024, is projected to climb to USD 49.48 billion by 2032. You can dig into the numbers in this image processing systems market growth report. This boom is directly tied to how deeply these techniques are being woven into the automotive, aerospace, and transportation industries.

From making our roads safer to helping us farm more sustainably, image preprocessing is a universal engine for innovation, silently making our daily technologies smarter and more reliable.

Common Questions About Image Preprocessing

As you start working with computer vision, a few key questions about image preprocessing always pop up. It’s a foundational step that can feel a bit abstract at first, but getting your head around its nuances is what separates a good model from a great one. Let's break down some of the most common queries with clear, practical answers.

What Is the Difference Between Image Preprocessing and Image Processing?

This is easily the most frequent point of confusion, but a simple analogy makes it crystal clear.

Think of it like cooking a meal. Image preprocessing is all the prep work you do before the real cooking begins. You’re washing vegetables, trimming fat off the meat, and measuring your spices. The entire point of this prep is to make sure your ingredients are clean, standardized, and ready to go—it’s essential for a fantastic final dish.

Image processing, on the other hand, is the actual cooking. It’s the main event where you combine those prepared ingredients to create the final meal. In our world, that "meal" is the analysis itself.

To put it in more technical terms:

- Preprocessing is the set of steps you take to improve an image for a specific task. This could mean removing digital noise, correcting weird lighting, or resizing a whole batch of images so they’re all the same dimensions.

- Processing is the main job you actually want to do. That could be anything from detecting faces in a photo to identifying faulty products on an assembly line or diagnosing a medical condition from a scan.

Preprocessing isn't the end goal; it's the critical first stage that makes accurate, reliable image processing possible. You have to prep the data first before you can effectively analyze it later.

What Are Some Common Tools and Libraries for Image Preprocessing?

For anyone looking to get their hands dirty with image preprocessing, you're in luck. There’s a whole ecosystem of powerful, accessible tools out there, especially in Python. You don't need to reinvent the wheel and build these algorithms from scratch. Instead, you can stand on the shoulders of giants by using robust, open-source libraries that are industry standards.

Here are a few of the most popular choices:

- OpenCV: Short for Open Source Computer Vision Library, this is an absolute powerhouse. It's packed with more than 2,500 optimized algorithms for a massive range of tasks, from basic image tweaks to real-time video analysis.

- Pillow (PIL Fork): If your needs are more fundamental—like resizing, cropping, or rotating images—Pillow is a fantastic, lightweight library. It’s intuitive and supports just about any image format you can throw at it.

- Scikit-Image: This library leans more toward scientific and research applications. It offers a comprehensive collection of algorithms for filtering, feature detection, and segmentation, making it a favorite in R&D circles.

On top of those, the big machine learning frameworks have their own built-in tools to make your life easier. Both TensorFlow (with its tf.image module) and PyTorch (with torchvision.transforms) provide a suite of preprocessing functions designed to plug directly into their model training pipelines. This makes building an end-to-end workflow so much more straightforward.

What Are the Biggest Challenges in Image Preprocessing?

While the benefits are huge, image preprocessing is definitely not a walk in the park. Getting a pipeline right requires careful thought and a real understanding of your data and what you're trying to achieve. Just slapping on a few random filters can easily do more harm than good.

One of the biggest hurdles is choosing the right technique without accidentally losing important information. For example, a really aggressive noise reduction filter might clean up a grainy image beautifully, but it could also blur or completely erase the subtle features your model needs to see. It’s a constant balancing act between cleaning up junk and preserving critical details.

Another major challenge is the computational cost. Chewing through thousands of high-resolution images is an intensive job. It can be incredibly time-consuming and hog a ton of computing power, especially for advanced techniques. This is why specialized hardware like GPUs often becomes necessary to get the work done in a reasonable amount of time.

Finally, there’s no such thing as a "one-size-fits-all" solution. A preprocessing pipeline that works perfectly for crisp, well-lit photos taken during the day will almost certainly fail on blurry, dark images shot at night. Every project and dataset comes with its own quirks, so pipelines have to be carefully customized, tested, and fine-tuned to handle the specific problems in your data.

At PYCAD, we specialize in navigating these very challenges to build robust AI solutions for medical imaging. From expert data annotation and anonymization to deploying highly accurate models, we provide the end-to-end expertise needed to turn complex medical data into actionable insights.

Discover how we can advance your medical imaging projects at https://pycad.co.