How AI Helps Doctors See Better

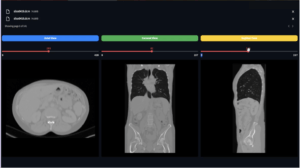

Many of our blog posts and YouTube videos delve into the technicalities of processing medical images, including how to load data, anonymize it, enhance visualization, and even advance to tasks like classification or segmentation.

However, we’ve noticed that these technical details don’t always resonate with everyone, especially readers without a technical background. They often wonder what these projects are for in practice and how they translate into real-world benefits. That’s what this post aims to address.

We’ll explore the significant impact of our technical work on the daily lives of doctors, radiologists, and anyone interested in developing medical imaging products. Join us as we bridge the gap between technical intricacies and their real-world applications, demonstrating how they facilitate better healthcare outcomes.

Why Do We Anonymize Medical Images?

Anonymization removes personally identifying information, such as names, birth dates, and patient IDs, from medical images and their metadata. This protects patient privacy while keeping the data usable for research and development. The practice helps meet legal requirements such as HIPAA, builds patient trust, and gives AI and machine learning work in diagnostics and treatment a secure foundation. In balancing individual privacy against the needs of medical innovation, anonymization reflects the medical community’s ethical commitment to both individual rights and the collective good.
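For readers who want to see the idea in code, here is a minimal sketch. It is an illustration only, not a compliant de-identification tool: a plain Python dictionary stands in for an image header, and the field list and the `anonymize_header` helper are assumptions made up for this example. Real medical image files carry many more identifying fields, and names are sometimes burned into the pixels themselves.

```python
import hashlib

# Hypothetical subset of identifying fields; real de-identification
# profiles list many more than these.
IDENTIFYING_FIELDS = {
    "PatientName",
    "PatientBirthDate",
    "PatientAddress",
    "ReferringPhysicianName",
}


def anonymize_header(header, salt):
    """Return a cleaned copy of `header`; the input is left unchanged.

    Identifying fields are blanked, and the patient ID is replaced by a
    salted hash so scans from the same patient can still be grouped.
    """
    cleaned = {}
    for key, value in header.items():
        if key in IDENTIFYING_FIELDS:
            cleaned[key] = ""
        elif key == "PatientID":
            digest = hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()
            cleaned[key] = "ANON-" + digest[:12]
        else:
            cleaned[key] = value  # clinical fields such as modality are kept
    return cleaned


# Made-up example header, for illustration only.
header = {
    "PatientName": "Jane Doe",
    "PatientBirthDate": "19700101",
    "PatientID": "12345",
    "Modality": "CT",
}
anonymized = anonymize_header(header, salt="project-secret")
```

The salted hash is one possible design choice: the same patient always maps to the same pseudonym within a project, which keeps follow-up scans linked without exposing the original ID.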

Why Is Segmenting Vertebrae from CT Scans Important?

Segmenting vertebrae from CT scans is crucial for precise diagnosis and treatment planning in spinal health care. For instance, consider a patient suffering from a subtle vertebral fracture, a condition that can be challenging to diagnose but has significant implications for treatment and recovery. By accurately segmenting the vertebrae, AI-enhanced tools can identify and analyze these fractures in detail, enabling early intervention. This approach not only aids in tailoring specific treatment plans — such as determining the need for surgical intervention or guiding targeted therapy — but also plays a pivotal role in monitoring the healing process, ensuring treatments are effective and adjusted as necessary. Through this precise segmentation, patients benefit from personalized care, potentially leading to quicker recovery and reduced risk of complications.

Why Do We Detect Brain Tumors?

Detecting brain tumors on imaging, and using a different color for each tissue class, supports accurate diagnosis, treatment planning, and monitoring. Color-coded segmentation separates the tumor’s sub-regions, its boundaries, and the surrounding tissue, which is essential for understanding the tumor’s nature and precise location. Take a patient diagnosed with glioblastoma, a highly aggressive type of brain tumor. The colors can delineate the tumor core, edematous tissue, and any necrotic areas, giving clinicians the information they need to tailor the treatment plan. Neurosurgeons use this view to plan surgical removal, and oncologists use it to design targeted radiation therapy. Comparing segmentations across scans also shows whether the tumor is growing or shrinking, which indicates how the patient is responding to treatments such as chemotherapy and allows timely adjustments. In short, multi-color segmentation makes interventions more precise and can improve patient outcomes by enabling more targeted treatment.
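Under the hood, a color-coded segmentation is just a grid of class labels with a color assigned to each label. The sketch below illustrates this with a tiny made-up 4×4 label map. The label numbers, the colors, and the helper names `colorize` and `count_pixels` are assumptions chosen for this example, not a standard scheme.

```python
from collections import Counter

# Hypothetical label scheme and colors, chosen only for illustration.
CLASS_NAMES = {0: "background", 1: "tumor core", 2: "edema", 3: "necrosis"}
CLASS_COLORS = {
    0: (0, 0, 0),      # black
    1: (255, 0, 0),    # red
    2: (0, 255, 0),    # green
    3: (0, 0, 255),    # blue
}


def colorize(label_map):
    """Turn a 2D grid of class labels into a 2D grid of RGB tuples."""
    return [[CLASS_COLORS[label] for label in row] for row in label_map]


def count_pixels(label_map):
    """Count how many pixels belong to each class, keyed by class name."""
    counts = Counter(label for row in label_map for label in row)
    return {CLASS_NAMES[label]: counts.get(label, 0) for label in CLASS_NAMES}


# Made-up segmentation output for one tiny image slice.
label_map = [
    [0, 2, 2, 0],
    [2, 1, 1, 2],
    [2, 1, 3, 2],
    [0, 2, 2, 0],
]

color_image = colorize(label_map)
pixel_counts = count_pixels(label_map)
```

Running the same count on scans taken at different dates gives a simple way to see whether each region is growing or shrinking over time.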

The examples above are only the tip of the iceberg: they are what we might call the traditional use cases of AI in medical imaging. Let’s look at a few more.

Early Detection of Alzheimer’s Disease

AI models can analyze PET scans or MRIs to detect early signs of Alzheimer’s disease, in some cases before symptoms appear. By identifying subtle changes in brain structure or function, such as amyloid plaque accumulation, these models can help estimate a patient’s risk of developing Alzheimer’s and support early intervention strategies.

Automated Screening for Diabetic Retinopathy

Automated analysis of retinal images can help in early detection of diabetic retinopathy, a leading cause of blindness. AI algorithms can screen images for signs of retinal vessel damage and provide a risk assessment, facilitating early treatment to prevent vision loss.

Detection and Classification of Skin Cancer

AI-powered analysis of skin lesion images can aid in the early detection and classification of skin cancer. By differentiating between benign and malignant lesions, and among various types of skin cancer, these tools can prioritize high-risk cases for further examination and biopsy.
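The prioritization step can be pictured as a simple sort. The sketch below assumes a model has already assigned each lesion image a malignancy score between 0 and 1. The scores, the 0.5 threshold, and the `triage` helper are made up for illustration and are not clinical guidance.

```python
def triage(cases, threshold=0.5):
    """Rank cases from highest to lowest score and flag those at or above the threshold."""
    ranked = sorted(cases, key=lambda case: case["malignancy_score"], reverse=True)
    return [
        dict(case, needs_review=case["malignancy_score"] >= threshold)
        for case in ranked
    ]


# Made-up model outputs, for illustration only.
cases = [
    {"case_id": "A", "malignancy_score": 0.12},
    {"case_id": "B", "malignancy_score": 0.91},
    {"case_id": "C", "malignancy_score": 0.55},
]
ranked = triage(cases)
```

The highest-scoring cases land at the top of the list, so a dermatologist sees them first.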