Artificial intelligence is quickly becoming a fixture in modern medicine, helping with everything from diagnostics to treatment planning. But there's a huge catch. When an AI model offers a life-or-death recommendation but can't show its work, it's impossible to fully trust. This is the classic ‘black box’ problem, and explainable AI in healthcare is the answer.

Why AI in Healthcare Needs to Be Explainable

Think of it like this: a brilliant new doctor joins your team and makes consistently accurate diagnoses, but when you ask for their reasoning, they just shrug. Would you bet a patient's life on their judgment? Of course not. That’s the exact situation we face with traditional AI in a hospital. Explainable AI (XAI) is all about lifting the hood and making these models transparent, turning them from mysterious black boxes into trustworthy clinical partners.

The need for this clarity is more pressing than ever. A 2025 industry survey revealed that a staggering 63% of healthcare organizations are already using AI, which is well ahead of the 50% average across other industries. With 55% of clinicians using generative AI to draft notes and 53% using AI agents, the technology is already woven into the fabric of daily medical practice.

Building the Foundation of Trust

Medicine is built on a foundation of trust. Patients trust their doctors, and doctors need to trust their colleagues and their tools. When an algorithm flags a scan as high-risk or suggests a specific treatment, the attending physician needs to know why.

Without that insight, clinicians are stuck. Do they blindly accept the AI’s output, or do they ignore a tool that could potentially save a life? Explainable AI bridges that gap by answering the critical questions:

- What specific features in the patient’s data led to this prediction?

- Why did the model favor this course of action over another?

- Could a hidden bias in the training data be skewing the result?

By making the machine's "thinking" visible, explainable AI empowers clinicians to use AI with confidence, ensuring that technology enhances their expertise rather than undermining their autonomy.

Managing Risks and Ensuring Accountability

Let's be clear: integrating AI into a field like healthcare is not without risk. This is where implementing explainable AI becomes part of building robust risk management strategies.

When something goes wrong, accountability is everything. If an AI model with hidden logic makes a mistake, pinpointing the cause is next to impossible. This creates a legal and ethical minefield.

Explainability provides a clear audit trail. It gives developers, regulators, and hospital administrators a window into the model's decision-making process, allowing them to verify that it aligns with clinical guidelines and regulatory standards. This isn't just a nice-to-have feature; it’s a non-negotiable requirement for deploying AI safely and responsibly in patient care.

Understanding Explainable AI Beyond the Buzzwords

Let's cut through the jargon and get to what explainable AI really means.

Think about it this way: you take your car to two different mechanics. The first one fixes it and just hands you the keys, saying, "It's all set." The second mechanic walks you over to the workbench, shows you the worn-out part, explains exactly why it failed, and details the repair. Who are you going to trust next time something goes wrong?

You'd pick the second mechanic, right? That's the essence of Explainable AI (XAI). It's a collection of tools and methods designed to pop the hood on the "black box" of artificial intelligence, showing us the why behind a decision in plain, human-understandable terms. In a field like healthcare, this isn't just a nice-to-have; it's a must-have.

XAI transforms AI from a mysterious system that spits out answers into a true partner for clinicians—a tool that complements their expertise, rather than just dictating to it.

Transparency vs. Interpretability: What's the Difference?

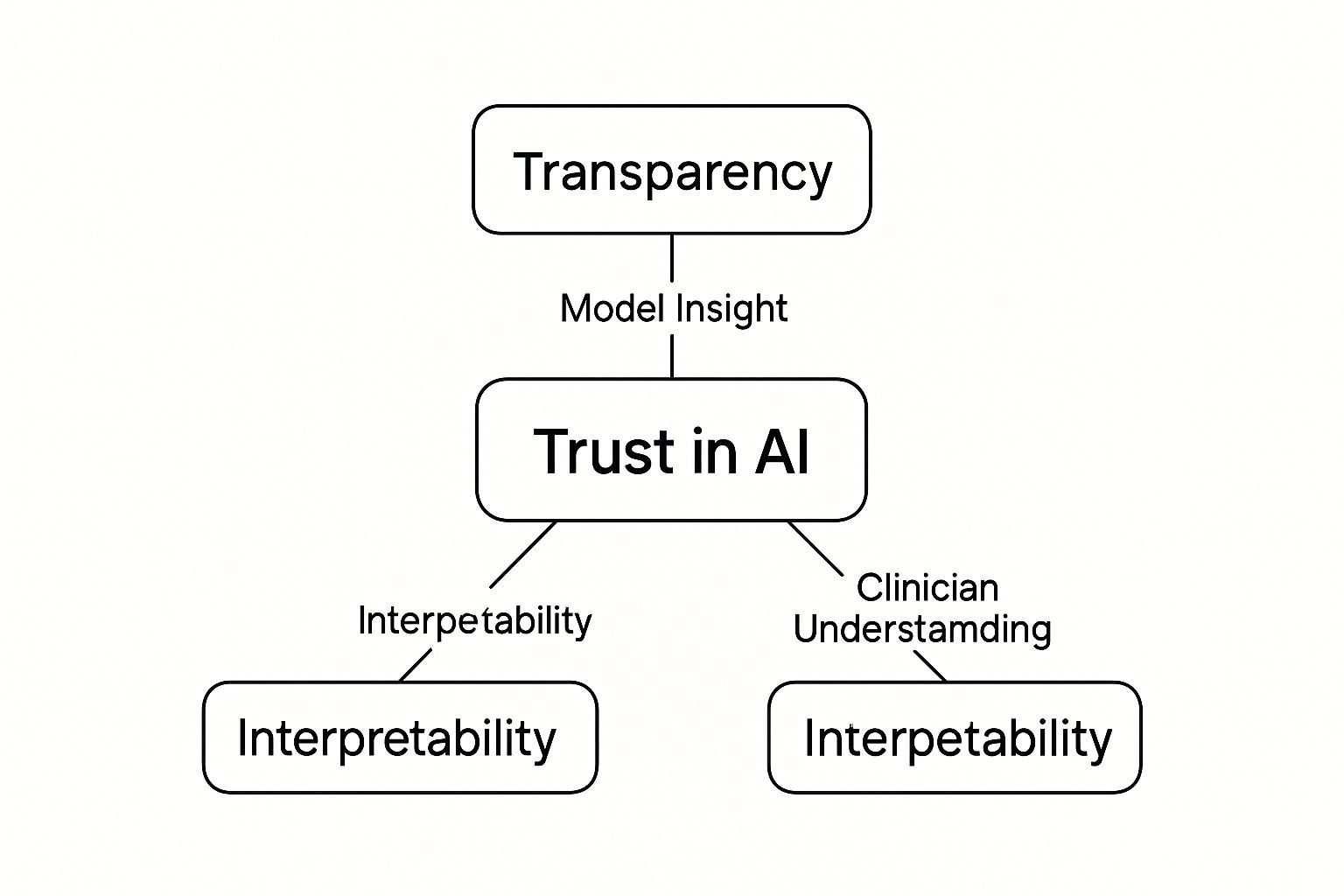

To really get a handle on XAI, it helps to understand two key concepts that are often mixed up: transparency and interpretability. They sound similar, but they tackle different parts of the problem.

Transparency is all about seeing how the model works on a fundamental level. It’s like having the blueprints for an engine. You can trace every wire and understand the mechanics from the ground up. In the AI world, this means having access to the algorithm's code, logic, and how its internal parts work together.

Interpretability, on the other hand, focuses on why the model made a specific call for a specific case. If transparency is the blueprint, interpretability is the mechanic pointing to the exact corroded wire that caused your car to stall. For a doctor, this means seeing which piece of patient data—a specific lab result, a shadow on an X-ray—made the AI flag a potential issue.

The concept map above shows how these ideas work together to build trust.

As you can see, giving developers insight through transparency and giving clinicians understanding through interpretability are both essential for creating real confidence in AI systems.

From Theory to the Clinic

The whole movement toward explainable AI in healthcare is a direct response to the ethical and practical problems that opaque machine learning models create. A model can be incredibly accurate, but if doctors can't understand its reasoning, they simply won't use it.

This is why new developments are so critical. Take RiskPath, a tool developed by researchers at the University of Utah. It has been reported to be 85–99% accurate in spotting patients at risk for chronic diseases years before symptoms appear, a massive improvement over the 50–75% accuracy of older methods. You can read the full research about these predictive AI toolkits and see how they are paving the way.

Explainable AI models provide clear, interpretable insights into how risk factors interact and change over time, which is essential for clinicians and regulators who must understand and justify AI-driven decisions.

Despite progress, a huge number of AI tools in healthcare still operate as black boxes. This raises serious questions about legal liability, hidden biases in the algorithm, and even damage to the patient-doctor relationship if a clinician can't explain the logic behind an AI's suggestion. It's this gap that has pushed regulators and top healthcare organizations to make XAI a priority, ensuring that AI is a tool that enhances patient care, not one that complicates it.

How Explainable AI Techniques Work

To really get why explainable AI in healthcare is so important, we need to peek under the hood and see how these techniques actually work. But don't worry, this isn't about getting lost in complex computer science. It’s more like understanding the different tools a detective might use to solve a case.

Some of these methods are like forensic investigators—they show up after the fact to piece together the evidence that led to a specific conclusion. Others are designed to be transparent from the very beginning, like an open-book test where you can see all the work.

Post-Hoc Methods: The AI Detectives

The most common way to achieve explainability is with post-hoc (or "after the fact") techniques. These are tools you can apply to an existing "black box" model after it's been trained and has already made a prediction. They don't mess with the model itself; they just help us understand its behavior.

Two of the most popular post-hoc methods you'll hear about are LIME and SHAP.

- LIME (Local Interpretable Model-agnostic Explanations): Think about trying to make sense of a dense legal contract. Instead of reading all 100 pages, you might just ask a lawyer to explain one critical clause in simple language. LIME does something very similar for AI. It zeroes in on a single prediction and builds a much simpler, temporary model to explain the logic just for that one instance. For a doctor, this could mean seeing that a patient's high-risk diagnosis was driven almost entirely by a specific combination of blood pressure and cholesterol, while ignoring thousands of other less important data points.

- SHAP (SHapley Additive exPlanations): This one takes a broader view. It’s like a coach breaking down a game-winning play to show exactly how much credit each player deserves. SHAP assigns a precise value to every feature that contributed to the AI's prediction. It calculates the impact of every piece of patient data (age, genetics, lab results) on the final outcome. This gives a clinician a detailed, mathematically sound breakdown, showing not just what was important, but how much each factor swayed the model's decision.
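To make the "credit per player" idea concrete, here is a minimal sketch that computes exact Shapley values for a three-feature toy model by averaging each feature's marginal contribution over every possible ordering. It is not the `shap` library, and the `risk_model` function, its coefficients, and the baseline "reference patient" are all assumptions invented for illustration. Brute-force enumeration like this only works for a handful of features; practical tools rely on approximations.

```python
from itertools import permutations

def risk_model(patient):
    """Hypothetical black-box risk score; any callable could stand in here."""
    score = 0.02 * patient["age"] + 0.01 * patient["systolic_bp"]
    # Interaction: high cholesterol only adds risk for older patients.
    if patient["age"] > 60 and patient["cholesterol"] > 240:
        score += 0.5
    return score

def shapley_values(model, patient, baseline):
    """Average each feature's marginal contribution over all feature orderings."""
    features = list(patient)
    totals = {name: 0.0 for name in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)          # start from the reference patient
        previous = model(current)
        for name in order:
            current[name] = patient[name]  # switch in this patient's real value
            updated = model(current)
            totals[name] += updated - previous
            previous = updated
    return {name: total / len(orderings) for name, total in totals.items()}

# Illustrative values only, not clinical reference ranges.
patient = {"age": 70, "systolic_bp": 150, "cholesterol": 260}
baseline = {"age": 50, "systolic_bp": 120, "cholesterol": 190}

contributions = shapley_values(risk_model, patient, baseline)
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:12s} {value:+.3f}")
```

The contributions always add up to the gap between this patient's score and the baseline score, which is what makes the breakdown "additive". Note also that the interaction bonus is split between age and cholesterol, since neither triggers it alone.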

The real beauty of these "detective" techniques is their flexibility. You can use them on almost any type of machine learning model without having to rebuild your algorithms from the ground up.
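The local-surrogate idea behind LIME can be sketched in plain Python: perturb one patient's values, ask the black box for predictions, weight nearby samples more heavily, and fit a simple linear model to those predictions. This is an illustration of the principle, not the `lime` package itself, which adds steps such as feature selection. The `black_box` function, the feature scales, and the kernel width are all assumptions made up for the example.

```python
import math
import random

def black_box(features):
    """Hypothetical opaque model: uses blood pressure and cholesterol, ignores heart rate."""
    bp, chol, _heart_rate = features
    return 1.0 / (1.0 + math.exp(-(0.08 * (bp - 140) + 0.03 * (chol - 220))))

def solve(matrix, rhs):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    solution = [0.0] * n
    for row in reversed(range(n)):
        tail = sum(aug[row][k] * solution[k] for k in range(row + 1, n))
        solution[row] = (aug[row][n] - tail) / aug[row][row]
    return solution

def local_surrogate(model, instance, scales, n_samples=500, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear model around a single instance."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, 1.0) for _ in instance]   # standardized units
        perturbed = [x + z * s for x, z, s in zip(instance, offsets, scales)]
        distance_sq = sum(z * z for z in offsets)
        rows.append([1.0] + offsets)                        # intercept + features
        targets.append(model(perturbed))
        weights.append(math.exp(-distance_sq / kernel_width ** 2))  # closer counts more
    size = len(instance) + 1
    # Weighted normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(size)]
            for i in range(size)]
    xtwy = [sum(w * r[i] * y for r, y, w in zip(rows, targets, weights))
            for i in range(size)]
    return solve(xtwx, xtwy)[1:]   # drop the intercept, keep per-feature slopes

names = ["systolic_bp", "cholesterol", "heart_rate"]
patient = [150.0, 240.0, 72.0]     # illustrative values
scales = [10.0, 20.0, 8.0]         # assumed typical spread of each feature
local_weights = local_surrogate(black_box, patient, scales)
for name, weight in zip(names, local_weights):
    print(f"{name:12s} {weight:+.4f}")
```

Because the surrogate only ever calls `model(...)`, the same function works for any black box. The heart-rate weight comes out close to zero, reflecting that the toy model never uses it.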

Inherently Interpretable Models: Transparent by Design

The other approach involves models that are built to be understandable from the start. Instead of needing a detective to figure them out, their logic is clear as day. They might not always have the raw predictive power of a massive "black box" for every single task, but their simplicity is a massive advantage in a clinical setting where trust is everything.

An inherently interpretable model is like a flowchart. You can follow the path from start to finish and see exactly how every decision leads to the final recommendation. This clarity is invaluable when the stakes are high.

Common examples of these transparent models include:

- Decision Trees: This is the classic flowchart model. It works by asking a series of simple yes/no questions to reach a conclusion. For example, a decision tree might first check if a patient is over 50, then check their blood sugar, then their family history. Each step is incredibly easy for a person to follow and double-check.

- Linear Regression: This model finds a simple, straight-line relationship between different factors. A hospital administrator could use it to see precisely how patient wait times go up or down based on staffing levels. It’s a predictable and easily explainable connection.
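The flowchart idea can be shown with a tiny hand-written tree that follows the age, blood sugar, and family history example above and records every question it asks along the way. The thresholds and risk labels are hypothetical placeholders chosen for the sketch, not clinical guidance, and a real tree would be learned from data rather than typed in.

```python
def assess_risk(patient):
    """Walk a small decision tree and return (label, list of questions answered)."""
    path = []
    if patient["age"] > 50:
        path.append("age > 50: yes")
        if patient["fasting_glucose_mg_dl"] >= 126:   # hypothetical cut-off
            path.append("fasting glucose >= 126 mg/dL: yes")
            return "high", path
        path.append("fasting glucose >= 126 mg/dL: no")
        if patient["family_history"]:
            path.append("family history of diabetes: yes")
            return "elevated", path
        path.append("family history of diabetes: no")
        return "low", path
    path.append("age > 50: no")
    return "low", path

label, path = assess_risk(
    {"age": 63, "fasting_glucose_mg_dl": 110, "family_history": True}
)
print(f"risk: {label}")
for step in path:
    print(f"  {step}")
```

The returned path is the explanation: a clinician can read it top to bottom and check each branch against the chart.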

Comparing Explainable AI Techniques in Healthcare

Choosing the right technique depends entirely on the situation. Do you need a deep forensic analysis of a complex existing model, or is a straightforward, transparent model a better fit from the start? This table breaks down some of the most common methods.

| Technique | How It Works (Analogy) | Best For… | Key Benefit |
|---|---|---|---|
| LIME | A lawyer explaining one specific clause in a complex contract. | Explaining individual predictions from any "black box" model. | Fast, intuitive explanations for single cases. |
| SHAP | A coach assigning credit to each player for a team victory. | Understanding the global and local impact of all features on a model's output. | Provides a mathematically rigorous and complete picture of feature importance. |
| Decision Trees | A simple, visual flowchart with yes/no questions. | Scenarios where a clear, step-by-step decision path is required for trust and compliance. | Extremely easy to interpret and visualize. |
| Linear Models | A basic calculator showing a direct cause-and-effect relationship. | Modeling straightforward relationships, like resource planning or risk scoring. | Simplicity and predictable outcomes. |

Ultimately, the goal of all these methods is the same: to turn an AI's output from a cryptic command into a collaborative conversation.

Because so many of these XAI techniques involve dissecting data, a solid grasp of data analysis best practices is non-negotiable. Whether you’re building a transparent model from scratch or interpreting a complex one, the core principles of good data handling are what make it all work.

These tools give clinicians the evidence they need to trust, question, and ultimately partner with technology. They ensure every AI-supported decision is anchored in clear, verifiable reasoning.

Real-World Examples of XAI in Patient Care


The theory behind explainable AI in healthcare is one thing, but seeing it work in the real world is where its value truly clicks. These aren’t just ideas for the future. XAI is already reshaping how doctors diagnose diseases, predict patient outcomes, and design personalized treatment plans.

When we pull back the curtain on AI's decision-making process, we turn abstract algorithms into practical tools that give clinicians more confidence and lead to better results for patients. Let's look at a few ways this is happening right now.

Giving Radiologists a Second, Smarter Pair of Eyes

Medical imaging is a natural fit for explainable AI. Radiologists spend their days poring over complex scans, searching for subtle clues that could change a patient's life. While an AI can be trained to spot patterns the human eye might overlook, a simple "yes" or "no" from a black box isn't enough to act on.

Think about an AI model scanning a brain MRI for a potential tumor. A standard AI might just spit out a probability score, leaving the radiologist wondering what, exactly, triggered the alert.

An XAI system takes it a step further. Instead of just a number, it overlays a "heat map" onto the MRI, visually pinpointing the specific pixels and anatomical structures that led to its conclusion. This gives the radiologist instant, verifiable evidence to confirm or question the AI's findings.
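One simple way to produce such a heat map is occlusion sensitivity: blank out one region at a time and measure how much the model's score drops. The sketch below assumes a toy `lesion_score` function and a 5x6 grid of made-up intensities in place of a real imaging model and a real MRI. Production systems typically use larger patches or gradient-based methods, so treat this as an illustration of the principle only.

```python
def lesion_score(image):
    """Toy stand-in for an imaging model: mean intensity of the brightest 2x2 window."""
    best = 0.0
    for r in range(len(image) - 1):
        for c in range(len(image[0]) - 1):
            window = image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]
            best = max(best, window / 4.0)
    return best

def occlusion_map(model, image, fill=0.0):
    """Score drop caused by blanking each pixel; bigger drop means more important."""
    base = model(image)
    heat = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [line[:] for line in image]   # copy so the scan is untouched
            occluded[r][c] = fill
            heat_row.append(base - model(occluded))
        heat.append(heat_row)
    return heat

# Synthetic "scan": a bright 2x2 patch on a dim background.
scan = [
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.9, 0.8, 0.1],
    [0.1, 0.1, 0.1, 0.8, 0.9, 0.1],
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
]

heat = occlusion_map(lesion_score, scan)
for row in heat:
    print(" ".join(f"{value:.3f}" for value in row))
```

Only the pixels inside the bright patch produce a non-zero drop, so overlaying `heat` on the scan would highlight exactly the region the toy model relies on.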

This isn't about replacing the expert; it's about handing them a much more powerful magnifying glass. They can see not only what the AI thinks but why it thinks it, leading to faster, more confident, and more defensible decisions. This kind of technology is a major focus for companies like PYCAD, which specializes in weaving AI into medical imaging to boost diagnostic accuracy.

Predicting Disease Outbreaks with Pinpoint Accuracy

Beyond the individual, explainable AI is proving to be a game-changer for public health. Health officials rely on predictive models to forecast disease outbreaks and get ahead of them with resources and prevention campaigns. But a vague warning that a community is at risk doesn’t give them much to work with. They need to know the why behind the what.

Let’s say an AI model predicts a spike in flu cases in a certain city. An explainable model can break that prediction down, revealing the key drivers:

- Low vaccination rates are concentrated in a handful of neighborhoods.

- The area has high population density and heavy reliance on public transport.

- Socioeconomic data points to limited access to clinics and pharmacies.

Armed with these specifics, public health teams can shift from reacting to a crisis to preventing one. They can launch targeted vaccination drives, get health information to the right people, and set up mobile clinics exactly where the data shows they’ll do the most good. The explanation turns a general forecast into an actionable battle plan.

Designing Treatment Plans as Unique as the Patient

The one-size-fits-all approach to medicine is quickly becoming a thing of the past. The real goal now is to create treatments tailored to a patient's specific genetic profile, lifestyle, and medical history. AI is fantastic at sifting through all that data to find the best path forward, but doctors need to understand and trust its recommendations.

Imagine an oncologist using an AI tool to choose a chemotherapy regimen. An XAI system wouldn't just name a drug; it would lay out its reasoning in plain English.

The system could show that its recommendation is based on:

- The tumor's specific genetic markers, which are known to respond well to the suggested drug.

- The patient's age and overall health, indicating they can likely tolerate this particular therapy.

- Real-world data from similar patients in past clinical trials who had positive outcomes.

This level of transparency is essential. It lets the oncologist have a meaningful conversation with their patient, explaining precisely why one treatment was chosen over another. The AI stops being a black box and becomes a collaborative partner, ensuring every decision is clear, evidence-based, and focused on the individual.

Why Building Trust with Transparent AI Is a Game-Changer

Bringing explainable AI into healthcare isn’t just a technical upgrade; it's about fundamentally changing how medicine is practiced for the better. When we move from mysterious algorithms to accountable clinical partners, the entire system gets stronger, safer, and more reliable for everyone.

The industry is already betting big on this shift. In 2023, global spending on AI in healthcare hit $19.3 billion, with the U.S. market making up a solid $6.1 billion of that. But the real story is where we're headed: projections show worldwide spending could rocket to $613.8 billion by 2034. That kind of investment signals a massive change in how care gets delivered, driven by the need to solve real problems like workforce shortages. You can dig deeper into how AI is being integrated into the U.S. healthcare workplace.

But all that spending is pointless if clinicians don't trust the tools. Explainability is the key that unlocks that trust.

Winning Over the People on the Front Lines: Clinical Adoption

Let’s be honest: doctors can't afford to take a leap of faith. When a patient’s life is on the line, acting on a recommendation from a "black box" simply isn't an option. Explainable AI tackles this head-on by turning the algorithm from a mysterious oracle into a dependable co-pilot.

When a model can actually show its work—maybe by highlighting the exact spots on an MRI that led to its conclusion—it gives clinicians the power to check its logic against their own hard-won expertise. This creates a powerful feedback loop. Doctors can validate the AI's output and, in many cases, learn from it by spotting subtle patterns they might have otherwise missed.

Explainability empowers clinicians to critically evaluate AI-driven insights, ensuring that the final medical decision remains in the hands of a human expert who understands the full context of their patient's care.

This kind of collaboration is what drives real adoption. It ensures these tools genuinely support clinical judgment instead of trying to replace it.

Making Healthcare Safer and More Equitable

One of the biggest fears with AI is that it can accidentally learn and even amplify the hidden biases lurking in its training data. An algorithm trained mostly on data from one demographic might fail spectacularly when used on another, creating dangerous inequities in care.

Think of explainable AI as a powerful audit tool. It shines a light into the model's inner workings, letting developers and hospital administrators hunt for hidden biases. For example, if an AI model consistently downplays cardiovascular risk for women, XAI can help pinpoint exactly which data features are causing that skewed result.

This transparency allows organizations to fix the problem at its source, leading to fairer and more reliable systems. The tangible benefits are clear:

- Spotting Hidden Biases: You can see if an algorithm is giving too much weight to factors like race, gender, or zip code.

- Improving Model Fairness: It allows for targeted tweaks to ensure the model works well for all patient groups.

- Cutting Down on Errors: It ensures recommendations are rooted in sound clinical data, not flawed demographic shortcuts.
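A common first step in this kind of audit is to compare error rates across patient groups before digging into feature attributions. The sketch below computes the false negative rate (truly high-risk patients the model missed) per group. The records are synthetic, and the field names `group`, `actual_high_risk`, and `predicted_high_risk` are assumptions made for the example.

```python
from collections import defaultdict

def false_negative_rates(records):
    """Share of truly high-risk patients the model missed, per group."""
    missed = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        if record["actual_high_risk"]:
            positives[record["group"]] += 1
            if not record["predicted_high_risk"]:
                missed[record["group"]] += 1
    return {group: missed[group] / positives[group] for group in positives}

def make(group, actual, predicted, count):
    """Helper to build repeated synthetic records."""
    return [
        {"group": group, "actual_high_risk": actual, "predicted_high_risk": predicted}
        for _ in range(count)
    ]

# Synthetic audit sample, invented for illustration.
records = (
    make("women", True, False, 2) + make("women", True, True, 2)
    + make("men", True, False, 1) + make("men", True, True, 3)
    + make("women", False, False, 3) + make("men", False, True, 1)
)

rates = false_negative_rates(records)
for group, rate in sorted(rates.items()):
    print(f"{group:6s} missed {rate:.0%} of high-risk patients")
```

A gap like the one in this toy data tells you where to look. Feature-level explanations for the missed cases can then help show which inputs are driving the disparity.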

Clearing Regulatory and Ethical Hurdles

As AI becomes standard in medicine, regulators around the world are stepping in with new rules. The one constant in this evolving legal landscape? The demand for transparency and accountability. Healthcare organizations that can't explain how their AI works are walking into a compliance minefield.

Explainable AI provides the framework needed to meet these demands. It creates a clear audit trail that shows precisely how an algorithm arrived at a decision, which helps protect both the hospital and its doctors from liability.

This auditability is non-negotiable. If an AI-assisted diagnosis is ever called into question, you need a documented record of its reasoning. This isn't just about satisfying regulators; it's about upholding ethical standards and making sure technology is used responsibly.
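What a "documented record" might contain is sketched below: one entry per prediction that captures the model version, a fingerprint of the inputs, the output, and the top contributing factors shown to the clinician. The field names, the model identifier, and the attribution values are hypothetical. What a real audit log must contain depends on your regulators and internal policy.

```python
import hashlib
import json
from datetime import datetime, timezone

def build_audit_record(model_version, inputs, prediction, attributions, top_n=3):
    """Bundle one prediction with the explanation that accompanied it."""
    canonical = json.dumps(inputs, sort_keys=True)
    ranked = sorted(attributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # Fingerprint of the inputs, so raw values need not be copied into the log.
        "input_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "prediction": prediction,
        "top_factors": [
            {"feature": name, "contribution": value} for name, value in ranked[:top_n]
        ],
    }

record = build_audit_record(
    model_version="sepsis-risk-0.3.1",   # hypothetical identifier
    inputs={"lactate": 3.1, "heart_rate": 118, "temperature_c": 38.9, "age": 67},
    prediction=0.82,
    attributions={"lactate": 0.31, "heart_rate": 0.18, "temperature_c": 0.07, "age": -0.02},
)
print(json.dumps(record, indent=2))
```

Storing the explanation alongside the prediction means a later review can see what the clinician was shown at the time, not what a newer model version would say today.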

Hurdles on the Road to Transparent AI

While the potential of explainable AI is exciting, getting it into the hands of doctors and nurses isn't as simple as flipping a switch. There are real-world obstacles we need to navigate, forcing us to strike a delicate balance between competing priorities and think carefully about what truly transparent technology should look like.

One of the biggest sticking points is what we call the performance-versus-explainability trade-off. It’s a classic dilemma: the AI models that are often the most accurate—like deep neural networks with millions of moving parts—are also the most opaque. On the other hand, simpler, more transparent models are easier to understand but might not be powerful enough to pick up on the faintest, most subtle patterns in the data. This creates a constant tug-of-war between raw predictive power and practical, real-world clarity.

Turning Data into Meaningful Stories

Another major challenge is that an "explanation" isn't one-size-fits-all. What's perfectly clear to a data scientist can be a wall of statistical jargon to a clinician trying to make a quick decision at a patient's bedside.

The real goal isn't just to spit out an explanation. It's to deliver a genuine insight—one that's relevant, easy to grasp, and actually useful in a clinical setting. An overly technical explanation is just as unhelpful as having no explanation at all.

This is exactly why collaboration is non-negotiable. AI developers have to get out of the lab and work side-by-side with the doctors and nurses who will use these tools every day. Together, they can design interfaces and explanation styles that fit naturally into the flow of patient care. The focus has to shift from just "showing the math" to telling a clear, compelling story about the patient's health.

What’s Next for Explainable AI?

Looking ahead, the future of XAI in healthcare is all about closing these gaps. The push is toward making the partnership between humans and AI feel less like a transaction and more like a conversation.

We're moving away from AI systems that just give a one-way report. The next wave of XAI will be more interactive. Imagine a doctor being able to ask follow-up questions like, "What if this patient's lab value was different?" and getting an immediate, dynamic response. This turns the AI from a black box into a true reasoning partner.
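A "what if" question like this boils down to re-running the model with one value changed and comparing the two answers. Here is a minimal sketch of that idea, assuming a hypothetical `readmission_risk` model whose coefficients are invented for illustration. A real interactive system would wrap something like this in a clinician-facing interface.

```python
import math

def readmission_risk(patient):
    """Hypothetical logistic model; coefficients are illustrative only."""
    z = (-6.0
         + 0.04 * patient["age"]
         + 1.2 * patient["creatinine_mg_dl"]
         + 0.5 * patient["prior_admissions"])
    return 1.0 / (1.0 + math.exp(-z))

def what_if(model, patient, **changes):
    """Compare the prediction before and after changing selected feature values."""
    unknown = set(changes) - set(patient)
    if unknown:
        raise KeyError(f"unknown feature(s): {sorted(unknown)}")
    altered = {**patient, **changes}   # copy, so the original record is untouched
    before, after = model(patient), model(altered)
    return {"before": before, "after": after, "change": after - before}

patient = {"age": 72, "creatinine_mg_dl": 2.1, "prior_admissions": 2}
answer = what_if(readmission_risk, patient, creatinine_mg_dl=1.0)
print(f"risk {answer['before']:.2f} -> {answer['after']:.2f} ({answer['change']:+.2f})")
```

The answer describes how the model responds to the changed input. It does not by itself say that changing the lab value in the real patient would change their outcome.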

Several key developments are paving the way for this future:

- Standardized Evaluation Metrics: The industry is working on creating clear benchmarks to measure how good an AI's explanation actually is. This will bring much-needed consistency and reliability.

- Intuitive User Interfaces: The focus is on designing dashboards and visuals that translate complex AI logic into something a medical professional can understand at a glance, no data science degree required.

- Hybrid AI Models: Researchers are building new types of models that combine the raw power of complex algorithms with the built-in transparency of simpler ones, hopefully giving us the best of both worlds.

Ultimately, we're working toward AI that can have a natural, back-and-forth dialogue with a clinician about its reasoning. That’s when these tools will become truly trusted and indispensable parts of modern medicine.

Common Questions About Explainable AI in Healthcare

When it comes to bringing explainable AI into a real hospital setting, theory quickly gives way to practical questions. Clinicians, hospital administrators, and IT professionals all have valid concerns about how these systems work in the real world. Getting clear answers is the first step toward building trust and making a successful transition.

Let's tackle some of the most common questions that come up when healthcare organizations start exploring transparent AI.

Does Making an AI Model Explainable Hurt Its Accuracy?

This is probably the number one concern we hear, often called the "performance vs. explainability trade-off." The worry is that to make a model understandable, you have to "dumb it down" and lose accuracy. The short answer? Not necessarily. It’s all about the approach.

It's true that some of the most powerful "black box" deep learning models are incredibly complex by nature. But post-hoc XAI techniques like SHAP and LIME don't actually change the model itself, so its predictions, and therefore its accuracy, stay exactly the same. Instead, they act like an investigative layer on top, showing you which inputs drove the powerful model's decision. Think of it as getting a peek inside the engine without having to rebuild it.

The goal isn't to sacrifice performance for clarity. It’s about finding the right balance where a model is both highly effective and completely trustworthy for a specific clinical job.

How Can a Hospital Get Started with XAI?

You don't need to rip out your existing systems and start from scratch. A smart, phased approach is the best way to begin integrating explainable AI.

- Kick off a Pilot Project: Don't try to boil the ocean. Pick one specific, high-value area to start, like analyzing diagnostic scans or predicting patient readmission risks.

- Bring Clinicians to the Table Early: Get the doctors, radiologists, and nurses who will actually use the tool involved from day one. Their feedback is gold for figuring out what an explanation needs to look like to be useful in a busy workflow.

- Choose User-Friendly Tools: Opt for XAI systems that provide clear, visual outputs. Heatmaps overlaid on an MRI or simple charts showing the top three risk factors are far more effective than a wall of code.

- Test, Get Feedback, and Refine: Once it's running, watch how the explanations are used. Do they help clinicians feel more confident? Are they leading to better decisions? Use that real-world feedback to make the system even better.

Is Explainable AI a Legal Requirement in Medicine?

The rules are still being written, but the direction of travel is unmistakable. Regulators are demanding more transparency from AI systems. While you might not find a law that uses the exact term "explainable AI" today, major regulations like the EU's Artificial Intelligence Act are putting high-risk AI systems—especially in medicine—under the microscope.

These new legal frameworks are making it clear: if your AI affects a patient's health, you need to be able to explain how it works. This essentially makes explainability a non-negotiable part of compliance, risk management, and ethical practice.

Getting ahead of the curve and adopting XAI now isn't just good practice; it's a strategic move to prepare for future legal standards and show a deep commitment to patient safety.

At PYCAD, we focus on building transparent AI tools for medical imaging that make sense to clinicians. We combine the power of AI with the clarity you need to make decisions with confidence. Learn more about our approach on the PYCAD website.