Welcome to the new era of radiology. Learning how to interpret X-ray images isn't just about looking at a picture anymore—it's about unlocking the massive amount of data hidden inside. The process has evolved into an incredible journey, transforming a raw digital image into a powerful clinical insight, all with the help of artificial intelligence. Static scans are now dynamic, data-rich assets.

The New Frontier of Medical Imaging

It’s time to move past the old-school film and clunky light boxes. Today, making sense of a medical scan is a sophisticated workflow where raw data becomes actionable intelligence on advanced web platforms. This guide will walk you through the entire lifecycle, from the moment an image is captured to its final AI-powered analysis.

This isn’t just for radiologists, either. This field is now a critical space for data scientists, software engineers, and healthcare innovators who are all working to redefine diagnostics. Every single pixel holds a clue, and we finally have the tools to uncover what they mean.

Key Concepts in the Modern Workflow

To really get a handle on this new world, you need to be familiar with a few core ideas that are the bedrock of any modern medical imaging project. These pieces work in concert to make sure data is consistent, secure, and ready for its close-up with AI.

- The DICOM Standard: Think of this as the universal language for all medical imaging. DICOM (Digital Imaging and Communications in Medicine) is what allows a CT scan taken in one hospital to be read and analyzed by software in a completely different one, keeping all the vital metadata intact.

- Precise Image Annotation: This is where human expertise meets machine learning. Annotation is the careful process of labeling medical images to teach an AI model what to look for, be it a fracture, a tumor, or a subtle anomaly. It’s how we transfer our knowledge to the machine.

- AI-Powered Analysis: This is where the magic happens. We use algorithms, usually deep learning models, to automatically detect, segment, or classify features within an X-ray. It’s about finding a tiny fracture that might be missed or flagging the earliest signs of disease.

Here at PYCAD, we live and breathe the practical side of these concepts. We build custom web DICOM viewers and integrate them into medical imaging web platforms, creating the interactive spaces where this data truly comes alive. These viewers are absolutely essential for clinicians to interact with AI findings directly on the scans themselves. You can see what we've built in our extensive portfolio.

The combination of DICOM standards and AI has created a powerful ecosystem where a simple X-ray can reveal insights far beyond what the human eye alone can see. It's all about making diagnostics faster, more accurate, and ultimately more accessible.

This shift gives professionals the power to build tools that can genuinely change patient outcomes for the better. As AI continues to redefine what’s possible in diagnostics, you can delve deeper into AI advancements and see how they're being applied across industries. The journey of an X-ray is no longer just a snapshot; it's a story of data, insight, and innovation.

Laying the Groundwork: Acquiring and Preparing Your X-Ray Data

Every incredible AI imaging project starts in the same place: with the data. Before a single line of code is written, before any algorithm is trained, we must focus on the images themselves. This first phase of acquiring and preparing high-quality X-ray data isn't just a box to check—it’s the absolute foundation of everything that follows. Get this right, and you’re on the path to building a truly reliable and accurate model.

The move from old-school film X-rays to modern Digital Radiography (DR) systems was a massive leap for medical imaging. For AI, digital formats are non-negotiable. They give us the raw pixel data our algorithms need to see, learn, and make predictions. This transition turned a simple picture into a deep, explorable dataset, unlocking a world of possibilities we could only dream of with film.

The DICOM Revolution: From Film to Digital

It all started on November 8, 1895, when physicist Wilhelm Conrad Röntgen discovered a new kind of ray. His first image, a ghostly picture of his wife's hand, showed the world a way to peer inside the human body without a single incision. That moment was the spark that ignited decades of innovation, leading us straight to the sophisticated digital systems we rely on today.

At the very core of modern medical imaging is the DICOM (Digital Imaging and Communications in Medicine) standard. Think of it as the universal language for all medical images. It's so much more than a simple JPEG or PNG. A DICOM file is a complete package, bundling the image itself with a goldmine of metadata—patient details, scanner settings, acquisition parameters, you name it. For any serious AI endeavor, working with DICOM isn't a suggestion; it’s essential for ensuring data integrity as images are passed between different viewers, platforms, and algorithms.

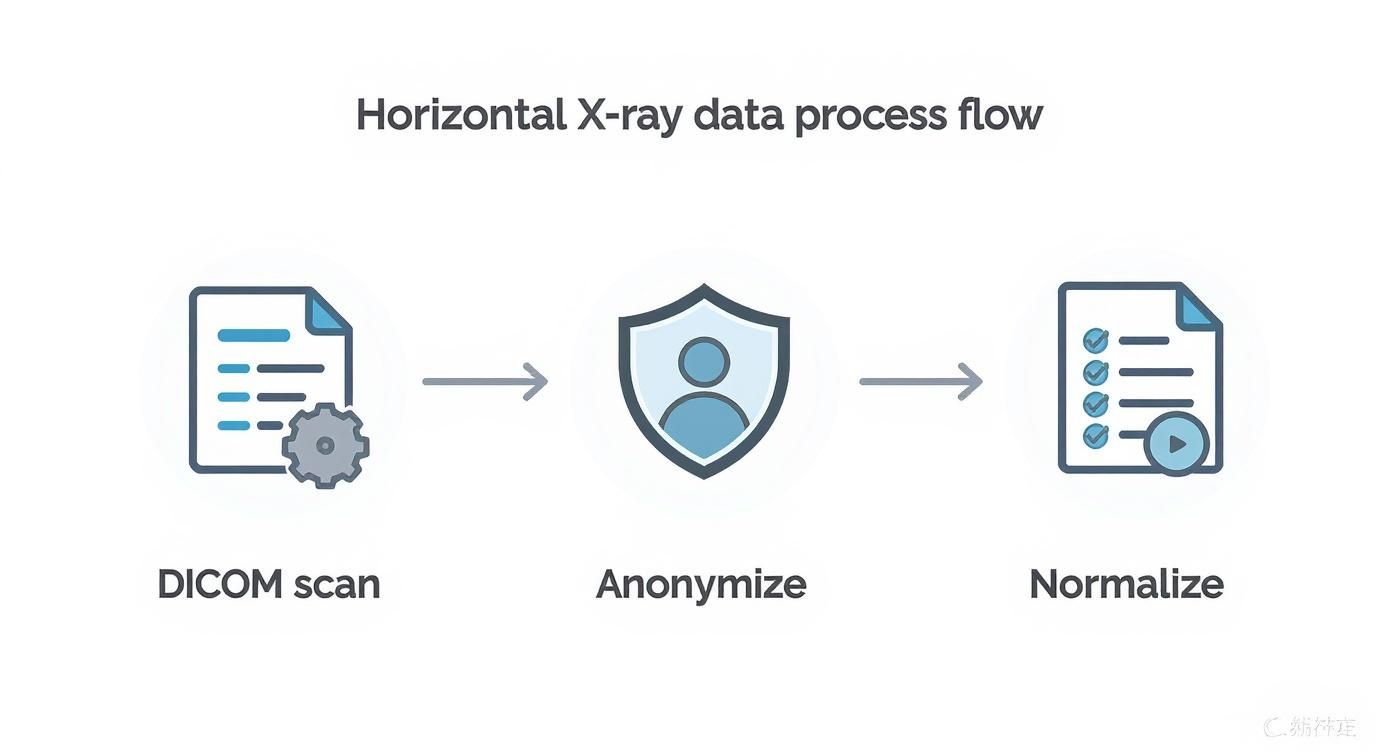

Getting Your Data Ready for Prime Time

Once you have your digital images, the real prep work begins. Raw data pulled from different clinics, using different machines, is often a chaotic mess of inconsistencies. On top of that, protecting patient privacy is your highest priority. These next few steps are all about creating a dataset that is clean, consistent, and ethically sound.

- Anonymization is Non-Negotiable: The very first thing you must do is scrub all personally identifiable information (PII) from the DICOM metadata. This means names, patient IDs, birthdays, everything, including any patient text burned into the image pixels themselves. Skipping this step isn't just sloppy; it can put you in breach of privacy laws like HIPAA.

- Normalize for Consistency: X-rays from different machines will naturally have variations in brightness, contrast, and resolution. Normalization is how we level the playing field, standardizing these values across the entire dataset. This is a critical step that teaches your AI model to spot medical conditions, not just the quirks of a particular scanner.

- Manage It All with PACS: For any project of significant scale, a Picture Archiving and Communication System (PACS) is your best friend. This is the technology medical professionals use to store, retrieve, and share images securely. A PACS acts as a centralized, organized library for your prepared data, making large-scale AI projects manageable.
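To make the anonymization step concrete, here is a minimal sketch that works on a plain dictionary standing in for a DICOM header. The attribute keywords are standard DICOM names, but the short list, the `anonymize` and `pseudonymize_id` helpers, the salt, and the sample values are all assumptions made for illustration. A real project would use a DICOM library and a complete de-identification profile, and would also check for text burned into the pixels, which this sketch does not touch.

```python
import hashlib

# Illustrative subset of identifying DICOM attribute keywords.
# A real project should follow a complete de-identification profile.
IDENTIFYING_KEYWORDS = {
    "PatientName",
    "PatientBirthDate",
    "PatientAddress",
    "ReferringPhysicianName",
    "InstitutionName",
}


def pseudonymize_id(patient_id: str, salt: str) -> str:
    """Map a patient ID to a stable pseudonym using a salted SHA-256 hash."""
    digest = hashlib.sha256((salt + patient_id).encode("utf-8")).hexdigest()
    return "ANON-" + digest[:12]


def anonymize(metadata: dict, salt: str) -> dict:
    """Return a copy of the metadata without the identifying fields."""
    cleaned = {k: v for k, v in metadata.items() if k not in IDENTIFYING_KEYWORDS}
    if "PatientID" in cleaned:
        # Keep a pseudonym so images from one patient can still be grouped.
        cleaned["PatientID"] = pseudonymize_id(str(cleaned["PatientID"]), salt)
    return cleaned


# Hypothetical header values, for illustration only.
header = {
    "PatientName": "DOE^JANE",
    "PatientID": "123456",
    "PatientBirthDate": "19700101",
    "Modality": "DX",
    "Rows": 2048,
    "Columns": 2048,
}
clean_header = anonymize(header, salt="project-secret")
print(sorted(clean_header))
```

Replacing the patient ID with a pseudonym, rather than deleting it, keeps every image from the same patient linked together, which matters later when the dataset is split for training. The salt has to stay secret for the pseudonym to offer any protection.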

Data preparation isn't about deleting information—it's about refining it. When you properly anonymize and normalize your X-rays, you create a dataset that is both ethical and scientifically robust. This is how you pave the way for an AI model that can deliver truly powerful insights.
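As a rough illustration of normalization, the sketch below rescales raw pixel values to the range 0 to 1 and flips images stored as `MONOCHROME1`, the DICOM photometric interpretation in which the lowest value is displayed as white. The `normalize_pixels` function and the sample values are assumptions for this example. Simple min-max scaling is only one of several reasonable choices, and production code would normally use NumPy arrays rather than nested lists.

```python
def normalize_pixels(pixels, photometric_interpretation="MONOCHROME2"):
    """Scale a 2D list of raw pixel values to floats in [0.0, 1.0]."""
    flat = [v for row in pixels for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A constant image carries no contrast; return all zeros.
        return [[0.0 for _ in row] for row in pixels]
    scaled = [[(v - lo) / span for v in row] for row in pixels]
    if photometric_interpretation == "MONOCHROME1":
        # MONOCHROME1 stores low values as white, so invert to match MONOCHROME2.
        scaled = [[1.0 - v for v in row] for row in scaled]
    return scaled


# Hypothetical 12-bit pixel values from two different detectors.
raw = [[0, 2048], [4095, 1024]]
normalized = normalize_pixels(raw)
inverted = normalize_pixels(raw, "MONOCHROME1")
```

After this step, images from both conventions share the same value range and the same polarity, so the model is less likely to learn a scanner's storage convention instead of the anatomy.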

Here at PYCAD, this is our world. We build custom web DICOM viewers and integrate them into sophisticated medical imaging web platforms—all designed to work with perfectly prepared DICOM data. These platforms become the interactive spaces where clinicians can see and act on the insights our AI models generate.

To really grasp how vital proper data management is in a real-world clinical setting, you should check out our deep dive into the complexities of the image acquisition process. A well-organized dataset is the first, most important step toward building a system that can genuinely change patient outcomes. Feel free to explore some of our work in our portfolio.

The Art of Precise Image Annotation

An AI model is only as brilliant as the data it learns from. In medical imaging, that learning process begins with annotation—the meticulous, human-guided art of labeling X-ray images. This is where we transform raw pixels into meaningful lessons for a machine. Think of it as the critical bridge where a radiologist's years of experience get translated into a language an algorithm can finally understand.

This isn't just a theoretical exercise; it's a hands-on, practical skill. The whole point is to show the AI exactly what to look for, pixel by pixel. It’s far more than just circling a problem area. It demands specific, structured techniques that turn complex medical observations into actionable data.

Before you can even start annotating, you need a solid data preparation workflow. This usually involves taking the initial DICOM scan, ensuring it's properly anonymized, and then normalizing it for consistency.

Getting this workflow right ensures every image is secure and uniform, creating a reliable foundation for the detailed labeling that comes next.

Translating Expertise Into Data

How you annotate an image depends entirely on what you want the AI to learn. Different diagnostic goals call for different labeling methods, and each one guides the model's focus in a unique way.

Here are a few of the most common approaches I've used:

- Bounding Boxes: This is your go-to for speed and simplicity. You just draw a rectangle around an object of interest. It's perfect for object detection tasks, like training a model to find a potential nodule or spot a medical device in a chest X-ray. It basically answers the question, "Is something here, and what's its general location?"

- Polygons: When you need more precision, polygons are the way to go. By drawing a series of connected points, you can trace the exact shape of an anatomical structure, like the contour of a lung or the specific border of a tumor. This is absolutely essential for segmentation, which allows models to measure size and shape (and, on 3D scans, volume) with high accuracy.

- Keypoints: Sometimes, the goal isn't to outline a whole region but to pinpoint specific anatomical landmarks. Keypoint annotation is just that: placing single dots on critical points, like the vertebrae in a spine or the joints in a hand. This technique is invaluable for any task involving alignment, posture analysis, or measuring angles.
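The three annotation types above boil down to simple geometry. The following sketch shows one possible way to represent them and the kind of measurement each one supports. The `BoundingBox` class, the helper names, and the coordinates are assumptions made for illustration, not the format of any particular annotation tool.

```python
import math
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Axis-aligned rectangle in pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def polygon_area(points):
    """Area enclosed by a list of (x, y) vertices, via the shoelace formula."""
    total = 0.0
    for i in range(len(points)):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def angle_at(vertex, a, b):
    """Angle in degrees at `vertex` between the rays towards keypoints a and b."""
    v1 = (a[0] - vertex[0], a[1] - vertex[1])
    v2 = (b[0] - vertex[0], b[1] - vertex[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    cosine = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cosine))


# Hypothetical annotations on one image.
box = BoundingBox(10, 20, 50, 80, label="nodule")
outline = [(0, 0), (4, 0), (4, 3), (0, 3)]
joint_angle = angle_at((0, 0), (1, 0), (0, 1))
```

A box only gives a rough extent, a polygon gives an area that follows the real outline, and keypoints give angles and distances between landmarks.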

Choosing the right technique is the first step. But it's the consistency in applying it that will make or break your project.

The quality of your annotations directly sets the performance ceiling for your AI model. A model trained on noisy, inconsistent labels will never be reliable, no matter how sophisticated the algorithm. "Garbage in, garbage out" is the one unbreakable law of machine learning.
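One common way to put a number on label consistency is to have two annotators mark the same finding and compare their boxes with intersection over union (IoU). The sketch below is a minimal version of that check. The `iou` function, the box format `(x_min, y_min, x_max, y_max)`, and the sample coordinates are assumptions for illustration, and any agreement threshold you choose is a project decision rather than a standard.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Hypothetical boxes drawn by two annotators around the same finding.
annotator_1 = (0, 0, 10, 10)
annotator_2 = (5, 0, 15, 10)
agreement = iou(annotator_1, annotator_2)
```

A value of 1.0 means the two boxes are identical and 0.0 means they do not overlap at all. Low scores across many images usually point to guidelines that need tightening.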

Choosing the Right Annotation Tool for Your Project

So, we've covered the 'what' and 'why' of annotation. The 'how' really comes down to having the right tools and a rock-solid set of instructions. Finding the perfect software is a crucial first step. The table below compares a few popular options to help you see what might fit your project's needs.

| Tool | Primary Use Case | Key Features | Best For |
|---|---|---|---|
| Labelbox | General-purpose data labeling | Collaborative workflows, QA tools, API integration | Large teams working on diverse image types (classification, segmentation). |
| CVAT | Open-source, versatile annotation | Supports video & image, multiple annotation types | R&D projects, academic use, or teams needing a flexible, free tool. |
| 3D Slicer | Medical image segmentation & visualization | DICOM support, advanced 3D rendering, segmentation tools | Complex 3D medical imaging analysis, especially for research. |
| V7 | Automated & AI-assisted annotation | Auto-annotate features, model-in-the-loop workflow | Teams looking to accelerate labeling with AI assistance for large datasets. |

Our team has spent countless hours testing these platforms and more. If you want to go deeper, you can explore our detailed review of the best image annotation tools to find the perfect match.

The Human Element: Tools and Guidelines

Even with the best software in the world, human error is always the biggest variable. This is where clear, comprehensive annotation guidelines become your project's North Star. These guidelines must be incredibly specific, leaving absolutely no room for interpretation.

For instance, when labeling a lung nodule, your guidelines should explicitly define:

- What is the minimum size to be considered a nodule?

- Should surrounding inflammation be included inside the polygon?

- How should a nodule that's only partially visible at the edge of the scan be handled?

At PYCAD, this is our bread and butter. We specialize in building the infrastructure that makes this whole process feel seamless. We build custom web DICOM viewers and integrate them into medical imaging web platforms, often including sophisticated, built-in annotation modules. These integrated systems ensure the annotation process is tightly linked with the viewing and analysis workflow, which I've found is a huge factor in maintaining both quality and efficiency.

Ultimately, mastering X-ray analysis in the age of AI means becoming a master of annotation. It's a discipline that demands clinical knowledge, precision, and a deep understanding of what you're trying to teach the machine. When you get it right, you're not just labeling images—you're creating the very intelligence that will one day help save lives.

Training AI Models for X-Ray Analysis

Once your data is perfectly prepped and meticulously annotated, it’s time for the magic to happen. This is where we breathe life into the AI model, moving from careful preparation to actual creation. Think of it as turning raw, structured data into an intelligent system that can see things the human eye might otherwise miss. It's a truly inspiring part of the process.

At the very core of modern computer vision, you'll find an engine called the Convolutional Neural Network (CNN). Imagine a CNN as a series of highly specialized filters. As an X-ray image passes through its layers, each one learns to spot progressively more complex features. The first few layers might pick up on simple edges and textures, while the deeper layers learn to recognize intricate anatomical structures and the subtle signs of disease.

This layered learning gives the model a rich, hierarchical understanding of the image, which is exactly why it’s so incredibly effective for medical imaging tasks.
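To see what a single filter does, here is a small sketch of the sliding-window operation at the heart of a convolutional layer, written in plain Python. It applies a hand-picked Sobel kernel to a tiny synthetic image with a vertical edge. In a real CNN the kernel values are learned during training rather than chosen by hand, and the `convolve2d` function and sample image here are assumptions for illustration.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(k_h):
                for j in range(k_w):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output


# Sobel kernel that responds to left-to-right brightness changes.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Synthetic 4x6 image: dark on the left, bright on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]
edges = convolve2d(image, SOBEL_X)
print(edges)
```

The output is zero over the flat regions and large where the window straddles the dark-to-bright boundary, which is exactly the kind of simple edge response the first layers of a network tend to learn.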

Matching the Model to the Mission

Not all AI models are built the same; the right one for your project depends entirely on the clinical question you’re trying to answer. Choosing the correct model architecture isn't just a technical detail—it's absolutely crucial for success.

Here are the main players in the world of X-ray analysis:

- Classification Models: These are your workhorses for answering a simple "yes or no" question about an image. A classic example is a model that looks at a chest X-ray and classifies it as either showing signs of pneumonia or being normal. The output is a clear, straightforward label.

- Object Detection Models: Taking it a step further, these models don't just identify an issue; they pinpoint its location with a bounding box. A great real-world use case is training a model to draw a box around a suspected lung nodule or highlight a bone fracture.

- Segmentation Models: When you need the highest level of precision, segmentation models are the answer. Instead of a simple box, these models meticulously outline the exact shape of a pathology or an anatomical feature, right down to the pixel. This is vital for tasks that demand precise measurements, like calculating the total area of a damaged lung region or, on 3D modalities such as CT, the volume of a tumor.
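Once a segmentation model has produced a mask, turning it into a physical measurement is mostly arithmetic: count the marked pixels and multiply by the size of one pixel. The sketch below assumes a binary mask as a nested list and a pixel spacing in millimetres taken from the image header. The `mask_area_mm2` function and the sample values are assumptions for illustration.

```python
def mask_area_mm2(mask, pixel_spacing_mm):
    """Area covered by a binary mask, in square millimetres.

    mask: 2D list where truthy values mark the segmented region.
    pixel_spacing_mm: (row_spacing, column_spacing) of one pixel in mm.
    """
    row_mm, col_mm = pixel_spacing_mm
    pixel_count = sum(1 for row in mask for value in row if value)
    return pixel_count * row_mm * col_mm


# Hypothetical 4x4 mask with six segmented pixels, 0.5 mm x 0.5 mm each.
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
area = mask_area_mm2(mask, (0.5, 0.5))
```

On projection X-rays, the spacing recorded for the detector does not automatically equal the size of the anatomy, because of geometric magnification, so measurements like this should be treated as estimates unless the image has been calibrated.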

The groundwork for today's AI was laid decades ago. X-ray technology has come a long way since its discovery, with the early 1970s bringing us computed tomography (CT), a game-changing method for creating detailed cross-sectional images. Then, the 1980s introduced computed radiography, followed by flat-panel digital radiography in the 1990s, which made image acquisition faster and opened the door to computerized processing. These digital systems have since become the global standard, and they are the very reason modern AI analysis is even possible. You can discover more about the history of X-ray evolution and its profound impact on medicine.

The Power of Transfer Learning

If you were to build a medical AI model from scratch, you'd need a staggering amount of labeled data—often millions of images. In medicine, datasets of that size are incredibly rare and prohibitively expensive to create. This is where transfer learning completely changes the game.

The idea is both simple and brilliant. Instead of starting from zero, you begin with a model that has already been trained on a massive, general-purpose image dataset like ImageNet. This pre-trained model already understands fundamental visual features like edges, shapes, and textures. From there, you "fine-tune" this model using your smaller, specialized set of X-ray images.

Transfer learning is like hiring an experienced professional instead of a fresh graduate. The model already has a vast base of knowledge; you just need to teach it the specific nuances of your medical task. This approach dramatically reduces the amount of data and computational power needed to achieve exceptional results.

This technique is what makes it possible for teams to build highly accurate models even with limited medical data, unlocking incredible potential for innovation in virtually any clinical specialty.
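The mechanics of the simplest form of transfer learning, training a new head on top of frozen features, can be sketched without any deep learning framework. In the toy example below, `frozen_backbone` is a hand-written stand-in for a pretrained network, and only a small logistic-regression head is trained. Every name, the tiny 2x2 "images", and the hyperparameters are assumptions for illustration. A real project would load a pretrained model such as ResNet from a deep learning library and fine-tune it on actual X-rays.

```python
import math


def frozen_backbone(image):
    """Toy stand-in for a pretrained network: image -> feature vector.

    Nothing in here is updated during training, which mirrors freezing
    the pretrained layers of a real model.
    """
    flat = [v for row in image for v in row]
    mean_intensity = sum(flat) / len(flat)
    edge_strength = sum(
        abs(row[i + 1] - row[i]) for row in image for i in range(len(row) - 1)
    )
    return [mean_intensity, edge_strength]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(images, labels, epochs=1000, lr=0.5):
    """Fit only a small logistic-regression head on the frozen features."""
    features = [frozen_backbone(img) for img in images]
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(image, weights, bias):
    """Probability of label 1 for one image."""
    x = frozen_backbone(image)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical 2x2 "images": smooth patches (label 0) vs. high-contrast ones (label 1).
train_images = [
    [[0.2, 0.2], [0.2, 0.2]],
    [[0.1, 0.9], [0.9, 0.1]],
    [[0.6, 0.6], [0.6, 0.6]],
    [[0.8, 0.2], [0.3, 0.7]],
]
train_labels = [0, 1, 0, 1]
weights, bias = train_head(train_images, train_labels)
```

Because the feature extractor is never updated, only three numbers are learned here, which is why four examples are enough. The same idea, at a much larger scale, is what lets a pretrained network adapt to a modest medical dataset.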

An Iterative Journey to Accuracy

Training an AI model isn't something you do just once. It's a continuous, iterative cycle of training, validating, and testing. To do this right, you’ll split your annotated dataset into three distinct parts.

- Training Set: This is the largest chunk of your data, typically 70-80%, and it’s used to teach the model how to spot patterns.

- Validation Set: This smaller set (10-15%) is used to periodically check the model's performance on data it hasn't seen before. It’s your guide for tuning the model and preventing it from just memorizing the training images.

- Test Set: Kept completely separate until the very end, this final set (10-15%) gives you an unbiased, real-world evaluation of how the model will perform.
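One detail worth getting right when you create these three sets is splitting by patient rather than by image, so that two X-rays of the same person never land on both sides of a split and inflate your test results. The sketch below shows one way to do that with the standard library. The `split_by_patient` function, the 70/15/15 fractions, the seed, and the record format are assumptions for illustration.

```python
import random
from collections import defaultdict


def split_by_patient(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Split (patient_id, image_path) records into train/val/test by patient."""
    images_by_patient = defaultdict(list)
    for patient_id, image_path in records:
        images_by_patient[patient_id].append(image_path)

    patient_ids = sorted(images_by_patient)
    random.Random(seed).shuffle(patient_ids)  # seeded for reproducibility

    n_train = round(len(patient_ids) * train_frac)
    n_val = round(len(patient_ids) * val_frac)
    groups = {
        "train": patient_ids[:n_train],
        "val": patient_ids[n_train:n_train + n_val],
        "test": patient_ids[n_train + n_val:],
    }
    return {
        name: [(pid, path) for pid in ids for path in images_by_patient[pid]]
        for name, ids in groups.items()
    }


# Hypothetical dataset: 20 patients with 2 images each.
records = [(f"P{i:03d}", f"img_{i}_{j}.dcm") for i in range(20) for j in range(2)]
splits = split_by_patient(records)
```

The patient identifier used here can be the pseudonym produced during anonymization, so the split stays leak-free without ever touching real identities.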

Here at PYCAD, we know that a trained model is only truly useful when a clinician can interact with it seamlessly. That’s why we build custom web DICOM viewers and integrate them into medical imaging web platforms. This creates an environment where a doctor can see AI predictions directly overlaid on the X-ray, making the insights immediately actionable.

Feel free to browse our portfolio to see how these integrated systems come to life. The ultimate goal is to build a model that is not only accurate but also robust and generalizable—one that’s ready to make a real difference in a clinical setting.

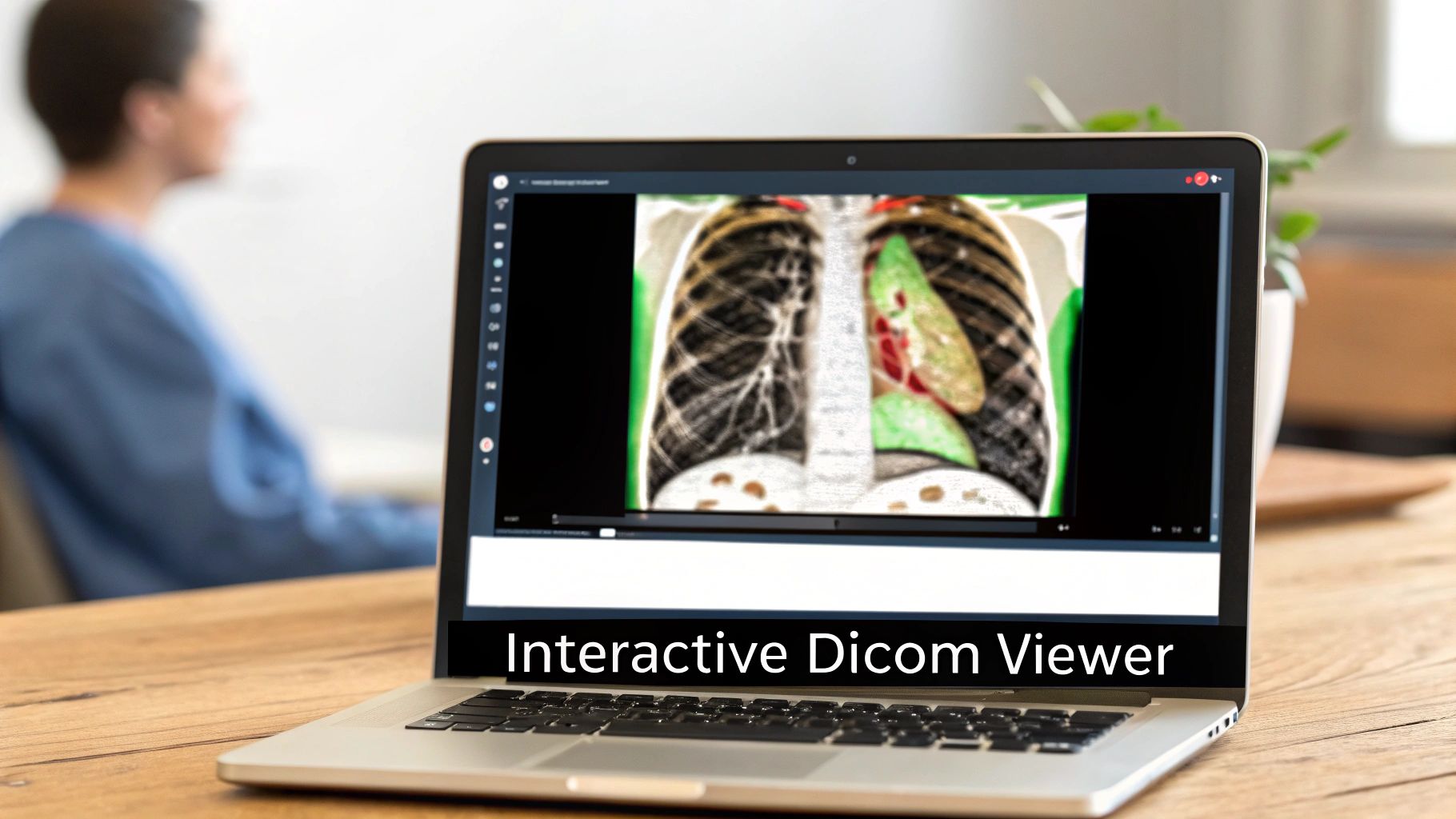

Bringing AI Insights to Life with a Custom DICOM Viewer

An AI model’s predictions are just numbers until a clinician can actually see and interact with them. This is where we bridge the gap between a powerful algorithm and a confident clinical diagnosis—the final, most inspiring step of the journey.

A standard image viewer just doesn’t cut it when the stakes are this high.

This is where the magic truly unfolds. We’re not just looking at a picture; we’re integrating your model’s findings directly onto the X-ray, turning a static, grayscale image into a dynamic, interactive diagnostic tool.

Beyond a Simple Picture Viewer

You can't just open a medical scan in a generic JPEG viewer. Why? Because you'd lose all the vital metadata embedded within the DICOM file—everything from patient orientation to the specific scanner settings. This context is absolutely essential for a correct interpretation.

A specialized web-based DICOM viewer is designed to handle this complexity, ensuring the image is always displayed accurately. But a custom viewer takes this a giant leap further. It's built to become an integral part of the diagnostic workflow, the very platform where AI insights become visible, tangible, and actionable.

An AI model might be 95% accurate, but that number means nothing if a clinician can’t see why the model made its decision. A custom viewer makes AI explainable by visualizing its findings right on the scan, building trust and enabling verification.

This is exactly what we specialize in at PYCAD. We build custom web DICOM viewers and integrate them into medical imaging web platforms, creating environments where clinicians can seamlessly interact with AI-generated data.

Making AI Tangible for Clinicians

Think of it this way: a custom viewer is the difference between looking at a static paper map and using a live, interactive GPS. It’s not just about showing an image; it’s about creating an experience tailored to what medical professionals actually need.

Here are just a few ways a custom DICOM viewer brings AI to life:

- Interactive Heatmaps: Instead of a simple "positive" or "negative" result, the viewer can overlay a heatmap showing the exact areas the model found suspicious. This immediately draws a radiologist's attention where it's needed most.

- Dynamic Segmentation Overlays: For a model that identifies anomalies, the viewer can draw the precise outlines directly onto the image. A clinician can then adjust the borders, measure the area, and instantly validate the finding.

- Collaborative Annotation: Imagine multiple experts reviewing the same complex case from different locations, adding their own notes in real-time. This is invaluable for tough diagnoses and for training junior staff.

- Side-by-Side Comparisons: A custom viewer can pull up a patient's current X-ray right next to their previous scans, with AI-flagged changes highlighted automatically. Tracking disease progression becomes incredibly efficient.
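Under the hood, a heatmap overlay like the one described above is an alpha blend between the grayscale image and a color that encodes the model's attention. The sketch below shows the idea in plain Python, tinting pixels red in proportion to the heatmap value. The `overlay_heatmap` function, the red-only color scheme, and the default `alpha` are assumptions for illustration. A web viewer would do the same blending on a canvas or on the GPU.

```python
def overlay_heatmap(gray, heatmap, alpha=0.4):
    """Blend a heatmap onto a grayscale image as a red tint.

    gray, heatmap: 2D lists of floats in [0, 1] with the same shape.
    Returns a 2D list of (r, g, b) tuples with values 0-255.
    """
    blended = []
    for gray_row, heat_row in zip(gray, heatmap):
        row = []
        for g, h in zip(gray_row, heat_row):
            weight = alpha * h  # stronger activation means more red
            red = (1 - weight) * g + weight
            green_blue = (1 - weight) * g
            row.append((round(red * 255), round(green_blue * 255), round(green_blue * 255)))
        blended.append(row)
    return blended


# Hypothetical one-row image: the model ignores the first pixel and flags the second.
gray = [[0.2, 0.2]]
heatmap = [[0.0, 1.0]]
rgb = overlay_heatmap(gray, heatmap, alpha=1.0)
```

Where the heatmap is zero the original gray value passes through untouched, so the underlying anatomy stays readable outside the flagged region. Lower `alpha` values keep it visible inside the region too.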

To build these kinds of powerful tools, you have to understand the very structure of the files they handle. For a deeper dive, check out our guide on how to read DICOM files.

The Future of Diagnostic Confidence

When a doctor can see a model’s prediction overlaid on an X-ray, review the highlighted region, and use specialized tools to measure and analyze it, their confidence skyrockets. The AI is no longer a "black box" but a trusted, transparent assistant.

This synergy between human expertise and machine intelligence is what truly elevates the entire process. It improves diagnostic speed, enhances accuracy, and ultimately leads to better patient outcomes.

At PYCAD, we are dedicated to building these crucial bridges between algorithms and clinical practice. Our work is all about creating the platforms that make these advanced interactions possible. To see what a truly integrated system looks like, we invite you to explore the solutions we've built for our clients on our portfolio page. It’s where data becomes diagnosis.

Have a Few Questions?

Diving into the world of AI for medical imaging can feel like exploring new territory. It's only natural to have questions as you chart your course. Think of this as a conversation with a seasoned guide—here are some of the most common things people ask when they're ready to bring their vision for X-ray analysis to life.

What's the Toughest Part of Kicking Off an AI Project with X-Rays?

Hands down, the biggest roadblock is almost always the data. Getting your hands on a large, clean, and well-annotated set of X-ray images is the first—and often most daunting—challenge. Medical data is, for very good reason, locked down by privacy rules like HIPAA. This means thorough anonymization isn't just a good idea; it's a mandatory first step before you can even think about training a model.

On top of that, X-rays themselves are incredibly varied. Images from different machines, hospitals, or even technicians can have subtle differences in exposure, contrast, and patient positioning. This variability means you have to be meticulous about cleaning and normalizing your data. If you don't, your model might end up learning the quirks of a specific machine instead of the actual medical patterns you're trying to find. This initial data work is where a project truly finds its foundation for success.

Can I Really Use an Off-the-Shelf AI Model for My X-Ray Project?

Absolutely. In fact, you'd be putting yourself at a huge disadvantage if you didn't. This approach is called transfer learning, and it’s a game-changer. Models like ResNet or VGG are available with weights already trained on more than a million general-purpose images from ImageNet, so they're experts at identifying basic features like edges, shapes, and textures.

When you fine-tune one of these pre-trained models with your specialized X-ray dataset, you’re not starting from zero. You're giving it an advanced education on your specific problem. This lets you achieve incredible accuracy with much less data and in a fraction of the time. For medical imaging, where massive, perfectly labeled datasets are rare, this isn't just a shortcut—it's smart strategy.

Think of transfer learning like hiring a brilliant specialist. They already have their medical degree; you're just training them on the specific nuances of your clinic's imaging equipment and patient population.

Why Do I Need a Custom DICOM Viewer? Can't I Just Use a Regular Image Viewer?

A standard image viewer that opens JPEGs or PNGs is blind to the wealth of information tucked inside a DICOM file. It's like trying to read a patient's chart by only looking at the cover. A DICOM file contains a treasure trove of metadata—patient details, acquisition settings, and other clinical context—that is completely invisible to a normal viewer.

A specialized DICOM viewer can read and display all that crucial information. But a custom viewer, like the ones we build at PYCAD, takes it to a whole new level. We don't just build viewers; we build custom web DICOM viewers and integrate them into medical imaging web platforms. This creates a seamless workflow designed around your specific clinical needs. It allows you to overlay AI-driven insights directly onto the scan, turning a static image into a dynamic, interactive diagnostic tool. You can see what this looks like in action over in our portfolio.

How Can I Be Sure the AI Isn't Just a "Black Box"?

That's the million-dollar question, and it gets to the heart of building trust in medical AI. We call this "explainability." One of the most powerful ways to peek inside the AI's "mind" is with a technique called Grad-CAM (Gradient-weighted Class Activation Mapping). It generates a coarse heatmap over the X-ray, visually showing you the regions of the image that most influenced the model's conclusion.

This lets a radiologist see, at a glance, if the AI is looking at the correct pathology or just some random artifact. It's an instant gut check. Beyond that, the only way to build real-world confidence is through relentless testing. You have to validate your model on diverse datasets from different hospitals and patient populations to ensure it's robust, unbiased, and truly ready for clinical use. Transparency is what turns a mysterious algorithm into a trusted collaborator.
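Validating across hospitals is easier to act on when the results are broken down per site instead of averaged into one number. The sketch below computes sensitivity and specificity for each site from a list of predictions. The `per_site_metrics` function, the site names, and the sample results are assumptions for illustration, not real evaluation data.

```python
from collections import defaultdict


def per_site_metrics(results):
    """Sensitivity and specificity per site.

    results: iterable of (site, true_label, predicted_label) with labels 0 or 1.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for site, truth, predicted in results:
        if truth == 1:
            key = "tp" if predicted == 1 else "fn"
        else:
            key = "fp" if predicted == 1 else "tn"
        counts[site][key] += 1

    metrics = {}
    for site, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        metrics[site] = {
            # None signals that the site had no cases of that class.
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return metrics


# Hypothetical results from two sites.
results = [
    ("hospital_a", 1, 1), ("hospital_a", 1, 1), ("hospital_a", 0, 0), ("hospital_a", 0, 0),
    ("hospital_b", 1, 0), ("hospital_b", 1, 1), ("hospital_b", 0, 1), ("hospital_b", 0, 0),
]
site_metrics = per_site_metrics(results)
```

A model that looks strong on average can still perform poorly at one site, and a breakdown like this is often the first hint that it has learned scanner quirks rather than pathology.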

At PYCAD, our passion is crafting the technology that makes these breakthroughs possible. We build secure, intuitive web platforms with custom DICOM viewers and integrated AI that empower healthcare professionals and inspire a new wave of innovation.