Think of data preprocessing as the essential, often unseen, groundwork of any great data project. It's the process of taking raw, messy, and chaotic information and methodically cleaning, shaping, and organizing it. The goal? To create a high-quality, structured dataset that machine learning models and analytics tools can actually understand and use.

This isn't just a technical step; it's the foundation that ensures the reliability and accuracy of every insight you hope to uncover.

The Hidden Foundation of Modern AI

Imagine trying to build a magnificent skyscraper on a foundation of loose sand and shifting pebbles. It doesn’t matter how brilliant your architectural plans are—the entire structure is doomed from the start. The very same principle holds true when you feed raw data to an artificial intelligence model. Without a solid, well-prepared base, the whole project is compromised before it even begins.

This is precisely where data preprocessing shines. It’s far more than just a technical chore. It's the art of sculpting raw information into something truly valuable, transforming a jumble of inconsistent data into a clean, structured, and reliable asset. It’s the unsung hero behind every accurate prediction, every smart decision, and every groundbreaking discovery.

Why This First Step Is Everything

Data pulled from the real world is rarely, if ever, perfect. It’s often incomplete, riddled with inconsistencies, and full of errors. You might find missing values, duplicate entries, or formats that algorithms simply can't process. Trying to analyze this kind of data "as is" is a recipe for disaster, leading to skewed results, faulty models, and deeply flawed conclusions.

Data preprocessing is the foundational pillar that supports the entire data science lifecycle. It’s not a small task, either. Studies consistently show that data scientists can spend up to 80% of their time on this meticulous preparation, a number that speaks volumes about its importance.

To get a clearer picture, here’s a quick breakdown of what data preprocessing actually involves.

A Quick Answer to What Is Data Preprocessing

At its core, data preprocessing is a series of steps used to clean and organize raw data, making it suitable for machine learning models and data analysis.

| Stage | What It Does | Example |

|---|---|---|

| Data Cleaning | Fixes or removes incorrect, corrupt, or duplicate data. | Deleting a patient record that was accidentally entered twice. |

| Data Transformation | Converts data into a more appropriate format or structure. | Scaling all patient ages to a range between 0 and 1 (normalization). |

| Data Reduction | Reduces the volume of data while preserving its integrity. | Using Principal Component Analysis (PCA) to reduce the number of features. |

Ultimately, this careful preparation builds a dataset you can genuinely trust.

This process involves several key activities that come together to create that trustworthy dataset:

- Cleaning the Slate: This is all about finding and correcting errors, figuring out how to handle missing values, and getting rid of any duplicate records.

- Creating Harmony: It’s about standardizing data formats and bringing information from different sources into a single, unified structure so everything speaks the same language.

- Refining the Material: This involves transforming the data to make it more suitable for modeling, like scaling numerical values or encoding categorical variables so an algorithm can work with them.

Grasping what data preprocessing is and why it matters is vital for anyone hoping to unlock the true power of data. In highly specialized fields like medical imaging, this process is absolutely critical.

Here at PYCAD, we live and breathe this reality. We at PYCAD, build custom web DICOM viewers and integrate them into medical imaging web platforms where flawless data preparation isn't just best practice—it's a clinical necessity. The quality of our work, which you can explore in our portfolio, depends entirely on our ability to turn complex medical data into a pristine asset for analysis. This section is just the beginning of a deeper journey into this essential discipline.

Why Data Preprocessing Is the Unsung Hero of Machine Learning

In the world of AI, it’s the flashy algorithms and complex models that usually grab the headlines. We get excited about their predictive power and how they solve incredible problems. But behind every single one of those successes, there's a silent hero working in the shadows: data preprocessing. It’s the essential, often-overlooked foundation that truly makes or breaks any data-driven project.

The entire field runs on a simple, timeless principle: "garbage in, garbage out." It doesn't matter how sophisticated your algorithm is. If you feed it messy, inconsistent, or just plain wrong data, you'll get unreliable results. Every time. Preprocessing is the gatekeeper that turns that raw, chaotic information into something structured and valuable, unlocking its true potential.

Boosting Model Accuracy and Reliability

So, what’s the most immediate payoff for all this upfront work? A massive leap in your model's accuracy. Raw data is almost always a minefield of missing values, typos, and mismatched formats. All of this acts like static noise, completely confusing the learning process. By cleaning and standardizing your dataset, you're essentially clearing away that static so the model can hear the real signal.

Think of it like a detective trying to solve a case. If the clues are incomplete, contradictory, or written in a dozen different languages, their chances of cracking it are pretty slim. Data preprocessing is the equivalent of meticulously organizing all the evidence, translating the notes, and filling in the blanks. You’re giving the detective—your AI model—the best possible shot at finding the right answer.

This isn’t just theory. A model trained on clean data isn't just more accurate; it's also more robust. It learns from genuine patterns, not random noise, which means it performs far more reliably when it encounters new, real-world data. The critical role of solid data is often underestimated, but as discussions around poor data infrastructure and its implications highlight, it’s the absolute bedrock of machine learning.

Enhancing Efficiency and Preventing Errors

Beyond just getting the right answer, good preprocessing makes everything run more smoothly. Raw datasets can be huge and unnecessarily complex. Techniques like dimensionality reduction help simplify things by removing redundant information without sacrificing what's important. This means your models have less data to churn through, which slashes training times and saves on computational costs.

Investing time in data preprocessing is not a detour; it is the most direct path to a reliable and powerful AI. It prevents the catastrophic—and costly—errors that arise from biased or flawed data.

Take a real-world example. Without proper normalization, features with huge numerical ranges can completely dominate a model's calculations, leading to skewed results. Imagine a medical algorithm looking at patient data. If one lab result with a massive range isn't scaled correctly, it could easily overshadow vital signs like blood pressure or heart rate. The result? Dangerously wrong predictions.

This is a challenge we at PYCAD tackle head-on every single day. We at PYCAD, build custom web DICOM viewers and integrate them into medical imaging web platforms, where there is absolutely no room for error. Making sure that data coming from different scanners is normalized and cleaned is fundamental to creating diagnostic tools that clinicians can actually trust. You can see how we put this into practice in our portfolio.

As more and more businesses rely on data to make decisions, the tools for preprocessing are getting smarter. In 2024, a staggering 72% of firms confirmed that data-driven growth was a critical priority, fueling investment in better data management. Now, AI is being built directly into data prep platforms to automate tasks like cleaning and feature extraction, drastically cutting down on manual effort, as seen with solutions from IBM. It’s clear proof that putting resources into preprocessing isn't just a best practice—it's the cornerstone of building AI systems that deliver real-world value.

Core Techniques That Transform Raw Data into Gold

Data preprocessing isn't just one step; it's a whole symphony of techniques working together to refine raw information. Picture a master sculptor meticulously shaping a block of marble. Every chip, every polish, and every precise cut is intentional, all designed to reveal the masterpiece hidden inside. These methods are the tools that turn chaotic, messy data into a structured, reliable asset that’s truly ready for analysis.

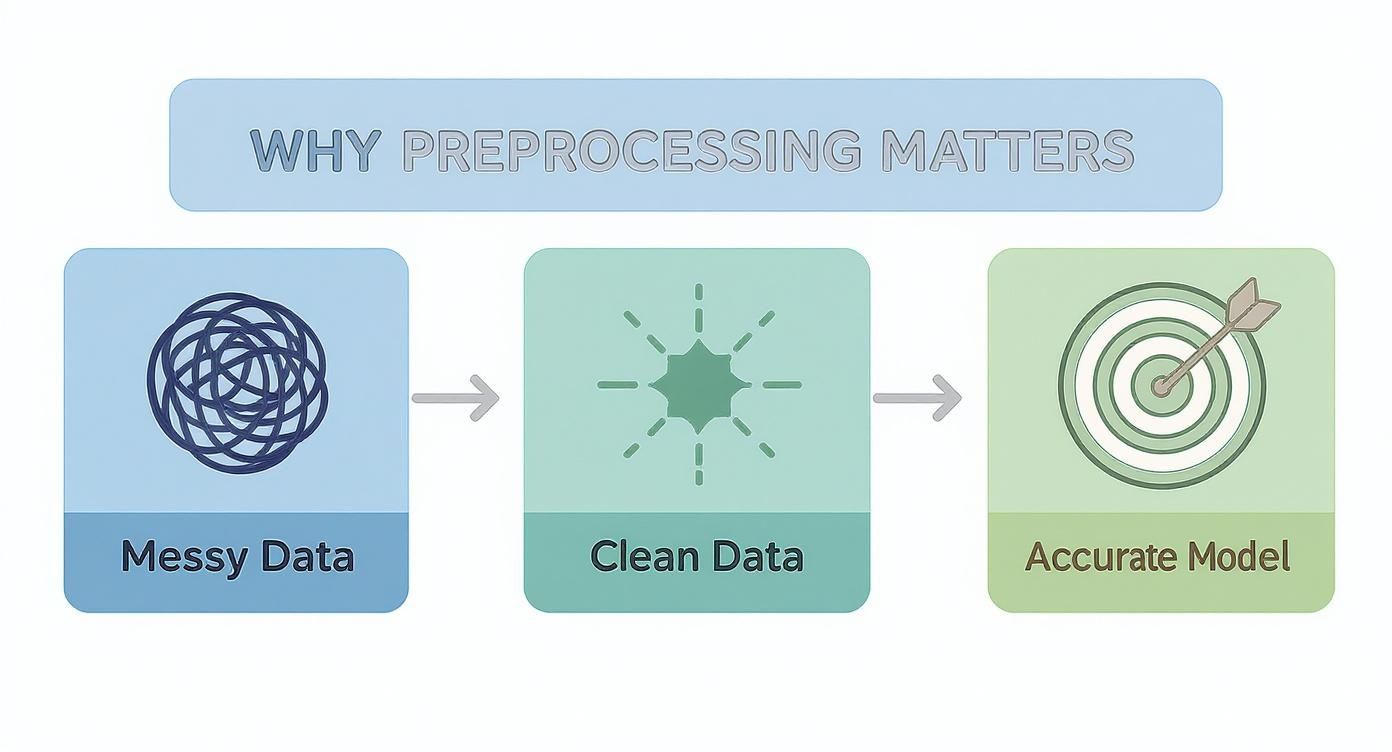

This visual flow shows the journey from tangled, raw data to a clean, usable state—the secret sauce for achieving accurate and reliable model outcomes.

As you can see, without that crucial middle step of cleaning and organizing, raw data leads straight to flawed results. It’s a powerful reminder that preprocessing isn't just a "nice-to-have"; it's completely non-negotiable.

Let's dive into the core techniques that make this transformation possible.

The Art of Data Cleaning

The journey always begins with data cleaning. This is where we roll up our sleeves to find and fix—or sometimes remove—the errors, inconsistencies, and inaccuracies hiding in a dataset. Think of it as a meticulous quality control check for your data, making sure the very foundation of your analysis is solid.

Imagine a patient database where ages are sometimes entered as "999" or names have common typos. An AI model trying to learn from this would get confused, treating "999" as a real age or thinking "John Smith" and "Jhon Smith" are two different people. Data cleaning is what solves these exact problems.

Common tasks in data cleaning include:

- Handling Missing Values: What do you do with empty cells? You have to decide whether to fill them with a calculated value (like the mean or mode) or a placeholder. If a record is missing too much information, the best move might be to remove it entirely.

- Correcting Inconsistent Formats: Data pulled from different systems rarely plays by the same rules. One might use "MM-DD-YYYY" for dates, while another uses "YYYY/MM/DD." Cleaning standardizes everything into one consistent format.

- Removing Duplicates: Identical records can seriously skew your results by giving too much weight to a single data point. Finding and getting rid of these duplicates is absolutely essential for maintaining the integrity of your dataset.

Data cleaning isn't about mindlessly deleting bad data. It's about making smart, strategic decisions to keep as much valuable information as you can while cutting out the noise that could derail your entire project.

This process is absolutely vital in high-stakes fields like healthcare. At PYCAD, we know a single misplaced decimal in a medical image's metadata can have serious consequences. It's why our preprocessing pipelines are built with an unwavering focus on meticulous data cleaning, especially when we build custom web DICOM viewers and integrate them into medical imaging web platforms.

If you want to go deeper on how this applies to model building, our comprehensive guide on data preprocessing for machine learning is a great place to start.

Harmonizing Data Through Transformation

Once your data is clean, the next step is data transformation. This is all about converting data into a structure or format that’s better suited for your model. It’s like creating a level playing field where every feature can be compared fairly and accurately.

A huge part of this is normalization and scaling. Let’s say you’re analyzing patient data with two features: age (ranging from 0-100) and white blood cell count (ranging from 4,000-11,000). Without transforming this data, an algorithm might mistakenly think the blood cell count is thousands of times more important just because its numbers are bigger.

Normalization fixes this by scaling these values to a common range, like 0 to 1, ensuring no single feature dominates the analysis just because of its scale. It allows the model to see the true impact of each variable. Another key technique here is encoding, where we convert categorical data (like "Male" or "Female") into a numerical format that algorithms can actually understand.

Simplifying Datasets With Data Reduction

Finally, we have data reduction. Now, it might sound strange to reduce the amount of data you've worked so hard to prepare, but this step is all about simplifying the dataset without losing its essential information. Huge, complex datasets can be incredibly slow and expensive to process.

Data reduction strategies find a more compact, yet equally meaningful, representation of the data. This is typically done in two ways:

- Feature Selection: This is about identifying and keeping only the most relevant features. If you have a hundred variables but find that only ten of them really impact your final outcome, focusing on those ten can make your model faster and, surprisingly, often more accurate.

- Dimensionality Reduction: Techniques like Principal Component Analysis (PCA) create new, combined features (called principal components) from the original ones. This lets you capture most of the dataset's story with far fewer variables, simplifying the problem without a major loss of information.

To give you a clearer picture, here’s how these powerful techniques stack up.

Key Data Preprocessing Techniques At A Glance

| Technique | Primary Goal | Common Methods |

|---|---|---|

| Data Cleaning | To fix or remove errors, inconsistencies, and inaccuracies. | Imputation (mean, median, mode), removing duplicates, handling outliers. |

| Data Transformation | To convert data into a suitable format for modeling. | Normalization (Min-Max), Standardization (Z-score), encoding, log transforms. |

| Data Reduction | To simplify the dataset without losing critical information. | Principal Component Analysis (PCA), feature selection, sampling. |

Each of these core techniques—cleaning, transformation, and reduction—is a critical piece of the puzzle. When brought together, they create a robust, efficient, and reliable dataset that forms the bedrock of any successful AI or data science project. You can see how we put these principles into action by exploring some of our work in the PYCAD portfolio.

Why Data Preprocessing Is Your Secret Business Weapon

Think of data preprocessing as more than just a technical chore. It's the essential first step that turns raw, messy data into a powerful strategic asset. In a world where every major decision hinges on data, the quality of that information determines the quality of your insights. Smart leaders get this: spending time and effort on data preparation isn't a cost—it's a direct investment in the reliability of your entire analytics engine.

This isn't about tidying up a few spreadsheets. It's about unlocking the true potential hidden within your information. When your data is clean, consistent, and well-structured, it becomes the foundation for game-changing initiatives, from marketing campaigns that genuinely connect with customers to predictive maintenance that can save millions.

Gaining a Competitive Edge, One Clean Dataset at a Time

The real value of data preprocessing snaps into focus when you see it in action. Every industry has its own unique data headaches, but the reward for solving them is always the same: a massive leap ahead of the competition. Great data work transforms raw information into a source of incredible strategic value.

Take the telecommunications industry, for example. These companies are swimming in massive streams of data from network traffic and customer interactions every single second. By preprocessing this tidal wave of information, they can:

- Boost Network Performance: Spot usage patterns to predict and prevent network congestion, keeping customers happy and connected.

- Slash Customer Churn: Analyze behavior to identify customers who might be about to leave, then step in with a personalized offer to keep them.

- Improve Service Quality: Use clean location data to pinpoint areas with spotty coverage and plan infrastructure upgrades where they’ll make the biggest impact.

It’s a similar story in the high-stakes world of finance, where data preprocessing is the first line of defense against fraud. Financial institutions painstakingly clean and structure transaction data to train their fraud detection models. These systems can then spot unusual activity in real-time, protecting both the bank and its customers from huge losses. That kind of proactive security is only possible when it’s built on a bedrock of pristine data.

The Exploding Market for Data Excellence

The business world’s appetite for high-quality data is driving incredible growth in the tools and services that deliver it. The global market for data preparation tools was valued at around USD 6.95 billion in 2025 and is on track to nearly double to USD 14.71 billion by 2030. This boom is largely fueled by the rise of AI and automation, which are making it easier to handle complex data prep tasks. You can dive deeper into these market trends with the full research from Mordor Intelligence.

Data preprocessing is what turns your organization's raw data from a potential liability into its most powerful strategic asset. It's the bridge from chaotic information to clear, actionable intelligence.

This market explosion highlights a fundamental shift in thinking. Companies no longer view data as a simple byproduct of doing business; they see it as the raw material for future innovation. At PYCAD, we live this reality every day in the medical imaging space. We at PYCAD, build custom web DICOM viewers and integrate them into medical imaging web platforms. For our clients, preprocessing isn't just a buzzword—it's the critical process that ensures diagnostic algorithms are trained on accurate medical data, which ultimately saves lives. You can see this life-changing work in our portfolio.

At the end of the day, committing to data preprocessing is a commitment to excellence. It’s a powerful statement that your organization values accuracy, trusts its insights, and is ready to make the smartest decisions possible.

Data Preprocessing in Medical Imaging: A Specialized Frontier

When you shift from general business data to the high-stakes world of medical imaging, the rules of data preprocessing change dramatically. We're no longer just tweaking numbers to improve a business metric; we're refining data that can directly shape a patient's diagnosis and their entire course of treatment. This is a field where precision, consistency, and absolute reliability are the bare minimum.

Medical images, typically stored in the complex DICOM (Digital Imaging and Communications in Medicine) format, are a world away from a simple JPEG. These files aren't just pictures. They are dense packages of information, bundling the image itself with a rich layer of metadata—everything from patient details to the specific settings of the scanner. This complexity requires a far more specialized approach to get the data ready for analysis.

The Unique Hurdles of Medical Data

Working with medical images means facing a very specific set of obstacles. The end goal is to prepare this data for advanced analysis and AI models, but getting there is an intricate process. If you're just getting started, our introductory guide on the basics of what is image preprocessing is a great place to build your foundational knowledge.

Here are the main challenges we tackle in this field:

- Handling DICOM Complexity: The very first step is carefully extracting both the image and its critical metadata from DICOM files without losing or corrupting anything. Even a small error in parsing metadata could lead a doctor or an algorithm to completely misinterpret a scan.

- Intensity Normalization: Every scanner is different. An MRI, CT, or X-ray from one manufacturer—or even the same machine on a different day—can produce images with wildly different pixel intensities. Normalization brings everything to a consistent scale, making sure an AI model is comparing actual anatomy, not just the quirks of the hardware.

- Patient Data Anonymization: Protecting patient privacy isn't just good practice; it's a legal and ethical imperative. A non-negotiable step in preprocessing is scrubbing all personally identifiable information (PII) from the DICOM metadata before it ever gets used for research or AI training.

In medical imaging, flawless data preprocessing is not just a technical best practice—it is a clinical necessity. The integrity of every subsequent analysis, from diagnostic AI to surgical planning, depends entirely on this foundational work.

Precision as a Clinical Imperative

Think about an AI model built to spot tumors. If it's only trained on images from one type of scanner without proper normalization, it might completely miss a tumor on a scan from another hospital simply because the image is a bit darker or brighter. This isn't just a hypothetical scenario; it's a real-world problem that medical data scientists solve every single day through meticulous preprocessing.

This is the world we at PYCAD live and breathe. We at PYCAD, build custom web DICOM viewers and integrate them into medical imaging web platforms, where this level of precision isn't just expected—it's the standard.

Ensuring that complex medical data is flawlessly cleaned, anonymized, and normalized is the heart of creating tools that clinicians can actually trust. The powerful solutions we deliver are all built on this deep commitment to data quality. Ultimately, in this specialized frontier, data preprocessing is the silent, essential work that turns a raw medical scan into a life-saving insight. Our portfolio showcases the real-world impact of this dedication.

Navigating the Murky Waters of Data Preprocessing

Let's be honest: data preprocessing isn't always a walk in the park. It’s a journey filled with its own unique set of challenges, the kind that can make even the most experienced data scientists pause and think. But these hurdles aren't stop signs. They're invitations to get creative, to build smarter, and to forge more robust data pipelines.

One of the biggest mountains to climb is simply the sheer volume of data we deal with today. We're talking terabytes, even petabytes, of information. Your standard tools can easily choke on that much data, grinding processing to a halt. This is a daily reality in fields like telecom and IT, where a constant flood of data needs to be tamed before it can offer up any real insights.

To put it in perspective, global mobile data traffic was already hitting an estimated 77.5 exabytes per month back in 2022. That's an almost unimaginable amount of raw information that needs to be handled. You can get a better sense of these trends by exploring the latest data preparation market analysis.

How to Turn Roadblocks into Breakthroughs

It's not just the size of the data that's tricky; it's the shape. Unstructured data like text, images, and audio files don't play by the rules of neat rows and columns. They require specialized approaches to unlock their value. On top of that, we have the critical responsibility of protecting sensitive information throughout the process. To dig deeper into this essential topic, check out our guide on what is data anonymization.

So, how do we get past these common sticking points and come out stronger on the other side? It's all about adopting smarter strategies.

- Bring in the Bots (Automation): Why manually handle repetitive cleaning and transformation? Intelligent tools can automate these tasks, freeing up your experts to focus their brainpower on strategy and complex problem-solving.

- Look to the Cloud: Cloud platforms are built for this. They offer the elastic, scalable power needed to chew through massive datasets without making your local servers cry for mercy.

- Set the Rules (Data Governance): Don't skip this step. Establishing clear standards for data quality and handling is non-negotiable. A solid governance framework is the bedrock of consistency and trust in your data.

Here at PYCAD, we live and breathe these challenges. We at PYCAD, build custom web DICOM viewers and integrate them into medical imaging web platforms, turning raw, complex medical data into a reliable, powerful asset. You can see how we put these principles into action in our portfolio.

By embracing these strategies, you can shift your perspective on data preprocessing—from a necessary chore to your most powerful engine for discovery and innovation.

Your Data Preprocessing Questions, Answered

As we wrap up our deep dive into data preprocessing, let's tackle a few common questions that often pop up. Think of this as clearing the final hurdles, cementing your understanding of this critical process.

What's the Real Difference Between Data Cleaning and Data Preprocessing?

This is a great question, and it's easy to get them mixed up. The best way to think about it is that data preprocessing is the entire multi-course meal, and data cleaning is just one of the first, most important courses.

Data preprocessing is the whole shebang—it’s the umbrella term for every step you take to get your raw data ready for a model. This includes cleaning up messes (like fixing typos or handling missing values), but it also involves so much more, like transforming data to a common scale (normalization) or creatively crafting new, more meaningful predictors (feature engineering).

Seriously, How Much Time Should I Spend on This?

You've probably heard the old saying in data science, and it holds true: expect to spend up to 80% of your project time just preparing the data. It sounds like a lot, I know.

But don't think of it as a delay. It's an investment. Pouring time and effort into this stage is what stops your models from failing spectacularly down the line. It's the foundation that ensures your results are not just accurate, but genuinely trustworthy.

The sweet spot is almost always a hybrid approach. Let automation do the heavy lifting, but have human expertise guide the process. This blend of efficiency and intelligent oversight is where the magic happens.

Can We Just Automate the Whole Thing?

While it’s tempting to dream of a one-click solution, fully automating preprocessing isn't usually the goal. Sure, modern tools are incredible at handling repetitive tasks. They can spot duplicates, scale numbers, and perform other routine jobs in the blink of an eye.

However, the most crucial decisions require a human touch. How should you handle missing patient data in a clinical trial? What new features could you create from existing sensor readings to predict equipment failure? These choices demand context, creativity, and deep domain knowledge—things that algorithms, for all their power, just don't have.

At PYCAD, we live and breathe this balanced approach, especially in the high-stakes world of medical imaging. We at PYCAD, build custom web DICOM viewers and integrate them into medical imaging web platforms, where every single data point matters. Precise, expert-guided preprocessing isn't just a step for us; it's the core of our commitment to turning complex medical data into life-saving clinical insights.

See how our expertise makes a difference by exploring our work at https://pycad.co/portfolio.